A Wild Probot Appeared

Virtually all of Sentry has been open source from the very beginning. Not only is our source code publicly available; our entire development process is visible to everyone on GitHub. This allows us to include the developer community in our daily process and is, alongside making cool welcome gifs for every new employee, probably the most important value in our company culture.

However, as our engineering team has grown, it’s become harder to manage and maintain all our repositories on GitHub. At the time of this writing we have a stunning 150 repositories in our organization, and this number increases every single week. It was for this reason that a couple of months ago we set out to create our very own “Grooming Bot” to help us keep all our repos clean.

We wanted a simple tool that reminds us to review our PRs, handles stale issues, and keeps issues clean of sensitive information. We started building it ourselves, and just a few lines of JavaScript and a few days of work later we had it running.

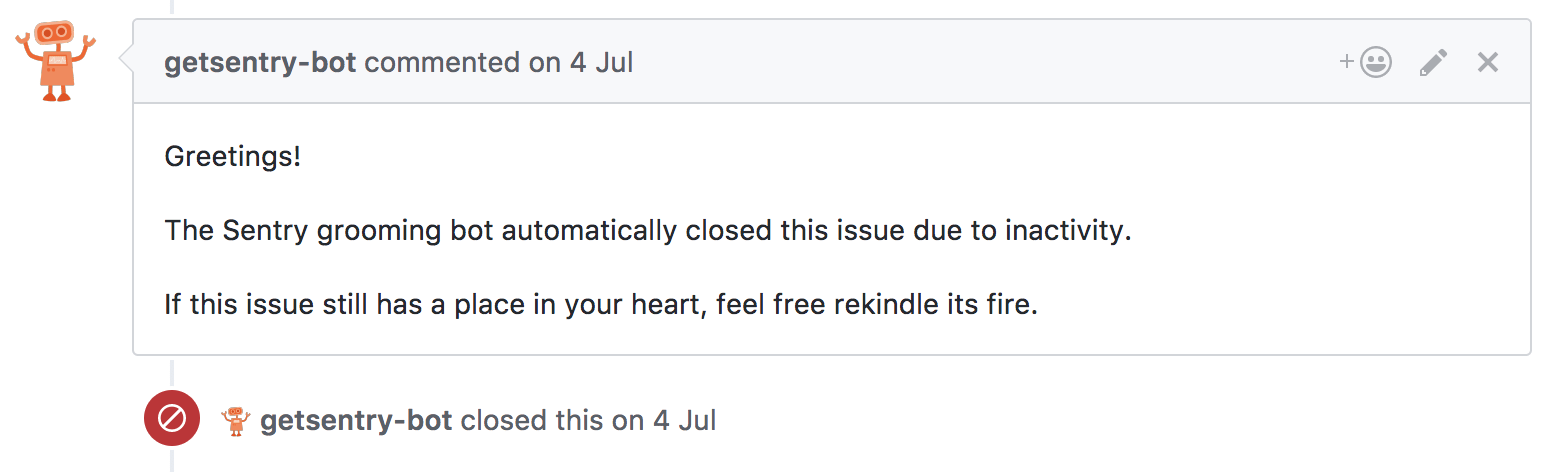

Our old grooming bot

Awesome, but as we moved closer to actually using it in production, something unforeseen happened: A wild Probot appeared!

Probot to the Rescue

Turns out some of our friends at GitHub (with Brandon Keepers leading the way) had built something much more elegant than what we came up with. We immediately jumped on the hype train, since what they’d created was exactly what we envisioned when we started our little side project. Probot has a nice extensible interface and the GitHub integration is well encapsulated, making it a no-brainer to use.

Probot is an application framework built on GitHub Apps (formerly known as Integrations). It hides away the authentication flow and provides a robot interface that lets you attach listeners directly to webhook events. No more need to refresh tokens or poll API endpoints. It looks like this:

```javascript
module.exports = (robot) => {
  // Greet every newly opened issue with a comment
  robot.on('issues.opened', (context) => {
    const body = 'Hello world from Probot';
    // context.issue() fills in owner, repo and issue number from the payload
    return context.github.issues.createComment(context.issue({ body }));
  });
};
```

As you can imagine, we had no trouble porting our code to Probot. Within days we had refactored all functionality, and it’s been working like a charm ever since. In production, no less.

Leaving the Dry Dock

To be clear, we didn’t release it into the wild right away. It took us a couple of iterations to get everything right and discover all the edge cases. To get there, we needed to test with our actual GitHub organization, while also avoiding accidentally going on a rampage and destroying anything there. To do so, we did three things:

- Created a “development app” with limited access rights. When developing on our local machines, we use its token to access the GitHub API instead of the production token.
- Added a DRY_RUN environment variable that disables all side effects. In dry run mode, the bot still issues all read-only API requests and logs its actions, but refrains from anything that could modify outside state.
- Moved the DRY_RUN handling into the dryrun npm package, to mock it more easily in tests.
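A dry-run switch like this can be as small as a guard around every write. The sketch below is hypothetical: isDryRun and sideEffect are illustrative names, not the API of the dryrun package, and it assumes that any non-empty DRY_RUN value other than 0 or false enables dry run.

```javascript
// Hypothetical sketch of a DRY_RUN guard (not the `dryrun` package's API).

// Assumption: any non-empty DRY_RUN other than "0" or "false" enables dry run.
function isDryRun(env = process.env) {
  const value = String(env.DRY_RUN || '').trim().toLowerCase();
  return value !== '' && value !== '0' && value !== 'false';
}

// Performs `action` (a function that modifies outside state) only when not in
// dry run; in dry run it logs what would have happened and resolves to undefined.
async function sideEffect(description, action, env = process.env) {
  if (isDryRun(env)) {
    console.log(`[dry run] would ${description}`);
    return undefined;
  }
  return action();
}

// Usage inside a handler (reads stay unguarded, writes go through sideEffect):
// await sideEffect('comment on the issue', () =>
//   context.github.issues.createComment(context.issue({ body })));
```

Routing every write through one function keeps the dry-run decision in a single place, which is also what makes it easy to mock in tests.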

To our delight, Probot came with built-in support for Sentry. This made it super easy to track errors that we missed during development. All we had to do was set the SENTRY_DSN environment variable, and we were ready to go. This actually helped us find some nasty bugs that we would later have really (really) regretted not catching.

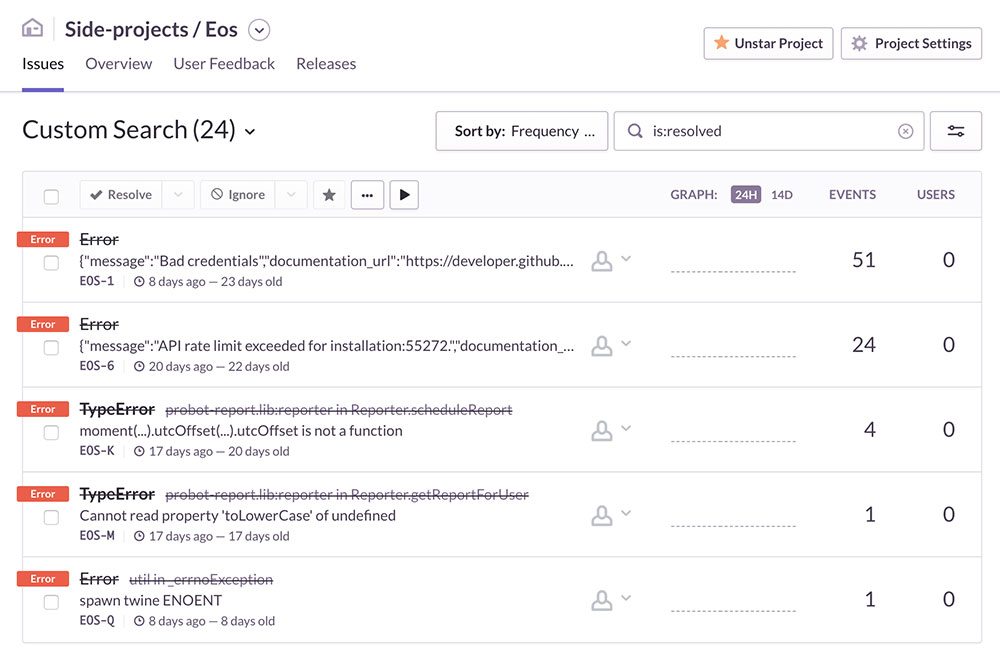

Nasty bugs caught and fixed

Organizing Configuration

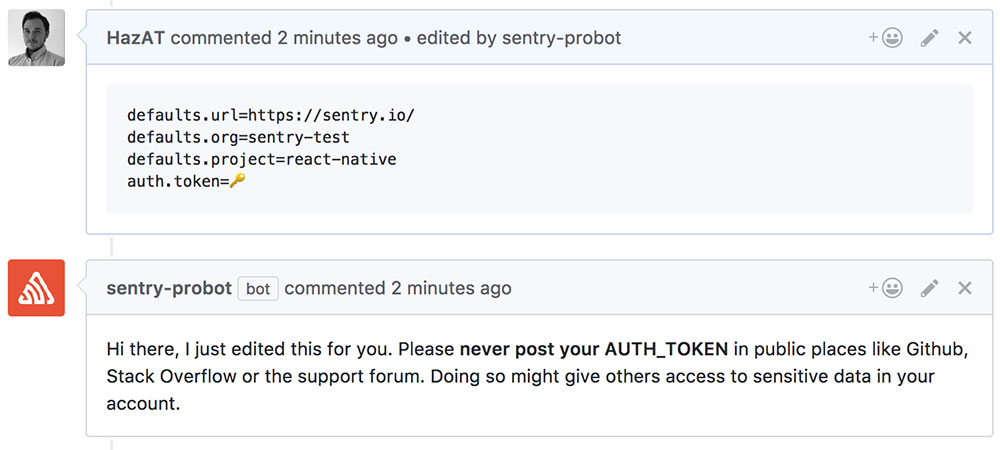

Lately we’ve taken to naming things after Greek gods. In that spirit, the first bot we crafted was “Momos”, the god of satire, censure, writers and poets. As the name suggests, it tries to keep issues, pull requests and comments clean of certain sensitive information. For example, people should not post their auth tokens in issues.

Momos in action

It activates in all repositories that contain a .github/censor.yml file. This is a best practice defined by Probot to prevent bots from going berserk on repositories you don’t want them to touch. A nice side effect is that the same file can hold the bot’s configuration. In our case, it looks like this:

```yaml
message: "Hi there, I just edited this for you."
rules:
  - pattern: "(auth\\.token|SENTRY_AUTH_TOKEN)=\\w+"
    replacement: "$1=🔑"
    message: "Please **never post your AUTH_TOKEN** ..."
```

However, that configuration will likely be the same for all our repositories. It doesn’t make sense to copy and paste it everywhere. Just imagine the amount of work to change the wording if the bot is used on only half of our 150 repositories…
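To make the rules concrete, here is a minimal sketch of how a bot like Momos could apply such a configuration to a comment body. It assumes the YAML has already been parsed into a plain object; the censor function and its return shape are our own illustration, not probot-censor’s actual code. Each pattern is compiled with the global flag so that every occurrence is replaced.

```javascript
// Hypothetical sketch: apply censor.yml-style rules to a piece of text.
// `config` mirrors the parsed YAML: { message, rules: [{ pattern, replacement, message }] }.
function censor(text, config) {
  let result = text;
  const notes = [];
  for (const rule of config.rules || []) {
    // 'g' so that every occurrence is replaced, not just the first one
    const replaced = result.replace(new RegExp(rule.pattern, 'g'), rule.replacement);
    if (replaced !== result) {
      result = replaced;
      if (rule.message) notes.push(rule.message);
    }
  }
  const changed = result !== text;
  // Only produce a comment when something was actually censored
  const comment = changed ? [config.message, ...notes].filter(Boolean).join('\n\n') : null;
  return { text: result, changed, comment };
}

// The configuration above, as it would look after YAML parsing
const config = {
  message: 'Hi there, I just edited this for you.',
  rules: [{
    pattern: '(auth\\.token|SENTRY_AUTH_TOKEN)=\\w+',
    replacement: '$1=🔑',
    message: 'Please **never post your AUTH_TOKEN** ...',
  }],
};

// censor('SENTRY_AUTH_TOKEN=abc123', config).text === 'SENTRY_AUTH_TOKEN=🔑'
```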

For this reason, we created probot-config, a small Probot extension that allows repositories to inherit base configurations. This allows us to move the configuration to a central place and still opt in to the bot on a per-repository basis. In each repository, we now only have to add this:

```yaml
_extends: some-other-repo
```

In case you’re curious, Momos lives at https://github.com/getsentry/probot-censor.
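Conceptually, resolving _extends is a recursive lookup followed by a merge. The sketch below assumes a synchronous, hypothetical loadConfig(repo) that returns the parsed configuration file (or null), and a shallow merge in which the extending repository’s keys win. The real probot-config fetches files through the GitHub API, and its exact merge semantics may differ; the repository names are placeholders.

```javascript
// Hypothetical sketch of `_extends` resolution (not probot-config's code).
// loadConfig(repo) is an assumed loader returning a parsed config object or null.
function resolveConfig(repo, loadConfig, seen = new Set()) {
  if (seen.has(repo)) {
    // Guard against a chain of _extends that loops back on itself
    throw new Error(`Circular _extends: ${[...seen, repo].join(' -> ')}`);
  }
  seen.add(repo);

  const config = loadConfig(repo);
  if (!config) return {};

  const { _extends: base, ...own } = config;
  if (!base) return own;

  // Shallow merge: keys defined in this repository override the base config
  return { ...resolveConfig(base, loadConfig, seen), ...own };
}

// Example: a repository that only points at the central configuration
const files = {
  'some-other-repo': { message: 'Hi there, I just edited this for you.', rules: [] },
  'my-repo': { _extends: 'some-other-repo' },
};
const loadConfig = (repo) => files[repo] || null;
```

A shallow merge keeps the behavior predictable: a repository that overrides rules replaces the whole list rather than appending to it.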

Taking Asynchronous Action

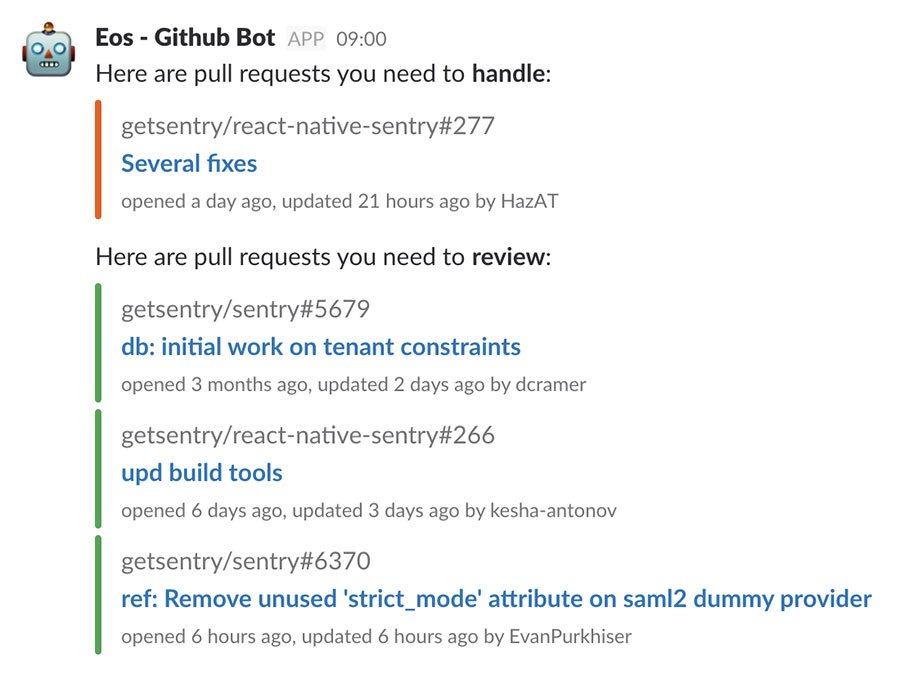

Next, we turned our attention to a bot called “Eos”, named after the goddess of the dawn in Greek mythology. It sends our engineering team friendly emails, reminding them to review their PRs and tackle newly created issues on repositories they’re watching.

An Eos email

And since we use Slack for communication, we also created a Slack integration (for those who are too hip to use good ole email).

Eos in Slack

While creating this bot, we ran into the problem of asynchronous actions. Because the bot sends reminders at scheduled times rather than in response to a webhook, it needs to keep state and wake up on its own. In our case, it needs to know about all members of the organization. There are three cases to take care of:

- When the bot starts up, it can already be installed on organizations with existing members.
- While the bot is running, it can be installed on new organizations with existing members.
- While installed, an organization can add or remove members.

To deal with this, we create an instance of our bot for every installation and delegate certain webhooks to those instances. The code below should roughly illustrate how that works. Of course, there is more going on behind the scenes, but this should get the idea across:

```javascript
module.exports = async (robot) => {
  // One Instance (our own class, not shown) per installation, keyed by login
  const instances = {};

  // Without an installation ID, robot.auth() authenticates as the app itself,
  // which is what we need to list all of its installations
  const github = await robot.auth();

  github.paginate(github.apps.getInstallations({ per_page: 100 }), (result) => {
    result.data.forEach((installation) => {
      // Use the organization's login to identify the instance later on
      instances[installation.account.login] = new Instance(robot, installation);
    });
  });

  robot.on('installation.created', ({ payload: { installation } }) => {
    instances[installation.account.login] = new Instance(robot, installation);
  });

  robot.on('installation.deleted', ({ payload: { installation } }) => {
    delete instances[installation.account.login];
  });

  robot.on('member.added', (context) => {
    instances[context.repo().owner].addMember(context.payload.member);
  });

  robot.on('member.removed', (context) => {
    instances[context.repo().owner].removeMember(context.payload.member);
  });
};
```

The instances internally schedule reports, e.g. at 9am local time. Probot does not actively support this case: there is no documented way to obtain an authenticated API client outside of a webhook handler. Simply storing the client from one of the webhooks would not work either, as its installation token expires after an hour.

Looking at the Probot source code or extensions like probot-scheduler reveals an internal API that can be used to re-authenticate on demand. All we need to do is remember the installation:

```javascript
// Inside an Instance method; this.installation was stored by the constructor
const github = await this.robot.auth(this.installation.id);
// now github is authenticated, use like in a webhook
```

You can find the code open-sourced on GitHub: https://github.com/getsentry/probot-report

Disclaimer: This report bot is heavily focused on our setup, so in order to use it you will probably need to fork it or help us make it more general-purpose.
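Putting scheduling and re-authentication together, an instance could look roughly like the following. This is a hypothetical sketch, not the code of probot-report: the reportHour parameter, the members map and the sendReport stub are our own placeholders, and “local time” here means the server’s local time. The key point is that it calls robot.auth(installation.id) each time the timer fires instead of reusing a client captured from a webhook.

```javascript
// Hypothetical sketch of a per-installation Instance (not probot-report's code).

// Milliseconds from `now` until the next `hour`:00 in the server's local time.
function msUntilNextHour(hour, now = new Date()) {
  const next = new Date(now);
  next.setHours(hour, 0, 0, 0);
  if (next <= now) {
    next.setDate(next.getDate() + 1); // already past that hour today
  }
  return next - now;
}

class Instance {
  constructor(robot, installation, reportHour = 9) {
    this.robot = robot;
    this.installation = installation;
    this.reportHour = reportHour;
    this.members = new Map(); // login -> member payload
    this.timer = null;
  }

  addMember(member) {
    this.members.set(member.login, member);
  }

  removeMember(member) {
    this.members.delete(member.login);
  }

  // Schedules the next report; each run schedules the one after it.
  start() {
    this.timer = setTimeout(async () => {
      try {
        // Fresh client on every run: a client stored from a webhook may have expired
        const github = await this.robot.auth(this.installation.id);
        await this.sendReport(github);
      } catch (err) {
        console.error(err);
      }
      this.start();
    }, msUntilNextHour(this.reportHour));
  }

  stop() {
    clearTimeout(this.timer);
  }

  // Placeholder: gather open PRs and issues for this.members and send them
  async sendReport(github) {}
}
```

Recomputing the delay on every run, rather than using a fixed 24-hour interval, keeps the report anchored to the same wall-clock hour.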

Interacting with External Services

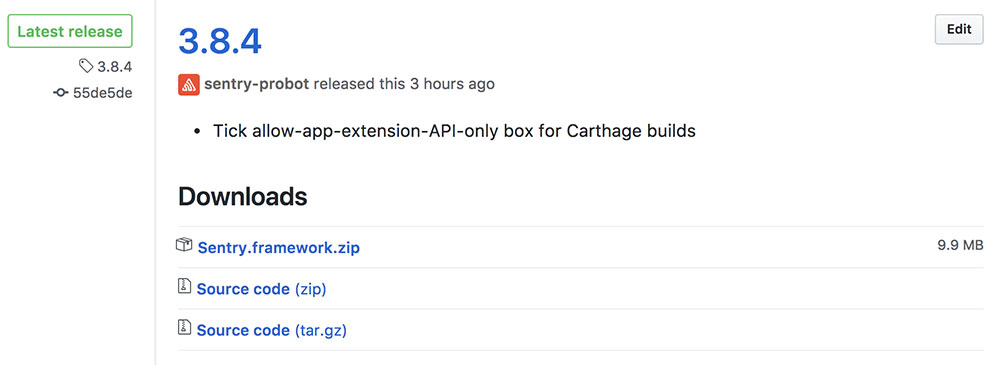

Finally, we set out to streamline all the different releases we have to create and build across our repos, and built a bot for it named Prometheus (not just a terrible movie, but also the Greek god who crafted people). There are multiple reasons why we do not do this directly in CI, which we will highlight in a separate post.

We continuously build on Travis or AppVeyor and upload artifacts to a bucket on S3. Once CI is done with a build, it updates the status checks on GitHub, which our bot listens to. That way, our bot never attempts partial releases, as it can wait for all status checks to go green. Once they have, it creates a release on GitHub or uploads artifacts to external indices like PyPI and npm.
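The “wait until everything is green” rule can be expressed as a small pure function. This sketch assumes the input is the statuses array from GitHub’s combined status endpoint (one latest entry per context, each with context and state) and that the bot is configured with the contexts it requires; the context names below are examples only.

```javascript
// Hypothetical sketch: decide whether a commit is ready to be released.
// `statuses` holds one entry per context, e.g. from the combined status API.
function releaseState(statuses, requiredContexts) {
  const byContext = new Map(statuses.map((s) => [s.context, s.state]));
  const states = requiredContexts.map((context) => byContext.get(context));

  if (states.some((state) => state === 'failure' || state === 'error')) {
    return 'failed'; // never release a broken build
  }
  if (states.every((state) => state === 'success')) {
    return 'ready'; // all required builds finished successfully
  }
  return 'pending'; // some builds are still running or have not reported yet
}

// Example contexts for a project built on both Travis and AppVeyor
const required = [
  'continuous-integration/travis-ci/push',
  'continuous-integration/appveyor/branch',
];
```

Treating a missing context as pending, rather than ignoring it, is what prevents a partial release when one CI provider has not reported yet.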

It can also parse the corresponding entry in CHANGELOG.md and add it as the release body:

Prometheus in action
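Extracting the changelog entry boils down to finding the heading for the released version and collecting everything up to the next heading of the same or a higher level. The sketch assumes entries are Markdown headings that start with the version number, optionally prefixed with v (for example ## 1.2.0); real changelogs vary, so treat this as an illustration rather than Prometheus’s actual parser.

```javascript
// Hypothetical sketch: return the CHANGELOG.md section for `version`, or null.
function changelogEntry(markdown, version) {
  const heading = /^(#{1,6})\s+v?(\S+)/; // e.g. "## 1.2.0" or "## v1.2.0 (date)"
  let level = 0; // heading level of the matched entry, 0 while still searching
  const body = [];

  for (const line of markdown.split(/\r?\n/)) {
    const match = heading.exec(line);
    if (level === 0) {
      if (match && match[2] === version) level = match[1].length;
      continue;
    }
    // A heading of the same or a higher level starts the next entry
    if (match && match[1].length <= level) break;
    body.push(line);
  }

  return level === 0 ? null : body.join('\n').trim();
}
```

Subheadings inside an entry (for example a ### Bug Fixes section under ## 1.2.0) are kept, since only same-or-higher-level headings end the entry.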

To interact with these external systems, the bot requires access tokens configured in environment variables. You will have to run a separate instance of this bot to use it in your organization. We chose this way over a centrally hosted bot to keep secrets truly private.

Training Probot to Evolve

Probot is still young and in rapid development. Just look at how active its issue tracker is. Naturally, we ran into some of its limits while working with it over the last several weeks:

Probot could help with re-authenticating the GitHub client when its internal token expires. This would remove the need for quirky calls to robot.auth() and further simplify asynchronous tasks.

The GitHub API wrapper tries to avoid rate limits by throttling requests. However, this does not work for search requests, which have a significantly lower quota. Ideally, Probot would assist in avoiding these limits and provide a way to queue up requests, defer action when a rate limit is hit, and replay failed requests.
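To illustrate what deferring and replaying could look like, here is a hypothetical sketch of a sequential request queue. It relies on GitHub’s documented retry-after and x-ratelimit-reset headers, but it assumes the client’s errors expose the response headers as err.headers, which depends on the GitHub client version. RequestQueue and retryDelayMs are our own names, not part of Probot.

```javascript
// Hypothetical sketch: queue requests and replay them after a rate limit.

// How long to wait before retrying, or null if this is not a rate limit error.
function retryDelayMs(headers, now = Date.now()) {
  if (headers['retry-after']) {
    return Number(headers['retry-after']) * 1000; // seconds to wait
  }
  if (headers['x-ratelimit-remaining'] === '0' && headers['x-ratelimit-reset']) {
    // x-ratelimit-reset is a UTC epoch timestamp in seconds
    return Math.max(0, Number(headers['x-ratelimit-reset']) * 1000 - now);
  }
  return null;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

class RequestQueue {
  constructor(maxRetries = 3) {
    this.maxRetries = maxRetries;
    this.tail = Promise.resolve(); // last queued request; never rejects
  }

  // Queues `request` (a function returning a promise); runs one at a time.
  push(request) {
    const run = async () => {
      for (let attempt = 0; ; attempt++) {
        try {
          return await request();
        } catch (err) {
          // Assumption: rate limit errors carry the response headers as err.headers
          const delay = err.headers ? retryDelayMs(err.headers) : null;
          if (delay === null || attempt >= this.maxRetries) throw err;
          await sleep(delay); // defer, then replay the same request
        }
      }
    };
    const result = this.tail.then(run);
    this.tail = result.catch(() => {}); // keep the queue going after failures
    return result;
  }
}
```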

There is no streamlined model for persistent storage yet. While some in the community have suggested embedding a lightweight database in the core of the framework, we tend more towards using config files in repositories. Probot should continue to focus on interacting with GitHub, while all other concerns remain up to specific apps and their developers.

Centralized configuration for organizations, however, should be a concern for Probot. We feel that copying and pasting configuration files is a no-no and should be avoided, just like duplicate code. While probot-config is far from complete, it is certainly a step in the right direction.

Finally — and this is not news to the Probot team — the process of setting up a new GitHub App is still too manual. Most of the steps involved, from creating the app to obtaining private keys and the token, could be automated by the setup wizard.

We are very pleased that Probot came to life and helped us automate some of our repetitive tasks. The excellent documentation and active community made it fun to take on issues we had pushed off in the past. Since Probot is open source, we are also trying to help and improve it as much as we can. We definitely encourage you to check it out and join the conversation on Slack.