How we optimized Python API server code 100x

Python code optimization may seem easy or hard depending on the performance target. If the target is “best effort”, carefully choosing the algorithm and applying well-known common practices is usually enough. If the target is dictated by the UX, you have to go down a few abstraction layers and hack the system sometimes. Or rewrite the underlying libraries. Or change the language, really.

This post is about our experience in Python code optimizations when whatever you do is not fast enough. I personally had much fun undertaking the challenges and squeezing API response times under one second. So much fun, in fact, that we’ve got enough notes for a series of blog posts.

By writing “we” I mean the engineers working at Athenian. Athenian offers a SaaS to help engineering leaders build a continuous improvement software development culture. To translate from landing-pagish, we mirror GitHub and JIRA metadata to our own DB, analyze it, and display metrics and charts in the SPA. For now. The plan is to conquer the world, of course.

Those naughty coroutines

The API request processing typically balances between two poles: CPU and IO wait. There is no clear separation between them; like yin and yang, they dance together hand in hand with sophisticated relationships. If one profiles a request, they will see a messy DAG of function calls that can project to CPU and IO occupation axes. Let’s consider this simplified code:

await asyncio.gather(query_sql_a(), query_sql_b(), query_sql_c())We launch three coroutines that request data from a SQL DB. Athenian uses PostgreSQL, so let’s imagine that we work with PostgreSQL. Each coroutine passes through three stages:

(CPU) Prepare the request to PostgreSQL and send it.

(IO wait) Wait until PostgreSQL responds.

(CPU) Read the response and convert it to Python objects.

Let’s suppose that (1) and (3) both elapse one second for each of the coroutines, and that PostgreSQL is infinitely powerful and always requires 5 seconds to compute the response for query_sql_a, 3 seconds for query_sql_b, and 1 second for query_sql_c. This doesn’t mean that, for example, query_sql_a will always spend 5 seconds in IO wait (2), because Python can only execute one of the three coroutines at each moment of time.

asyncio.gather launches coroutines in the order of passed arguments. That’s not written in the documentation and must be an implementation detail, but it’s important to consider: we will first execute (1) of query_sql_a, then (1) of query_sql_b, then (1) of query_sql_c, then wait one second while PostgreSQL is busy, then execute (3) in the opposite order.

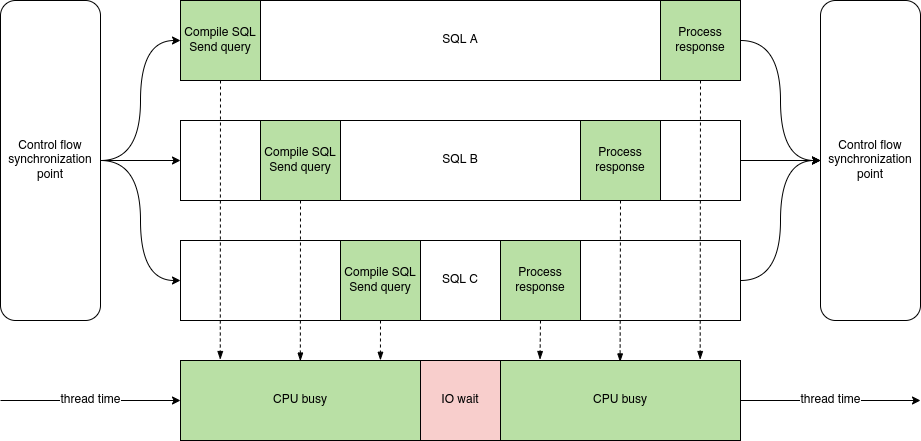

Execution plan of A, B, C coroutines. CPU time is 86%, IO wait time is 14%. Image by Author.

According to the execution plan, we bottleneck in the CPU: 86% of the OS thread time CPU was doing some useful work. Now consider a different order of launching coroutines:

await asyncio.gather(query_sql_c(), query_sql_b(), query_sql_a())

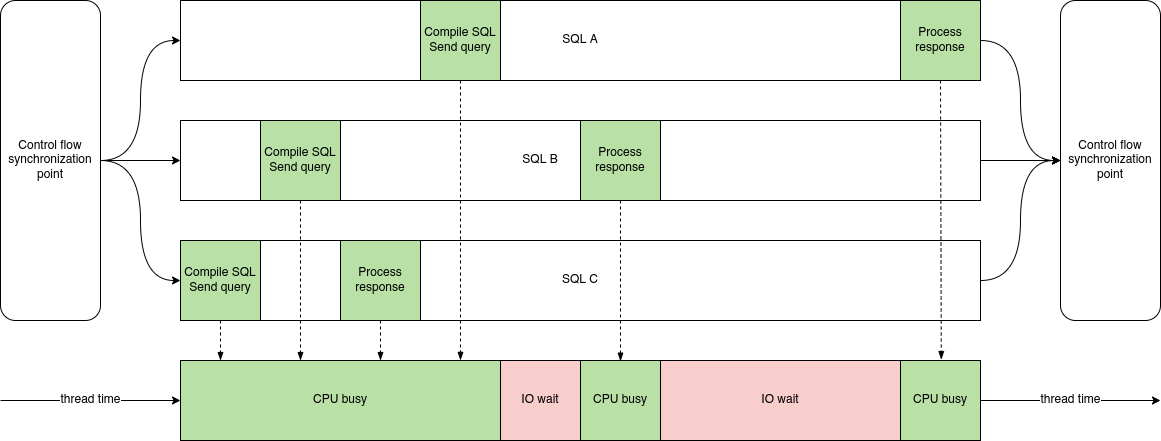

Execution plan of C, B, A coroutines. CPU time is 60%, IO wait time is 40%. Image by Author.

The second execution plan demonstrates how bad things go if we don’t guess the optimal order in which we should launch coroutines. The wall time increases from 7s to 10s — by 43%. We no longer heavily bottleneck in the CPU (60% vs. 86%).query_sql_c stage 3 competes with query_sql_a stage 1 and wins or loses depending on the event loop internals.

I am writing about Python code optimizations here, so I will not cover such outstanding issues as SQL performance and reducing individual IO wait-s. Hence my advice would be

Try to pass coroutines in

asyncio.gather()ordered by the expected IO wait time descending. That is, the first argument should be the coroutine with the highest expected IO wait, and so on.

Real example: we have a spot in the code where we gather ~10 coroutines. When I ordered them according to the mentioned heuristic, the average overall execution time decreased x2.

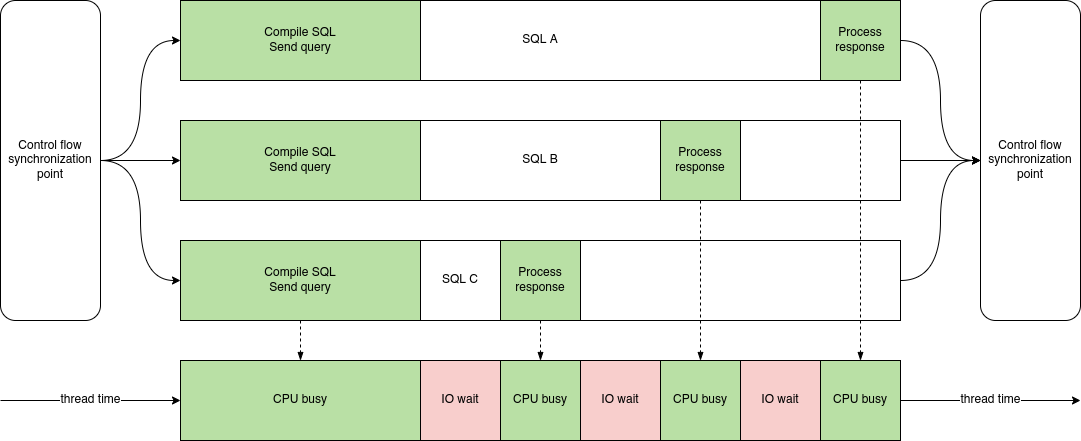

Would it make sense to order arguments of a hypothetical thread_gather that launches and joins threads instead of coroutines? Of course not. Would it be faster to launch threads instead of coroutines in my example? Actually, coroutines perform better given the GIL:

Execution plan of A, B, C threads. CPU time is 66%, IO wait time is 33%. Image by Author.

Shared in union

Our API uses SQLAlchemy Core to generate SQL (no ORM). There are quite a few places where some conditions in WHERE repeat. One example is the replacement of OR with UNION ALL: instead of

SELECT * FROM table WHERE ((a = 1 and b = 2) OR (a = 2 and b = 1)) AND c = 3```

we write

```Python

(SELECT * FROM table WHERE a = 1 and b = 2 and c = 3)

UNION ALL

(SELECT * FROM table WHERE a = 2 and b = 1 and c = 3)Why UNION ALL is usually better in such a scenario is a topic of another blog post. Let’s focus on how UNION ALL looks in SQLAlchemy:

union_all(select([table]).where(and_(a == 1, b == 2, c == 3)),

select([table]).where(and_(a == 2, b == 1, c == 3)))Imagine that instead of c = 3 there is a big expression, with variadic IN, etc. — constructing such an object twice is expensive. Instead, we can write:

shared_cond = c == 3

union_all(select([table]).where(and_(a == 1, b == 2, shared_cond)),

select([table]).where(and_(a == 2, b == 1, shared_cond)))This won’t work for every SQLAlchemy engine and for SQLite in particular because SQLAlchemy generates ?, ?, ? as parameter placeholders there and not indexed references $1, $2, $3. Nevertheless, together with the upgrade from SQLAlchemy 1.3 to 1.4 where they improved the treatment of big IN-s we got 1.5-2x faster SQL compilation.

From rows to columns

We query PostgreSQL through asyncpg. asyncpg fetches return rows like nearly any other relational DB drivers. However, our analytics API needs to build pd.DataFrame-s which are columnar: the values of each returned column are stored together. Moreover, before pandas 2.0, several columns of the same dtype are stored together in the same numpy array aka the block.

Naively constructing DataFrame using DataFrame.from_records() is extremely inefficient. Suppose that PostgreSQL knocks at asyncpg’s door. Here is what comes next:

Parse PostgreSQL wire protocol and create Python objects.

Insert those Python objects into created

asyncpg.Record-s.Iterate rows and insert Python objects into numpy array of dtype

object.Infer better dtypes (e.g. int, datetime, etc.) and convert Python objects to them.

Consolidate — BlockManager, the special pandas 1.x quirk, merges numpy arrays of the same dtype together.

Given pure object columns (e.g., with SQL nulls), we touch their reference counters 4 times: in (1), (3), (4), and (5). asyncpg.Record is used as an auxiliary container and can be excluded. Moreover, we don’t have to perform (4) because we already know the correct dtypes from the SQL query. The end-to-end transition from pgproto to ready DataFrame, therefore, takes above 500ms with ~20 object and ~20 typed columns and 300,000 rows on a modern x86 CPU.

The ideal pipeline would be:

Parse PostgreSQL wire protocol and write directly to numpy arrays without materializing strictly typed objects.

Construct BlockManager without redundant consolidation.

Pure object values would increment reference counters only once. The whole thing would elapse ~5ms by mere estimation. However, unfortunately, parsing pgproto and constructing asyncpg.Record-s reside deep inside Cython and even C code of asyncpg, so making the ideal pipeline means forking the project. We will surely fork it before conquering the world but have had to find a compromise in the meantime.

Our current compromise pipeline:

Parse PostgreSQL wire protocol and create Python objects.

Insert those Python objects into created

asyncpg.Record-s.Iterate rows and insert Python objects into two numpy arrays of dtype

object— one for dtyped and one forobjectcolumns.Convert dtypes given the prior knowledge from the SQL query.

Construct the blocks and wrap them in BlockManager.

Now pure object values increment the refcounters twice: in (1) and (3). We no longer try to guess the types. The memory copy bloat is significantly reduced. Our measurements indicate at least 10x faster conversion times, around ~50ms.

The actual source code can be found in the repository where our API lives: athenianco/athenian-api-open. It’s not universal and there is not enough will to make it a proper open-source library. Feel free to adapt it to your needs! We distribute those files under the MIT license.

Let me finish this section by giving generic advice.

Avoid object columns in pandas DataFrame-s whenever possible. Operations with them are much slower than with properly typed ones.

Iterating lists of tuples without GIL

A very specific objective: optimize the iteration over raw asyncpg.Record-s. It is indeed possible to work with them directly with GIL released. Cython code follows:

cdef extern from "asyncpg_recordobj.h":

PyObject *ApgRecord_GET_ITEM(PyObject *, int)

cdef extern from "Python.h":

# added nogil -> from cpython cimport ...

# these are the macros that read from the internal ob_items

PyObject *PyList_GET_ITEM(PyObject *, Py_ssize_t) nogil

cdef nogil_iter(rows: list[asyncpg.Record]):

cdef:

Py_ssize_t i, size

PyObject *record

PyObject *value

size = len(rows)

with nogil:

for i in range(size):

record = PyList_GET_ITEM(<PyObject *>rows, i)

value = ApgRecord_GET_ITEM(record, 0)asyncpg_recordobj.h is a simplification of the real recordobj.h in asyncpg:

typedef struct {

PyObject_VAR_HEAD

// asyncpg specifics begin here

// if they add another field, we will break spectacularly

Py_hash_t self_hash;

PyObject *desc; // we don't care of the actual type

PyObject *ob_item[1]; // embedded in the tail, the count matches len()

} ApgRecordObject;

#define ApgRecord_GET_ITEM(op, i) (((ApgRecordObject *)(op))->ob_item[i])Depending on what the type value has, the nogil hack may be handy or appear useless. For example, if value is a string and your CPython stores Unicode strings in UTF-8 internally, <const char *>PyUnicode_Data(value) will work. If value is an integer, PyLong_AsLong(value) will work, too. But working with complex classes will require taking the GIL.

The speedup should be ~10x.

In case we work with tuples instead of asyncpg.Record-s, we can slightly change the code above to remain functional:

cdef extern from "Python.h":

# added nogil -> from cpython cimport ...

# these are the macros that read from the internal ob_items

PyObject *PyList_GET_ITEM(PyObject *, Py_ssize_t) nogil

PyObject *PyTuple_GET_ITEM(PyObject *, Py_ssize_t) nogil

cdef nogil_iter(rows: list[tuple]):

cdef:

Py_ssize_t i, size

PyObject *record

PyObject *value

size = len(rows)

with nogil:

for i in range(size):

record = PyList_GET_ITEM(<PyObject *>rows, i)

value = PyTuple_GET_ITEM(record, 0)You’d better not mistake with indexing both asyncpg.Record-s and tuples because you’ll otherwise immediately catch a dragon in native code.

Zero-copy (de)serialization

We currently store various precomputed data in PostgreSQL. We fetch it according to many filters coming from the application logic. The collected profile and traces in Sentry explicitly showed that we sometimes spent too much time in data serialization during INSERT INTO … VALUES and deserialization — the creation of Python objects while parsing pgproto that I mentioned in one of the previous sections.

We were able to optimize that hot spot by employing a special, limited, immutable data structure based on structured numpy arrays. In a nutshell, it is an array wrapper around bytes. That’s the only item in __slots__, really.

When we want to extract some field "foobar" from the structure, we execute:

When we want to extract some field "foobar" from the structure, we execute:

@property

def foobar(self):

return self._array["foobar"][0]Our serialization is zero copy:

def serialize(self):

return self._array.view(np.uint8).dataAnd the deserialization is nothing, too:

def __init__(self, data: bytes):

self._array = np.frombuffer(data, dtype=self.dtype, count=1)dtype looks like np.dtype([("foobar", int), ("baz", "datetime64[ns]")])

The secret weapon of our structure is very efficient conversion to Pandas DataFrame:

concat_bytes = b"".join([x.serialize() for x in rows])

boss = np.frombuffer(concat_bytes, dtype=dtype, count=len(rows))

pd.DataFrame({"foobar": boss["foobar"], "baz": boss["baz"]})The concatenation of bytes can be further optimized with nogil in Cython.

The actual implementation is more complex. It supports:

Scalar non-

objectfields that numpy has, including unicode strings and blobs.Variadic length arrays of those types.

Properties generate automatically.

Optional mutable addon fields (not serialized).

This is an example:

@numpy_struct

class PullRequestFacts:

class Immutable:

created: "datetime64[s]"

first_commit: "datetime64[s]"

...

class Optional:

repository_full_name: str

...It’s hard to be faster than zero copy and O(1). @numpy_struct gave us at least 10–50x performance improvement compared to pickle and storing fields in individual SQL table columns.

There are drawbacks, however:

We cannot work with arbitrary types.

We cannot push down filters over the fields to SQL.

So @numpy_struct is not a universal solution to all the problems.

Pandas without Pandas

Pandas 1.x is a microperformance dumpster fire. That’s official. At the same time pandas is very convenient and overall is a great, well-documented tool.

We had to rewrite certain parts of the API code in favor of low-level numpy array manipulation. Let me give a few examples. The first is the trivial extraction of sub-dataframe by some condition on the columns.

df = pd.DataFrame({"a": [...], "i": [...]}).set_index("i")

df[df["b"] > 10]We do it more verbose but more efficient:

df.take(np.flatnonzero(df["a"].values > 10))If we call this in a loop with a few hundred repetitions, and the dataframe size is less than a hundred rows, our extraction runs an order of magnitude faster. It happens because df[...] selects by index values and therefore performs unnecessary index lookups, and also because we simply do not execute a lot of underlying glue code.

The second example is executing some function on the values of column “b” grouped by the values of column “a”.

df = pd.DataFrame({"a": [...], "b": [...]})

df.groupby("a")["b"].apply(function)This is an alternative, much faster way to do the same:

arr_a = df["a"].values

arr_b = df["b"].values

keys, indexes, counts = np.unique(

arr_a, return_inverse=True, return_counts=True)

order = np.argsort(indexes) # better when arr_a's dtype is S or U

offsets = np.zeros(len(keys) + 1, dtype=int)

np.cumsum(counts, out=offsets[1:])

for i, key in enumerate(keys):

grouped_b = arr_b[offsets[i]:offsets[i + 1]]

function(key, grouped_b)This code snippet leverages the power of np.unique that can efficiently count unique values (return_counts=True) in an array, and also find first encounters (return_index=True) or map each value to a unique index (return_inverse=True). We sort the elements of arr_a and iterate the groups knowing the size of each group.

{{ DATA.FRAME.GROUPBY }}

Pandas uses a hash table technique for groupby under the hood and thus has a better big-O than sorting, however, high level of abstraction and poor microperformance add a huge linear penalty. The actual speedup depends on the size of the dataframe and the nature of columns “a” and “b”. In our production, the typical boost is 20 to 50x.

It is possible to similarly replace many other operations on top of groupby such as idxmin() or count() and even account for missing values via NaN-s and NaT-s.

We used to follow another approach in the past:

df.groupby("a").grouper.groups.values()The np.unique way avoids materializing the whole list of variable-length array indexes for each group, hence is faster.

Was all of those worth it?

Instead of comparing performance optimization with shaving yaks, I will compare it with training to run a marathon. You start in a completely awful shape, then slowly progress, week by week, yielding slightly better results every time. Until one day you meet the physical requirements and run a marathon. Each kilometer of the race will remind you of the things you went through to be able to run forward.

Athenian API processes hundreds of thousands of items filtered by ten different properties, logically joining several software development activities in one giant queryable DAG, in milliseconds. We started with a really slow MVP codebase two years ago, only 4 months after the company emerged. I feel shame for that code, and that’s a good thing: we didn’t overkill it. Two years after, the same API queries execute ~1000x faster. I nearly scratched the surface of what we did to reach 1000x, and we by no means have finished! The next blog post will summarize our PostgreSQL query optimization experience. Considering only the Python code performance, it has improved ~100x.

TL;DR

I have considered a few tricks with Python code that helped us improve the analytics backend performance. They were:

asyncio.gatherargument ordering by IO wait time.Shared filters in SQLAlchemy Core.

Custom construction of Pandas DataFrame from

asyncpg.Record-s.Iterating lists without GIL in Cython.

Zero-copy (de)serialization data structure.

Replacing pandas

groupbywith pure numpy.

Those tricks gave us two orders of magnitude performance improvement on our workload. The sample source code is on GitHub.

If the product we’re building sounds like something your engineering org needs, check out Athenian.com.