Using Sentry Performance To Make Sentry Performant

Like many companies, Sentry uses feature flags to determine when certain users see certain features. Recently, we decided to switch our feature flag software to an open-source system called Flagr. And while implementing Flagr, we used our new Performance tools to find — and ultimately fix — a serious issue in how we were fetching our flags.

Here’s how we approach feature flags: as the page loads, we make a call to our Flagr instance to fetch all of the flags and cache the results. And while our initial implementation evaluated each flag individually, due to the sheer number of flags we needed to check, it became necessary to switch to a batch evaluation. Fortunately, Flagr has an autogenerated Python library, which made implementing the batch request simple enough:

def bulk_has(features, actor):

with sentry_sdk.start_span(op="feature.flagr.bulk_has"):

# generate feature list, check if cached

with sentry_sdk.start_span(op="feature.flagr.evaluation"):

response = flagr.EvaluationApi(self._client).post_evaluation_batch(

body=flagr.EvaluationBatchRequest(

flag_keys=[feature.name for feature in flagr_features],

entities=entities,

enable_debug=False,

),

# connection, and read timeout

_request_timeout=(0.1, 0.01 * len(flagr_features)),

)

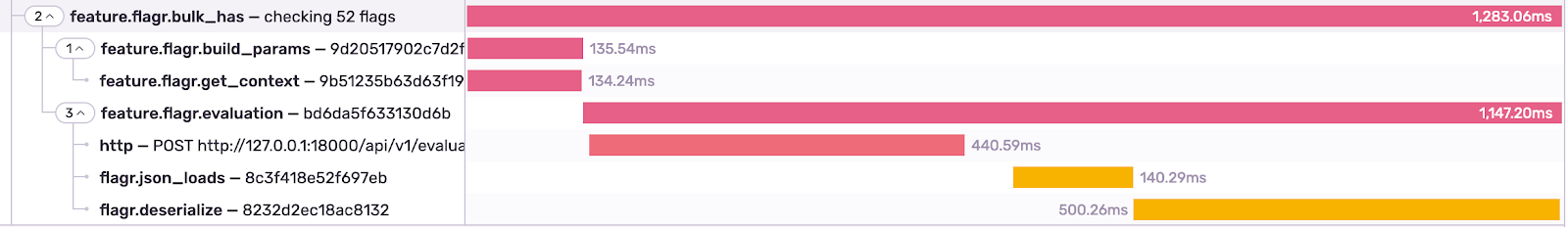

# Parse, cache and return resultsHere, we pass each flag we’re evaluating to the flag_keys parameter, so each flag knows which entity to evaluate. And when we try this with all 50 feature flags, we not only see that the whole function took roughly 900ms, but that the feature.flagr.evaluation span occupied the bulk of that time (500ms).

At first glance, it seems as if evaluating all 50 feature flags is the reason for the 500ms delay. But thanks to Performance’s auto-instrumentation, we can see that the HTTP call to the Flagr API only took 70ms. So the next step here was to manually instrument Flagr’s Python client to get a better understanding of what was going on. Our assumption was that the main performance hit was happening when we were trying to deserialize the flags:

def __deserialize(self, data, klass):

with sentry_sdk.start_span("flagr.deserialize"):

if data is None:

return None

# The rest of the deserialize functionYou can see that the deserialize function is called quite frequently, and is in fact the major cause of the performance issues. We felt that the size of the JSON response may perhaps be related to the amount of time required for deserializing — a hunch that’s supported by how quickly a single flag check takes. The gap after the http span is minuscule — far less than a millisecond:

We can confirm this theory by using Performance on the batch request. We put more manual instrumentation into the Flagr client — specifically, adding the span flagr.json_loads around those lines of code that perform the JSON parsing. While slow, it became clear that the majority of the time is still spent deserializing JSON:

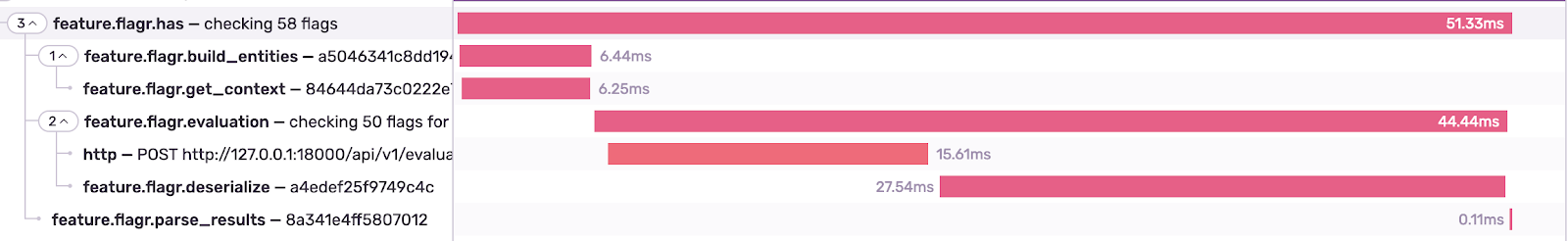

As we dug into the client more, we noticed that deserialization was turning the entire JSON blob (i.e. the flag results) into a Python object. While deserializing JSON is convenient from a development perspective, because we were only using a few JSON fields, the cost just wasn’t worth it. After patching our own deserializer with a simple json.loads(response.data) we saw a huge improvement in speed — so much so that other areas began to look slow by comparison:

Here, we see that the gaps both before and after our evaluation taking up nearly 100ms. And by manually instrumenting suspect code (again), we were able to attribute the delay to the cache writing — and checking — each flag that's in it. Since we know Flagr is taking a fraction of that time, we can conclude that using the cache is slowing us down. By removing the cache, we were able to check all 50 flags in 200ms.

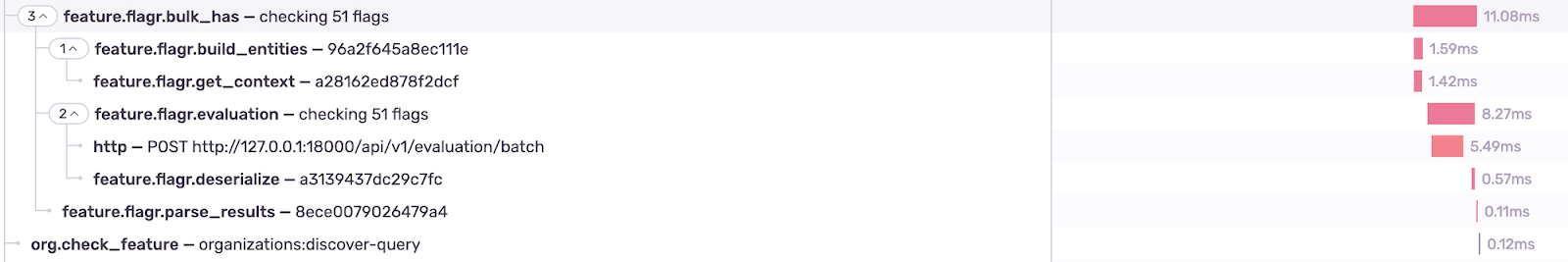

But we can go deeper. Let’s check this large JSON blob that is taking us 140ms to parse. We see there are 2500 objects in the response, far more than the 50 we would expect. It looks like our earlier assumption — that we had to pass an entity for each flag — was incorrect due to the fact that Flagr actually checks each flag for all entities. And since we had passed one entity for each flag, we were essentially performing 2500 checks (i.e. 50x50) rather than the 50 we were expecting. After updating the code so that it checks each flag only once, the time to check all 50 flags — voila —dropped to 10ms:

Thankfully, the work identifying the issues with deserialize was not wasted, as the original deserialize still takes magnitudes longer, and was much easier to identify with a larger set of flags to evaluate.

Feature flags are a great tool to control operational aspects of a system's behavior without destabilizing the codebase. But to use feature flags properly, you need to know which way the wind’s blowing. And for us, Performance does that: it’s the saliva on our index fingers that we hold up to the wind.