Proactively Wrangle Events Using Sentry’s Alert Rules

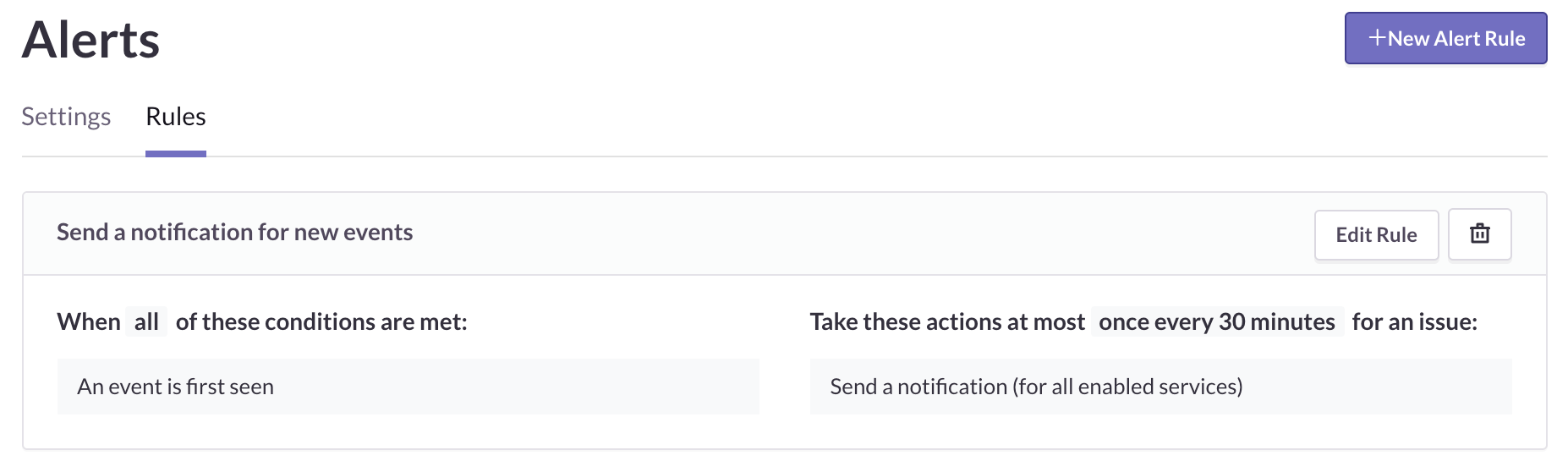

When you start a project in Sentry, we automatically set up a single alert rule that notifies you any time an error is first encountered. The rules for this alert look like this:

Sentry’s default alert rule

In other words, the first time an error generates a new issue, we notify you through whichever services you have connected to Sentry. Initially that’s just email, but it’s easy to add Slack, HipChat, PagerDuty, or a number of other alerting and notification tools.
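To make the default rule concrete, here is a minimal Python sketch of "notify on first seen." It assumes each error is grouped into an issue by a fingerprint string. The `FirstSeenRule` class and its `notify` callback are hypothetical illustrations, not Sentry’s internals.

```python
from typing import Callable, List, Set


class FirstSeenRule:
    """Hypothetical model of the default rule: alert once, when an issue first appears."""

    def __init__(self, notify: Callable[[str], None]) -> None:
        self.notify = notify
        self.seen: Set[str] = set()  # fingerprints of issues already created

    def process(self, fingerprint: str) -> bool:
        """Return True if this event created a new issue (and sent a notification)."""
        if fingerprint in self.seen:
            return False  # existing issue: the default rule stays quiet
        self.seen.add(fingerprint)
        self.notify(f"New issue: {fingerprint}")
        return True


sent: List[str] = []
rule = FirstSeenRule(sent.append)
for fp in ["TypeError@checkout", "TypeError@checkout", "KeyError@login"]:
    rule.process(fp)
print(sent)  # ['New issue: TypeError@checkout', 'New issue: KeyError@login']
```

The repeated `TypeError@checkout` event produces no second notification, which is exactly why this rule alone can’t tell you about errors that keep happening.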

Leaving this as your only alert rule treats every error the same, at least as far as notifications go, when we all know that not every error is equal. There are several different reactions you might have when you’re alerted to a new issue:

Sh*t! Sh*t! Sh*t! I didn’t know about that. Gonna fix!

Hmm, yes, noted. We’ll deal with that soon.

Uh, that’s just a minor JavaScript error experienced by some weirdo who still uses Opera. I don’t care.

OK, I’m gonna filter these to a folder in Gmail and check them when I feel like it.

The needs of your organization could be very different from those of other orgs, or even vary internally from project to project. Maybe you want to be notified not just of new issues but also of errors that keep cropping up (we can send you alerts based on individual groups and rules as often as every five minutes). Maybe you only want to be notified when an error hits a certain threshold.

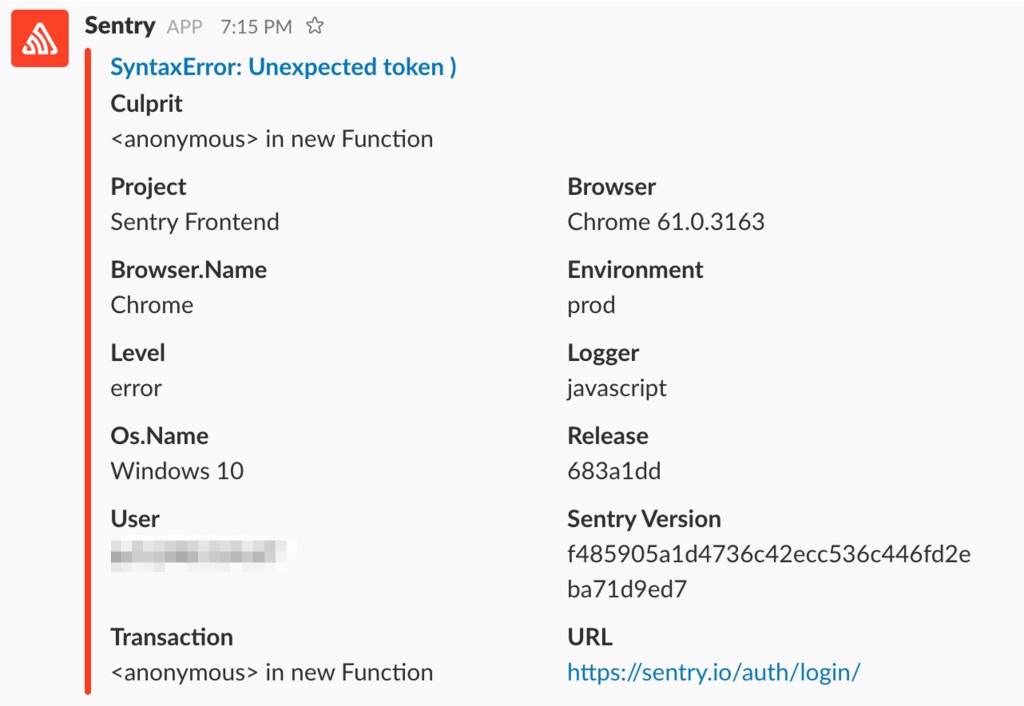

Of course, you can see all this detail (and more) in Sentry. So why do we limit you to just one kind of alert? Well, here’s the thing:

We don’t limit you to one kind of alert. You can create any number of alert rules, each sending notifications to the channels of your choosing based on just about any triggers or filters you define within Sentry.

To highlight the possibilities, let’s dive into a few examples and consider some of the different variables you can use within them:

Send alerts to PagerDuty when sh*t hits the fan

Notify me in Slack when there’s a significant issue to examine

Send everything to email for examination later and historical reference

An alert notification in Slack

All of these alerts (and any others you think up) are built in the same place: the Alerts section of each project’s settings.

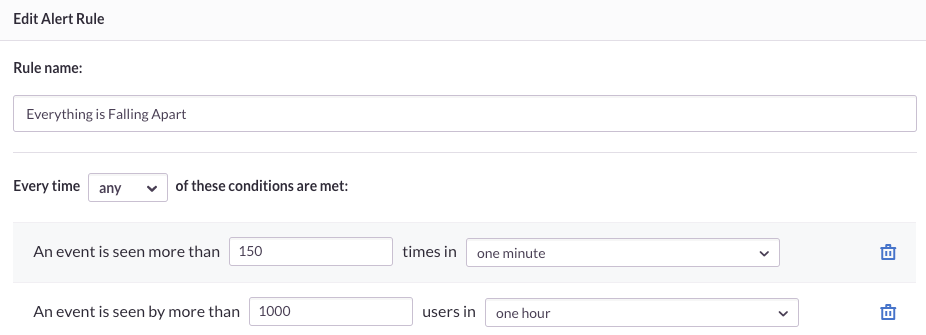

1 — Everything is falling apart

Major product functionality is widely understood to be at its best when it’s actually working. When it isn’t working, especially for a notable segment of your users, you likely want to know immediately so you can fix it.

Our example “Everything is Falling Apart” rule pictured above is triggered under two circumstances:

An event is seen more than 150 times in one minute

An event is seen by more than 1000 individual users in one hour
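Both conditions are sliding-window counts: one counts events, the other counts distinct users. The sketch below shows one way to evaluate them, assuming timestamps in seconds. `FrequencyCondition` and `UniqueUserCondition` are hypothetical names for illustration, not how Sentry actually computes these triggers.

```python
from collections import deque
from typing import Deque, Dict


class FrequencyCondition:
    """Hypothetical: true when more than `limit` events arrive within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.times: Deque[float] = deque()

    def record(self, ts: float) -> bool:
        self.times.append(ts)
        # Drop events that have fallen out of the window.
        while self.times and self.times[0] <= ts - self.window:
            self.times.popleft()
        return len(self.times) > self.limit


class UniqueUserCondition:
    """Hypothetical: true when more than `limit` distinct users are hit within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.last_seen: Dict[str, float] = {}  # user id -> time of that user's latest event

    def record(self, ts: float, user_id: str) -> bool:
        self.last_seen[user_id] = ts
        cutoff = ts - self.window
        # Forget users whose latest event is outside the window.
        self.last_seen = {u: t for u, t in self.last_seen.items() if t > cutoff}
        return len(self.last_seen) > self.limit


too_many_events = FrequencyCondition(limit=150, window=60)      # >150 events in one minute
too_many_users = UniqueUserCondition(limit=1000, window=3600)  # >1000 users in one hour

# 151 events within the same minute trip the first condition on the last event.
fired = [too_many_events.record(t * 0.1) for t in range(151)]
print(fired[-1])  # True

# The same user hitting an error twice still counts as one user.
print(too_many_users.record(0, "u1"), too_many_users.record(1, "u1"))  # False False
```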

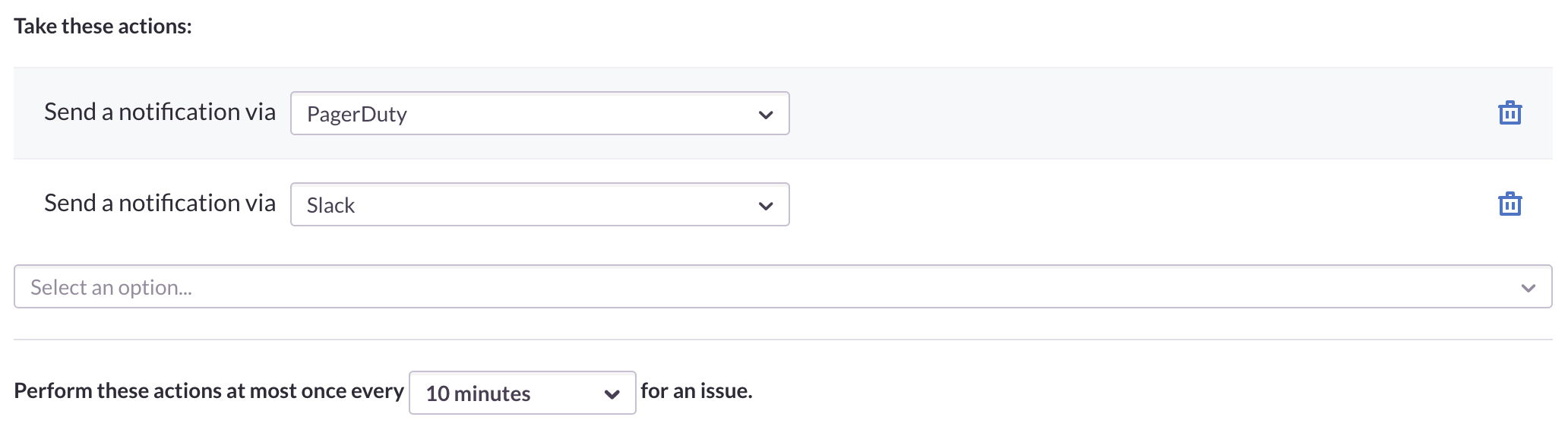

When an issue matches either of these conditions, a notification is sent to Slack and PagerDuty every 10 minutes until the issue is resolved and we’re no longer seeing events that meet these constraints. You can set your own duration threshold anywhere from five minutes to one week.
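The "every 10 minutes until resolved" behavior works like a per-issue throttle. Here is a minimal sketch, assuming times in seconds; `AlertThrottle` and `should_notify` are hypothetical names, and only the five-minute-to-one-week bounds come from the text above.

```python
from typing import Dict, Optional

MIN_INTERVAL = 5 * 60             # five minutes, in seconds
MAX_INTERVAL = 7 * 24 * 60 * 60   # one week, in seconds


class AlertThrottle:
    """Hypothetical per-issue throttle: at most one notification per interval."""

    def __init__(self, interval: int) -> None:
        if not MIN_INTERVAL <= interval <= MAX_INTERVAL:
            raise ValueError("interval must be between five minutes and one week")
        self.interval = interval
        self.last_sent: Dict[str, float] = {}

    def should_notify(self, issue_id: str, now: float, resolved: bool = False) -> bool:
        if resolved:
            self.last_sent.pop(issue_id, None)  # resolved issues stop alerting
            return False
        last: Optional[float] = self.last_sent.get(issue_id)
        if last is None or now - last >= self.interval:
            self.last_sent[issue_id] = now
            return True
        return False  # still inside the quiet period


throttle = AlertThrottle(interval=10 * 60)
print([throttle.should_notify("ISSUE-1", t) for t in (0, 120, 600, 700)])
# [True, False, True, False]
```

Events keep arriving at 120 and 700 seconds, but they fall inside the 10-minute quiet period, so only the first event and the one at the 10-minute mark notify anyone.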

These specific numbers and variables may not be right for you or your company. They may even sound ridiculous if applied to your own situation. That’s why these alerts are so flexible. Not only can you enter whatever duration threshold you want, you can also require that ANY or ALL of the conditions be met before the alert fires. If you select ANY, every condition associated with a rule is treated as its own alert. That means if multiple conditions are met during the same period, each fires its own notification, and the timing of each is governed separately by your threshold rules.
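One way to picture the ANY/ALL difference: under ANY, each satisfied condition becomes its own alert (with its own throttle key); under ALL, the whole rule fires once, and only when every condition holds. This is a sketch under that reading of the paragraph above; `alerts_to_fire` and the event fields are hypothetical.

```python
from typing import Callable, Dict, List

Event = Dict[str, int]
Condition = Callable[[Event], bool]


def alerts_to_fire(rule: str, match: str, conditions: Dict[str, Condition], event: Event) -> List[str]:
    """Return the alert keys that should fire for this event.

    ANY: every satisfied condition is its own alert, keyed "rule:condition",
         so each can be throttled on its own schedule.
    ALL: a single alert keyed by the rule name, only if every condition holds.
    """
    results = {name: cond(event) for name, cond in conditions.items()}
    if match == "any":
        return [f"{rule}:{name}" for name, ok in results.items() if ok]
    if match == "all":
        return [rule] if all(results.values()) else []
    raise ValueError(f"unknown match type: {match!r}")


conditions: Dict[str, Condition] = {
    "burst": lambda e: e["events_per_minute"] > 150,
    "reach": lambda e: e["users_per_hour"] > 1000,
}
event = {"events_per_minute": 200, "users_per_hour": 1500}
print(alerts_to_fire("falling-apart", "any", conditions, event))
# ['falling-apart:burst', 'falling-apart:reach']
print(alerts_to_fire("falling-apart", "all", conditions, event))
# ['falling-apart']
```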

There are also plenty of other variables you can build alerts and notifications around, including tags, user IDs, platforms, and many more. We’ll show you some of them in action later in this post.

2 — The problem is significant

Plenty of errors aren’t P1 and aren’t worth waking up a developer or SRE in the middle of the night. Heck, some errors aren't even worth disrupting dinner (unless you've just been served a hot plate of week-old liver and onions, in which case: you're welcome). But you still want those errors fixed quickly, and you need them surfaced even sooner so that other departments, like Support or Product Management, are prepared to answer questions while the issue is ongoing.

In the “Significant $ Problem” alert above, we’ve set the rule to send an alert to Slack and email every three hours if a customer’s user.id includes “VIP” and the URL where the event occurred contains “billing.”

After all, VIP customers with lots of money to spend shouldn’t find anything impeding them from doing so.
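The “Significant $ Problem” filter boils down to two substring checks that must both pass. A minimal sketch, assuming a hypothetical `Event` with `user_id` and `url` fields and case-sensitive matching (Sentry’s own matching rules may differ):

```python
from dataclasses import dataclass


@dataclass
class Event:
    user_id: str  # hypothetical field standing in for Sentry's user.id
    url: str


def is_significant_money_problem(event: Event) -> bool:
    """Hypothetical filter: both conditions must hold (an ALL-style match)."""
    return "VIP" in event.user_id and "billing" in event.url


print(is_significant_money_problem(Event("VIP-4821", "https://example.com/billing/checkout")))  # True
print(is_significant_money_problem(Event("user-17", "https://example.com/billing/checkout")))   # False
```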

There’s no limit to the number of alerts that can be associated with a project. So go crazy and create as many alerts like these as you need for all the variables you deem important.

3 — The error exists and continues to occur

Sentry’s automatically generated alert tells you when an error is first encountered. But maybe you’d like to know when an error has affected some minimum number of users (say, 20), or be reminded once a week about every error, however minor, so none of them slip your mind. This could apply to all errors or just specific types of errors, based on the same sorts of variables we’ve discussed above.
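A weekly reminder about every unresolved error can be sketched as a sweep over open issues, assuming times in seconds. The `Issue` dataclass and `weekly_reminders` function are hypothetical illustrations of the idea, not a Sentry feature API.

```python
from dataclasses import dataclass
from typing import List, Optional

WEEK = 7 * 24 * 60 * 60  # one week, in seconds


@dataclass
class Issue:
    title: str
    resolved: bool = False
    last_reminded: Optional[float] = None


def weekly_reminders(issues: List[Issue], now: float) -> List[str]:
    """Hypothetical: remind about every unresolved issue, however minor, once per week."""
    due = []
    for issue in issues:
        if issue.resolved:
            continue  # fixed issues drop out of the reminder
        if issue.last_reminded is None or now - issue.last_reminded >= WEEK:
            issue.last_reminded = now
            due.append(issue.title)
    return due


issues = [Issue("Minor CSS warning"), Issue("Old crash", resolved=True)]
print(weekly_reminders(issues, now=0))     # ['Minor CSS warning']
print(weekly_reminders(issues, now=3600))  # []
print(weekly_reminders(issues, now=WEEK))  # ['Minor CSS warning']
```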

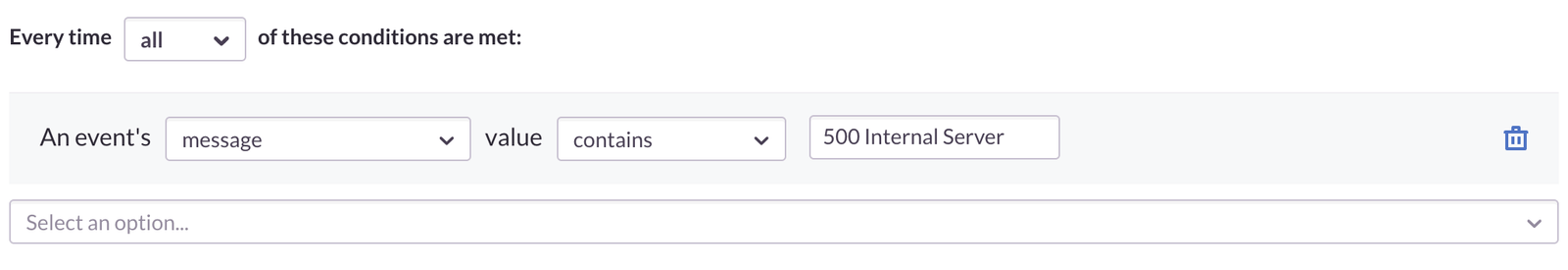

Here’s one that triggers specifically for any event that includes a 500 Server Error.

As before, you can set the alert to send a new email notification at any duration threshold you choose, from five minutes to one week.
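It can help to think of a rule like this one as plain data: a filter, an action, and an interval. The sketch below models that, assuming events are dicts with a hypothetical `status_code` key; `AlertRule` is an illustration, not Sentry’s rule format. The interval check mirrors the five-minute-to-one-week range.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical declarative rule: match one event value, then act at a fixed interval."""
    name: str
    key: str               # event field to inspect (assumed name)
    value: Any             # value that triggers the rule
    action: str            # e.g. "email"
    interval_minutes: int  # minimum time between notifications

    def __post_init__(self) -> None:
        if not 5 <= self.interval_minutes <= 7 * 24 * 60:
            raise ValueError("interval must be between five minutes and one week")

    def matches(self, event: Dict[str, Any]) -> bool:
        return event.get(self.key) == self.value


server_error_rule = AlertRule(
    name="500 Server Error",
    key="status_code",         # assumed event field name
    value=500,
    action="email",
    interval_minutes=24 * 60,  # e.g. once a day
)

events = [{"status_code": 500, "url": "/api/orders"}, {"status_code": 404, "url": "/missing"}]
print([e["url"] for e in events if server_error_rule.matches(e)])  # ['/api/orders']
```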

You can undoubtedly think of many more possibilities. As long as they fall within the realm of events Sentry captures, you can create helpful alerts for them.

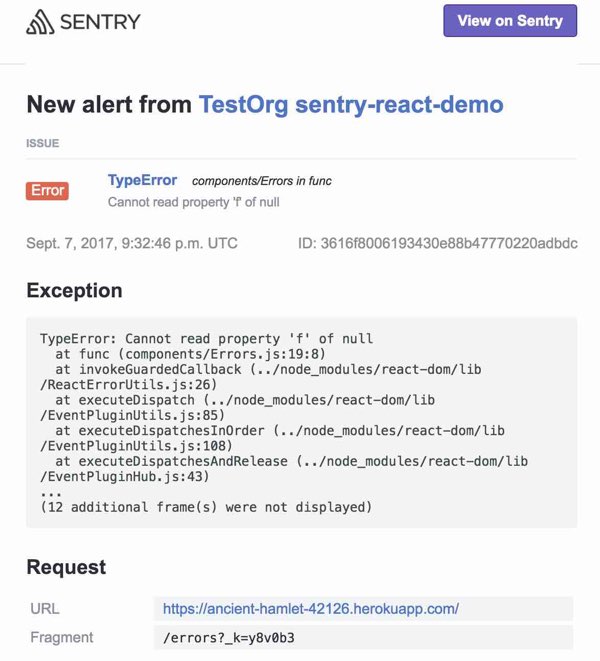

Exception from an emailed notification alert

Note that these alerts are one of two kinds of notifications we send. The other kind relates to your workflow within Sentry: issues being assigned to developers, comments on an issue, or regressions (when an issue that was marked as resolved is seen again and becomes unresolved). These workflow notifications simply let you know when something has changed in work you’re actively doing in your account, and they aren’t affected by anything you add to or change in your alert rules.
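Regressions are easiest to see as a tiny state machine: a resolved issue that receives a new event flips back to unresolved, and that transition is what a workflow notification reports. A minimal sketch; the `Issue` class and `Status` enum are hypothetical and independent of any alert rule.

```python
from enum import Enum


class Status(Enum):
    UNRESOLVED = "unresolved"
    RESOLVED = "resolved"


class Issue:
    """Hypothetical issue lifecycle showing when a regression would be flagged."""

    def __init__(self) -> None:
        self.status = Status.UNRESOLVED

    def resolve(self) -> None:
        self.status = Status.RESOLVED

    def record_event(self) -> bool:
        """Return True if this event is a regression (seen again after being resolved)."""
        if self.status is Status.RESOLVED:
            self.status = Status.UNRESOLVED  # the issue reopens
            return True
        return False


issue = Issue()
print(issue.record_event())  # False: still unresolved, nothing new to report
issue.resolve()
print(issue.record_event())  # True: regression, the issue is unresolved again
print(issue.status)          # Status.UNRESOLVED
```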

The more thought and design you put into your alert rules, the more proactively you can triage and tackle incoming errors. The better you manage this, the fewer events you’ll see cropping up and the happier your customers will be.