Introducing new issue detectors: Spot latency, overfetching, and unsafe queries early


Not everything in production is on fire. Sometimes it’s just... a little warm.

A page that loads a second too slow. An API that returns way more than anyone asked for. A query that feels totally fine until someone sends something unexpected and suddenly you’ve got an incident.

We’ve been improving how Sentry detects those kinds of problems, making it easier to spot:

Large HTTP payloads — flags oversized responses (like unpaginated queries or bloated GraphQL results) that slow down page loads and burn mobile bandwidth

Consecutive HTTP requests — flags 3+ sequential HTTP calls that could be parallelized to cut unnecessary wait time and keep users from rage-quitting

Query injection issues — surfaces unsafe input flowing into raw queries (SQL, Mongo, Prisma) before it turns into a real security problem

Available to all Sentry users, these new detectors give you more visibility into issues that don’t always trigger errors but can gradually degrade performance, weaken security, or frustrate users. That way, you can catch and fix them before they turn into bigger problems.

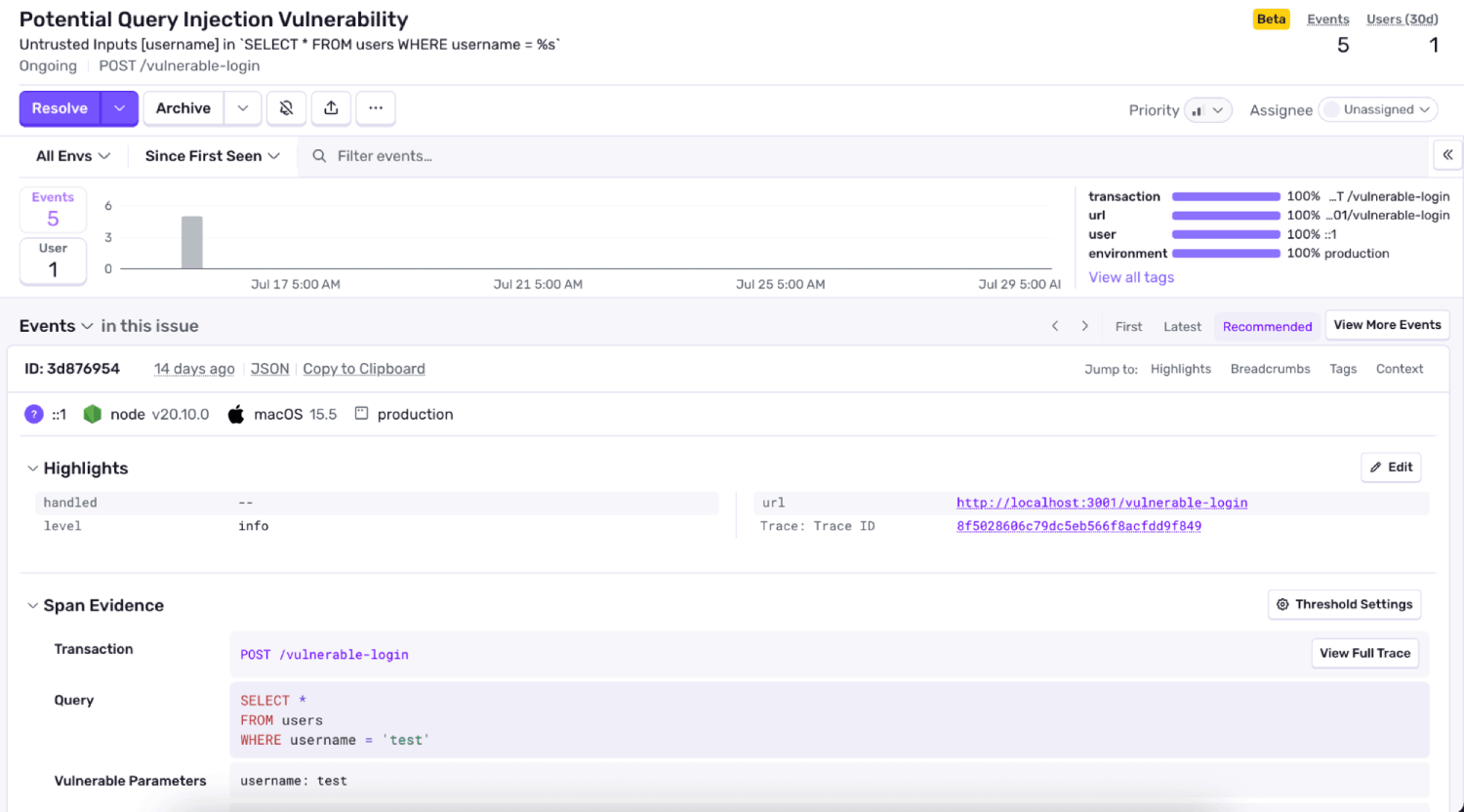

Catching unsafe query patterns before they’re exploitable

You’re wrapping up a feature, dropping a request param into a query. It works, tests pass, and you move on.

But if that input isn’t properly escaped or sanitized, it opens the door for someone to change what the query does — even if everything looks fine.

Sentry now detects these query injection issues by tracing how input flows through your code — from request to query — and raising an issue when we spot unsafe patterns like string interpolation, template literals, or raw values passed directly into queries.

So when someone grabs an id from the URL and drops it directly into a SQL string, it might look safe. But with the right input, like 1 OR 1=1, that query suddenly returns everything instead of just one row. No errors, no alerts, just a security incident that’s easy to miss and hard to trace.
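To make that concrete, here is a minimal TypeScript sketch. It assumes a Postgres-style driver that accepts a `{ text, values }` query object (the shape node-postgres accepts), and the function names are hypothetical. The first function builds SQL with a template literal, so the id becomes part of the query itself; the second keeps the SQL fixed and passes the id as a bound parameter.

```typescript
// Query object shape, modeled on what node-postgres (`pg`) accepts.
interface QueryConfig {
  text: string;
  values: unknown[];
}

// Unsafe (hypothetical helper): the raw id is interpolated into the SQL text,
// so the input can change the structure of the query.
function findUserUnsafe(idFromUrl: string): QueryConfig {
  return { text: `SELECT * FROM users WHERE id = ${idFromUrl}`, values: [] };
}

// Safer (hypothetical helper): the SQL text never changes; the id travels
// separately as a parameter, so the database treats it as a value, not as SQL.
function findUserParameterized(idFromUrl: string): QueryConfig {
  return { text: "SELECT * FROM users WHERE id = $1", values: [idFromUrl] };
}

const input = "1 OR 1=1";
console.log(findUserUnsafe(input).text); // SELECT * FROM users WHERE id = 1 OR 1=1
console.log(findUserParameterized(input).text); // SELECT * FROM users WHERE id = $1
```

With the parameterized version, an integer id column will typically make the database reject "1 OR 1=1" as an invalid value instead of returning every row.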

These inputs often come from URLs, headers, cookies, or body payloads — and even partial escaping isn’t always enough.
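Why isn’t partial escaping enough? A common half-measure is to escape single quotes, which stops input from breaking out of a quoted string but does nothing when the value lands in an unquoted numeric position. The sketch below is illustrative only: `escapeQuotes` and `parseUserId` are hypothetical helpers, and strict type validation is shown as a complement to parameterized queries, not a replacement for them.

```typescript
// Hypothetical "partial" escaping: doubles single quotes, as SQL string literals expect.
function escapeQuotes(value: string): string {
  return value.replace(/'/g, "''");
}

// This input contains no quotes, so escaping leaves it unchanged...
const raw = "1 OR 1=1";
const escaped = escapeQuotes(raw);
// ...and in an unquoted numeric position it still rewrites the WHERE clause.
const query = `SELECT * FROM users WHERE id = ${escaped}`;
console.log(query); // SELECT * FROM users WHERE id = 1 OR 1=1

// Stricter: accept only a string of digits and reject everything else.
function parseUserId(value: string): number {
  if (!/^[0-9]+$/.test(value)) {
    throw new Error(`invalid user id: ${JSON.stringify(value)}`);
  }
  return Number(value);
}

console.log(parseUserId("42")); // 42
```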

When this alert shows up, it doesn’t necessarily mean you’ve been exploited — but it does mean your code could be. And now, you’ll catch it early, before it becomes a real problem.

Find hidden latency: Large HTTP payloads and consecutive requests

Some of the hardest performance problems to catch are the ones that don’t look like problems at all.

Nothing’s failing and there are no alerts, just a page that feels slow and leaves you asking “whyyyyy?” That kind of drag creeps in gradually, especially as teams ship quickly and layer on features.

Sentry now flags two new issue types: large HTTP payload and consecutive HTTP requests.

Catching overfetching before it hits production

Let’s say you’re returning some data from an API — maybe user profiles, config, or a nested object tree. Over time, more fields get added. Pretty soon, that “small” response is hundreds of kilobytes.

You wouldn’t notice locally, but it’s eating up bandwidth and slowing down clients — especially on mobile or constrained networks.

That’s where large HTTP payload issues come in.

We raise this issue when an HTTP span has an encoded body size that exceeds a defined threshold, signaling that the response might be returning more data than necessary. These aren’t theoretical concerns; bloated payloads affect parse time, memory usage, and perceived performance.
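As a rough mental model, the check compares one number on each HTTP span against a limit. The sketch below is a simplified illustration, not Sentry’s implementation: the `SpanLike` shape, the `http.response_content_length` attribute name, and the 300 KB threshold are all assumptions chosen for the example.

```typescript
// Minimal span shape for this sketch (assumed); real spans carry many more fields.
interface SpanLike {
  op: string;
  description: string;
  data: Record<string, number | undefined>;
}

// Assumed threshold for illustration only: 300 KB.
const LARGE_PAYLOAD_THRESHOLD_BYTES = 300 * 1024;

// Flags HTTP client spans whose encoded (on-the-wire, possibly compressed)
// body size exceeds the threshold. The attribute name is an assumption.
function isLargeHttpPayload(
  span: SpanLike,
  thresholdBytes: number = LARGE_PAYLOAD_THRESHOLD_BYTES,
): boolean {
  if (span.op !== "http.client") return false;
  const encodedSize = span.data["http.response_content_length"];
  return encodedSize !== undefined && encodedSize > thresholdBytes;
}

const spans: SpanLike[] = [
  { op: "http.client", description: "GET /api/config", data: { "http.response_content_length": 4_096 } },
  { op: "http.client", description: "GET /api/users", data: { "http.response_content_length": 850_000 } },
  { op: "db", description: "SELECT ...", data: {} },
];

// Wrap the call so filter's index argument isn't passed as the threshold.
for (const span of spans.filter((s) => isLargeHttpPayload(s))) {
  console.log(`Large payload: ${span.description}`); // Large payload: GET /api/users
}
```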

Say your checkout handler sends the entire cart object, including product details, pricing rules, and form state, in the request body. That payload is much larger than it needs to be, and Sentry will now flag it. You’ll get full context, including the trace, so you can trim the request to only the data your backend actually needs to validate the order.
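One way to act on an issue like the checkout example is to send identifiers and quantities instead of the whole object, and let the backend look up prices itself. Here is a minimal sketch; the `Cart` and `CheckoutRequest` shapes and the `toCheckoutRequest` and `jsonBytes` helpers are hypothetical. Byte counts come from `TextEncoder`, which measures the UTF-8 JSON body before any compression.

```typescript
// Hypothetical client-side cart, carrying everything the UI needs to render.
interface CartItem {
  productId: string;
  quantity: number;
  title: string;
  description: string;
  imageUrls: string[];
  unitPrice: number;
}

interface Cart {
  id: string;
  items: CartItem[];
  pricingRules: Record<string, unknown>;
  formState: Record<string, unknown>;
}

// What the backend needs to validate the order: which products, and how many.
interface CheckoutRequest {
  cartId: string;
  items: { productId: string; quantity: number }[];
}

function toCheckoutRequest(cart: Cart): CheckoutRequest {
  return {
    cartId: cart.id,
    items: cart.items.map(({ productId, quantity }) => ({ productId, quantity })),
  };
}

// Size of a JSON body in bytes (UTF-8, before compression).
function jsonBytes(value: unknown): number {
  return new TextEncoder().encode(JSON.stringify(value)).length;
}

const cart: Cart = {
  id: "cart_123",
  items: Array.from({ length: 20 }, (_, i) => ({
    productId: `sku_${i}`,
    quantity: 1,
    title: `Product ${i}`,
    description: "A long marketing description. ".repeat(50),
    imageUrls: [`https://example.com/img/${i}-1.jpg`, `https://example.com/img/${i}-2.jpg`],
    unitPrice: 19.99,
  })),
  pricingRules: { bulkDiscount: { minQuantity: 10, percentOff: 5 } },
  formState: { step: "payment", touched: ["email", "address"] },
};

console.log("full cart bytes:", jsonBytes(cart));
console.log("checkout request bytes:", jsonBytes(toCheckoutRequest(cart)));
```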

By flagging these early, we help you catch overfetching before it becomes real user-facing drag.

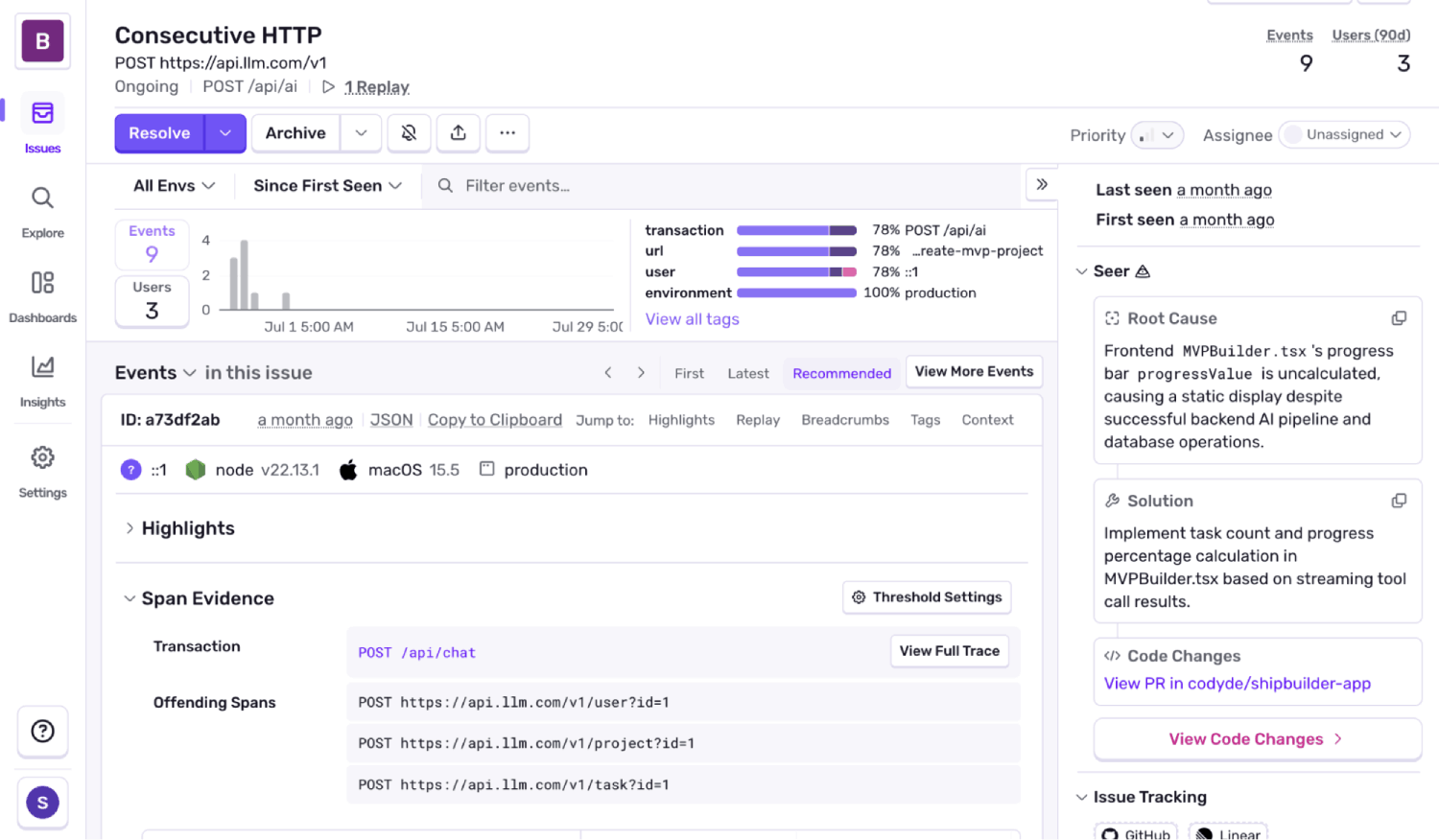

Spotting latency from sequential HTTP calls

Another sneaky pattern is request chaining: each call waits for the previous one to finish before it starts. Maybe it’s fetching config, then loading data, then sending analytics. It works, but it runs sequentially even though it doesn’t need to.

Consecutive HTTP request issues are raised when at least three HTTP calls occur back-to-back and parallelizing them could have saved at least 2,000 ms of latency. That’s real time lost to requests waiting on each other unnecessarily.

We detect these by analyzing traces and measuring gaps between outbound HTTP spans. It’s especially common in service-to-service call chains — places where sequencing isn’t intentional, just incidental.
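To make the rule concrete, here is a simplified sketch of how a detector could find such chains. It is not Sentry’s implementation: the `HttpSpan` shape is hypothetical, “back-to-back” is approximated as each span starting at or after the previous one ends, and the time saved is estimated as the chain’s total duration minus its longest call, since calls run in parallel would take roughly as long as the slowest one.

```typescript
// Hypothetical minimal span: start and end timestamps in milliseconds.
interface HttpSpan {
  description: string;
  startMs: number;
  endMs: number;
}

const MIN_SPANS = 3; // at least three calls in a row
const MIN_TIME_SAVED_MS = 2000; // at least 2,000 ms could be saved

// Splits spans into runs where each span starts only after the previous one ended.
function sequentialRuns(spans: HttpSpan[]): HttpSpan[][] {
  const sorted = [...spans].sort((a, b) => a.startMs - b.startMs);
  const runs: HttpSpan[][] = [];
  let current: HttpSpan[] = [];
  for (const span of sorted) {
    const prev = current[current.length - 1];
    if (prev && span.startMs < prev.endMs) {
      // Overlaps the previous call, so these already run concurrently: start a new run.
      runs.push(current);
      current = [];
    }
    current.push(span);
  }
  if (current.length > 0) runs.push(current);
  return runs;
}

// In parallel, the run would take about as long as its slowest call.
function estimatedTimeSavedMs(run: HttpSpan[]): number {
  const durations = run.map((s) => s.endMs - s.startMs);
  const total = durations.reduce((sum, d) => sum + d, 0);
  return total - Math.max(...durations);
}

function findConsecutiveHttpIssues(spans: HttpSpan[]): HttpSpan[][] {
  return sequentialRuns(spans).filter(
    (run) => run.length >= MIN_SPANS && estimatedTimeSavedMs(run) >= MIN_TIME_SAVED_MS,
  );
}

const trace: HttpSpan[] = [
  { description: "GET /config", startMs: 0, endMs: 1000 },
  { description: "GET /data", startMs: 1010, endMs: 2210 },
  { description: "POST /analytics", startMs: 2220, endMs: 3720 },
];
console.log(estimatedTimeSavedMs(trace)); // 2200
console.log(findConsecutiveHttpIssues(trace).length); // 1
```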

Once flagged, the fix is usually simple: fire requests in parallel or restructure the flow to remove the dependency.

For example, say your app’s onboarding flow triggers a createUser request, then a createProject request, then a separate createTask request for each task, one after another. The tasks are independent of each other, but the calls are chained, adding avoidable latency to the response. Sentry flags this as a consecutive HTTP request issue, with trace-level detail so you can parallelize the flow and reduce wait time.
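Here is a minimal sketch of that fix. `createUser`, `createProject`, and `createTask` are hypothetical stand-ins that simulate a network call with a timer. The project genuinely depends on the user, so those two calls stay sequential; the task calls only depend on the project, so `Promise.all` can start them together. The in-flight counter shows the difference in concurrency.

```typescript
// Hypothetical API client: each call simulates ~100 ms of network latency.
let inFlight = 0;
let maxInFlight = 0;

async function fakeRequest<T>(result: T): Promise<T> {
  inFlight++;
  maxInFlight = Math.max(maxInFlight, inFlight);
  await new Promise((resolve) => setTimeout(resolve, 100));
  inFlight--;
  return result;
}

const createUser = (email: string) => fakeRequest({ userId: `user:${email}` });
const createProject = (userId: string) => fakeRequest({ projectId: `project:${userId}` });
const createTask = (projectId: string, title: string) =>
  fakeRequest({ taskId: `${projectId}/${title}` });

const titles = ["Install SDK", "Send first event", "Invite team"];

// Before: every task waits for the previous one, even though they are independent.
async function onboardSequential(email: string): Promise<string[]> {
  const { userId } = await createUser(email);
  const { projectId } = await createProject(userId);
  const taskIds: string[] = [];
  for (const title of titles) {
    const { taskId } = await createTask(projectId, title);
    taskIds.push(taskId);
  }
  return taskIds;
}

// After: keep the real dependency (user, then project) in order,
// then start all task requests at once and wait for them together.
async function onboardParallel(email: string): Promise<string[]> {
  const { userId } = await createUser(email);
  const { projectId } = await createProject(userId);
  const tasks = await Promise.all(titles.map((title) => createTask(projectId, title)));
  return tasks.map((t) => t.taskId);
}
```

With three tasks at roughly 100 ms each, the parallel version spends about 100 ms on tasks instead of about 300 ms, and `Promise.all` still returns the results in the original order.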

These issues aren’t noisy — and that’s by design. But when they show up, they highlight real opportunities to reduce load time, optimize network usage, and make your app feel faster — without refactoring your core logic.

How to get started (tl;dr you don’t really need to do anything)

To start seeing large HTTP payload, consecutive HTTP request, and query injection issues:

Instrument tracing in your application using Sentry’s SDK. Make sure HTTP spans and database queries are being captured — either through automatic instrumentation or manual tracing.
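For a Node.js service, the setup can be as small as the snippet below. It assumes a recent (v8 or later) `@sentry/node`, where outgoing HTTP calls are instrumented automatically once tracing is enabled. The DSN is read from an environment variable here, a sample rate of 1.0 (trace everything) is only a starting point for testing, and `tracedQuery` is a hypothetical wrapper for a database call your SDK setup doesn’t cover automatically.

```typescript
// instrument.ts: load this before any other module so auto-instrumentation can hook in.
import * as Sentry from "@sentry/node";

Sentry.init({
  dsn: process.env.SENTRY_DSN,
  // Capture a trace for every transaction; lower this for high-traffic production apps.
  tracesSampleRate: 1.0,
});

// Manual tracing: wraps any async DB call in a span so it shows up in the trace.
// `tracedQuery` and `runQuery` are hypothetical names standing in for your own code.
export function tracedQuery<T>(sql: string, runQuery: () => Promise<T>): Promise<T> {
  return Sentry.startSpan({ name: sql, op: "db.query" }, () => runQuery());
}
```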

Once you’re sending spans, go to the Issues tab in your Sentry project. Use the issue.type search filter with the issue type you’re looking for, such as:

issue.type:"performance_consecutive_http"

issue.type:"performance_large_http_payload"

If you’re already using tracing, there’s nothing new to set up — these issue types are already available and will appear when triggered.

Want to stay up-to-date on the latest from Sentry? Check out our changelog for a running list of all product and feature releases. You can also drop us a line on GitHub, Twitter, or Discord. And if you’re new to Sentry, you can try it for free today or request a demo to get started.