You built the MCP server. Now track every client, tool, and request with Sentry.

TL;DR - Starting today, you can instrument most MCP servers built with a server-side JavaScript SDK by adding one line of instrumentation code to your MCP SDK implementation.

wrapMcpServerWithSentry(McpServer)

With this in place, you’ll be able to see details like protocol usage, client usage, traffic, tool usage, and performance across your MCP implementation.

When we launched our own MCP (Model Context Protocol) server earlier this year, the goal was simple: make it easy for AI agents to use Sentry’s context to debug issues in our users’ applications, in real time.

Building against an emerging technology whose standards are a moving target isn’t easy. Even our Co-Founder David Cramer has shared his famous hot takes about it. In the past several months we’ve watched MCP standardize on Streamable HTTP, develop better OAuth support, introduce new specs, and make many other “adjustments” (to put it lightly) along the way.

We’ve built our MCP server on the “bleeding edge”, with the goal of making adoption easy for users and supporting the complex use cases our user base runs into. Living on the edge with a remotely hosted, stateful MCP server that supports OAuth, plus capabilities like Seer and natural language search, has brought its own monitoring and observability challenges.

On top of all that, because of Sentry’s scale, the usage of our MCP server has grown very fast — 50 million requests per month across thousands of users (and climbing).

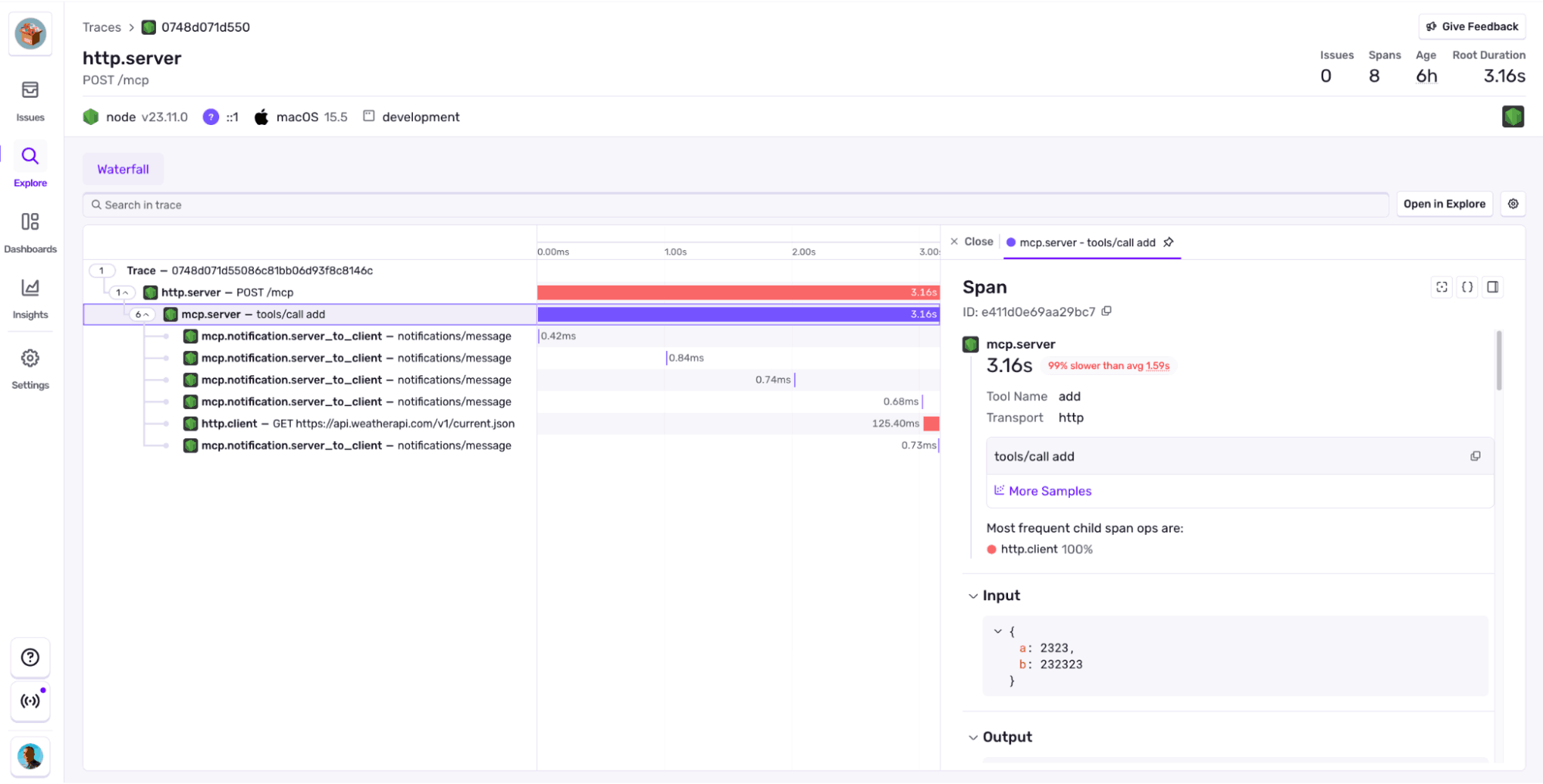

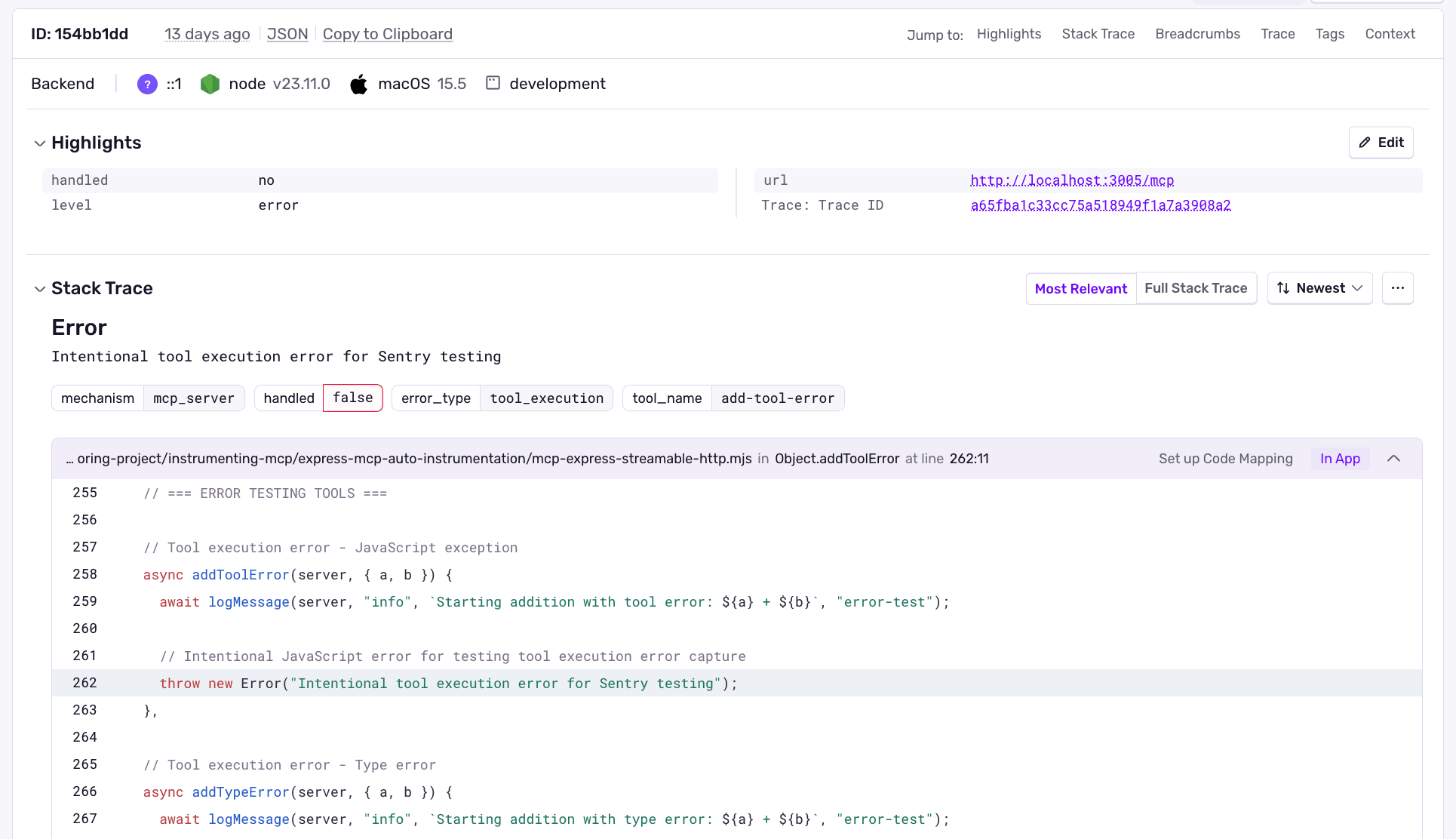

While building the Sentry MCP server, we started to get a good sense of where the gaps in MCP server monitoring were. It’s challenging to capture details of errors or of the random sharp edges that come up within MCP. Performance issues in tool calls are hard to diagnose, and even inspecting the specific inputs and outputs of tool calls is immature in MCP monitoring today.

On top of that, we experienced the normal “fun” of platform outages in upstream systems. In the most challenging case, we started receiving random reports of users timing out: requests ended abruptly with no results and no errors. From our side, nothing looked wrong, and without visibility all we had to go on was the users who reached out to us. We didn’t even have a way to know how many users were impacted or which MCP clients the requests were coming from.

As we built the MCP server, we started building our own tooling for monitoring it. During this time, MCP became “a whole thing”, and it was clear that the tooling we built for our own use would be pretty useful for the big new AI tool that everyone was building against.

And so, we built MCP server monitoring so you can get visibility into all the sharp edges that your MCP server has, who’s using it, how it’s working (or not), and get alerted when things break — without having to wait for your users to tell you.

MCP server monitoring is in beta and available to anyone using server-side JavaScript SDKs. All you need is a Sentry account and a few lines to start sending spans.
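For orientation, a minimal setup sketch might look like the following. It assumes the `@sentry/node` and `@modelcontextprotocol/sdk` packages are installed; the DSN, server name, and version are placeholders, and the MCP docs remain the reference for the exact options.

```javascript
import * as Sentry from "@sentry/node";
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";

// Placeholder DSN: replace with the DSN from your own Sentry project.
Sentry.init({
  dsn: "https://examplePublicKey@o0.ingest.sentry.io/0",
  // Sample every trace while testing; lower this in production.
  tracesSampleRate: 1.0,
});

// Wrap the server instance so MCP requests are recorded as spans.
const server = Sentry.wrapMcpServerWithSentry(
  new McpServer({ name: "my-mcp-server", version: "1.0.0" })
);
```

Tools, resources, and transports are then registered on `server` as usual.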

What is Sentry's MCP server monitoring?

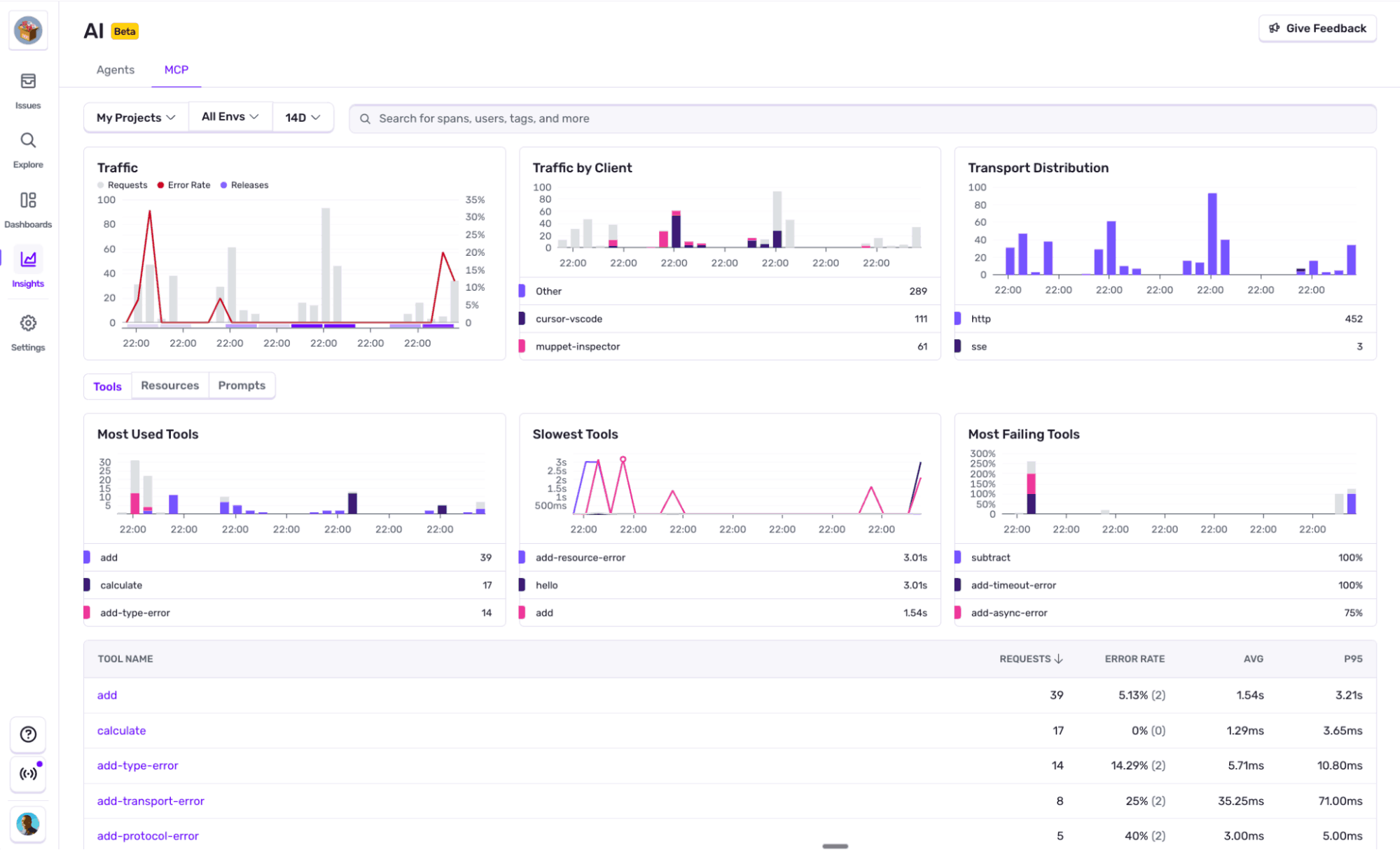

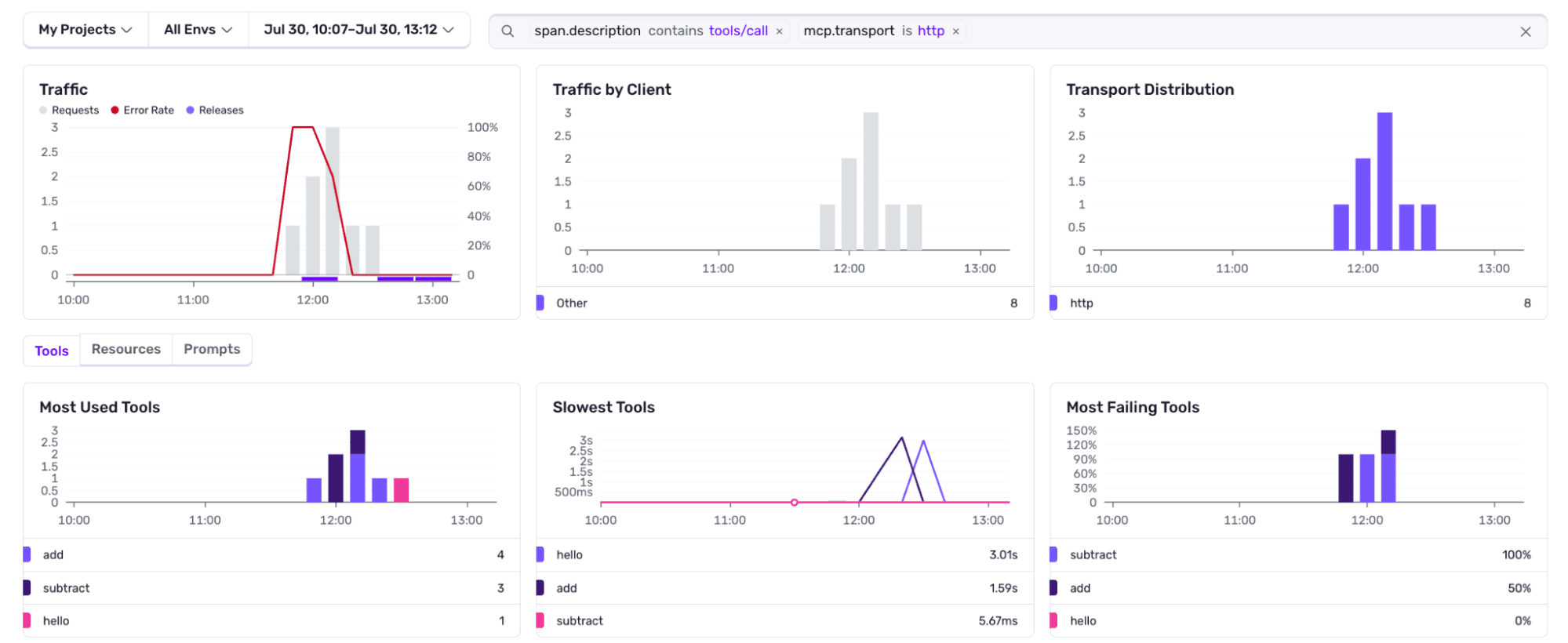

Sentry’s MCP server monitoring gives you protocol-aware visibility into how your MCP server is actually being used—broken down by transport (like SSE or HTTP), by performance, by tools, resources, and more.

You can quickly drop in and see which MCP clients are calling which tools, how those requests are performing, and most importantly, where things are going wrong.

We started where most people would: with OpenTelemetry. We were already well on our way to building agent monitoring, which has a lot of parallels with MCP server monitoring. The OpenTelemetry MCP semantic conventions are still in draft, but they gave us a solid foundation to build on and extend with the visibility we needed. From there, we layered our own opinions, shaped by building and running our MCP server in the real world.

So now back to where this post started. Our MCP server monitoring is instrumented in one line: just wrap your McpServer from Anthropic’s MCP SDK with:

wrapMcpServerWithSentry(McpServer)

Once set up in your MCP server, you’ll have:

A clear overview of the different aspects of your MCP server’s performance, with built-in paths to explore more: client segmentation, transport distribution, most errored tools, most read resources, slowest tools, and more.

Tracing down to each of the JSON-RPC requests, including tool call arguments and results.

Errors. The MCP protocol handles errors inside the server to avoid throwing them, and just responds with a “there was something wrong” kind of message. That’s good, but if it was an error, we want it logged and linked to the specific part of the server that caused it.

And because we support OpenTelemetry, if you’re using another MCP server library and it follows the OpenTelemetry semantic conventions for MCP, you’ll get all of this out of the box.
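To see why errors need this special handling, consider a sketch of what a wrapper around a tool handler has to do. This is an illustration only, not Sentry's implementation: `withErrorCapture`, `captured`, and the `divide` tool are hypothetical names, and the `isError` result shape follows the MCP convention for tool errors.

```javascript
// Hypothetical in-memory sink standing in for an error-reporting backend.
const captured = [];

// Wrap a tool handler: record any thrown error with its tool name,
// then return an MCP-style error result instead of rethrowing.
function withErrorCapture(toolName, handler) {
  return async (args) => {
    try {
      return await handler(args);
    } catch (error) {
      captured.push({ toolName, message: error.message });
      return {
        isError: true,
        content: [{ type: "text", text: "Something went wrong." }],
      };
    }
  };
}

// Hypothetical tool that fails on a zero divisor.
const divide = withErrorCapture("divide", async ({ a, b }) => {
  if (b === 0) throw new Error("division by zero");
  return { content: [{ type: "text", text: String(a / b) }] };
});
```

The client still receives a polite error result, but the original exception and the tool that raised it are no longer lost.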

What are users actually using your MCP server for?

You've built an MCP server. Tools are working, resources are flowing, and a few clients are connecting. But every new tool you vibe code into existence has you wondering if anyone even uses what you built last week.

Without proper instrumentation, it’s just guessing.

Who's calling your server and what are they actually using?

MCP servers can handle thousands of requests per minute across multiple transports (stdio, HTTP, SSE). Logs show method names, but no context. You see tools/call everywhere, but which tools? From which clients? Over which transport?

2024-07-30T15:23:42Z tools/call {...}
2024-07-30T15:23:43Z resources/read {...}
2024-07-30T15:23:44Z tools/call {...}

Without MCP instrumentation, you're reduced to parsing logs and guessing:

Is Claude Code using your calculator tool more than VS Code?

Are HTTP clients using the server differently from STDIO clients?

Which resources are actually being read?

With our MCP monitoring, you get clear breakdowns:

{
  "method": "tools/call",
  "tool_name": "file_search",
  "client": "cursor",
  "transport": "stdio",
  "count": 3847,
  "avg_duration_ms": 156,
  "error_rate": 0.001
}

Now you know: Cursor is heavily using file_search over STDIO, and you can even see its reliability stats.
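A breakdown like this is essentially a group-by over per-request records. As a rough sketch (the record shape and the `summarize` helper are hypothetical, not Sentry's span schema), the aggregation could be computed like this:

```javascript
// Hypothetical per-request records; field names are illustrative only.
const requests = [
  { tool: "file_search", client: "cursor", transport: "stdio", durationMs: 100, ok: true },
  { tool: "file_search", client: "cursor", transport: "stdio", durationMs: 200, ok: false },
  { tool: "calculator", client: "vscode", transport: "http", durationMs: 50, ok: true },
];

// Group records by tool, client, and transport, then derive
// count, average duration, and error rate for each group.
function summarize(records) {
  const groups = new Map();
  for (const r of records) {
    const key = `${r.tool}|${r.client}|${r.transport}`;
    const g = groups.get(key) ?? {
      tool_name: r.tool, client: r.client, transport: r.transport,
      count: 0, totalMs: 0, errors: 0,
    };
    g.count += 1;
    g.totalMs += r.durationMs;
    if (!r.ok) g.errors += 1;
    groups.set(key, g);
  }
  // Drop the running totals and expose the derived metrics instead.
  return [...groups.values()].map(({ totalMs, errors, ...rest }) => ({
    ...rest,
    avg_duration_ms: totalMs / rest.count,
    error_rate: errors / rest.count,
  }));
}

const summary = summarize(requests);
```

Each entry in `summary` has the same fields as the breakdown above, apart from `method`.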

Catch broken integrations before clients abandon your server

You ship MCP server v1.2.0 with improved resource caching. Everything looks good in local testing. No errors in CI. But three days later, you notice something strange in your hand-crafted request counting:

Volume for resources/read dropped 60% since the deploy.

Without proper instrumentation, you're left wondering:

Did clients stop using resources entirely?

Is there a silent failure mode?

Are clients falling back to different endpoints?

Is this related to the new caching logic?

Your MCP instrumentation catches this immediately and you get a Sentry e-mail saying:

CacheKeyError: undefined cache namespace in method “resources/read”.

You go to the Sentry UI and see that the error only occurs on the Streamable HTTP transport: the new caching broke resource reading over HTTP, while STDIO and SSE were unaffected. You fix the cache namespace handling, and resources/read volume returns to normal before clients give up and switch tools.
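The 60% drop in this scenario is the kind of signal that can be expressed as a comparison of per-method request volume before and after a deploy. The sketch below is a hypothetical illustration (the counts, the `findVolumeDrops` helper, and the 50% threshold are all assumptions), not a description of how Sentry alerts work:

```javascript
// Hypothetical request counts per MCP method for equal-length windows.
const beforeDeploy = { "tools/call": 1000, "resources/read": 500 };
const afterDeploy = { "tools/call": 980, "resources/read": 200 };

// Return methods whose volume fell by more than `threshold` (a fraction).
function findVolumeDrops(before, after, threshold = 0.5) {
  const drops = [];
  for (const [method, previous] of Object.entries(before)) {
    if (previous === 0) continue; // no baseline to compare against
    const current = after[method] ?? 0;
    const drop = (previous - current) / previous;
    if (drop > threshold) drops.push({ method, drop });
  }
  return drops;
}

const drops = findVolumeDrops(beforeDeploy, afterDeploy);
```

A volume check only says that something changed; it takes the error and its transport attribute to explain why.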

Stop bot abuse before it burns your server

MCP servers often start without authentication, which is perfect for early testing and dangerous in production. One morning you wake up to doubled CPU usage and your logs flooded with malformed requests.

Infrastructure metrics show "everything normal". CPU is high, but there are no 5XX errors. Your server is technically responding to everything, even the garbage.

2024-07-30T08:15:32Z tools/call {"tool": null, "arguments": "invalid"}
2024-07-30T08:15:33Z tools/call {"tool": null, "arguments": "invalid"}
2024-07-30T08:15:34Z tools/call {"tool": null, "arguments": "invalid"}

Without request attribution, you can't tell legitimate traffic from abuse. With MCP monitoring, the problem becomes obvious:

An unknown client on the HTTP transport is making requests to your subtract tool with a 100% failure rate. With that information, you can decide which mitigation strategies to apply.
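One possible mitigation is to reject malformed requests before they reach a tool. The following is a hypothetical sketch, not part of Sentry's SDK: `isValidToolCall` is an invented helper, and it assumes `tools/call` params carry a string `name` and an optional `arguments` object, as in the MCP specification.

```javascript
// Check that tools/call params have a non-empty string tool name and,
// if present, an arguments object (not null, an array, or a string).
function isValidToolCall(params) {
  if (params === null || typeof params !== "object") return false;
  if (typeof params.name !== "string" || params.name.length === 0) return false;
  const args = params.arguments;
  if (args === undefined) return true;
  return typeof args === "object" && args !== null && !Array.isArray(args);
}
```

A server could call this before dispatching and return an error early, which keeps the garbage requests cheap while the failure rate still shows up in monitoring.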

Know what to build next, before you waste weeks on unused features

You have 4 feature requests:

1. Add webhook support for notifications

2. Improve file_search tool performance

3. Add streaming for large responses

4. Implement prompt templates

Without usage data, you're guessing which will have the most impact. You might spend two weeks building webhooks that nobody uses, while file_search performance issues frustrate your most active clients.

Our MCP monitoring charts take out the guesswork: they show that file_search is the most used tool, so improving its performance is the work most likely to pay off.

Where are we taking MCP server monitoring next?

MCP is a spec and service that is continuing to grow. New features and functionality are being added constantly, and on top of that, clients are becoming more sophisticated. It wasn’t that long ago that Cursor released 1.0 and standardized Streamable HTTP and OAuth support within it.

On top of that, people are running these servers in new and different ways. Cloudflare continues to add MCP and agent support functionality across its Workers platform, Vercel has released tooling to run alongside Next.js, and it’s getting easier for people to hand roll their own MCP servers from scratch.

In the coming months, we’ll continue to add more core monitoring functionality to MCP server monitoring, such as trace propagation, which will allow us to carry trace identifiers forward into services that are downstream from the MCP server and give better visibility into performance.
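As a general illustration of what carrying trace identifiers downstream involves, the sketch below builds a `traceparent` header in the W3C Trace Context format. It is not a preview of how Sentry will implement this feature; `buildTraceparent` is a hypothetical helper and the ids are sample values.

```javascript
// Build a W3C Trace Context `traceparent` header value:
// version "00", 32-hex trace id, 16-hex parent span id, 2-hex flags.
function buildTraceparent(traceId, spanId, sampled) {
  if (!/^[0-9a-f]{32}$/.test(traceId)) throw new Error("invalid trace id");
  if (!/^[0-9a-f]{16}$/.test(spanId)) throw new Error("invalid span id");
  return `00-${traceId}-${spanId}-${sampled ? "01" : "00"}`;
}

// Sample ids; a tracing SDK would normally supply these.
const headers = {
  traceparent: buildTraceparent(
    "4bf92f3577b34da6a3ce929d0e0e4736",
    "00f067aa0ba902b7",
    true
  ),
};
```

A downstream service that reads this header can attach its own spans to the same trace, which is what connects an MCP tool call to the work it triggers elsewhere.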

We also want to expand platform support, for example, for Cloudflare’s McpAgent.

Finally, we’ll be looking at how to extend language support for MCP servers to include Python.

As always, we keep an eye on what's happening in the community and are often ready to pivot into the newer areas users are jumping into. MCP is going to be no exception to this.

Ready to try it?

To get started, follow the MCP docs to wrap your server and then head to Insights > AI > MCP. No extra config, no manual dashboards, no waiting for your users to tell you something’s broken.

Don’t have a Sentry account? Start for free