Improve Performance in Your iOS Applications - Part 4

Several factors, including speed, performance, and UI interactions, are critical to the success of your iOS application. Users despise slow and unresponsive apps, so it's essential to test thoroughly before releasing to the public.

The techniques in this article are intended to test the performance of your iOS application and may require basic knowledge of testing in iOS. However, this guide also walks you through the fundamentals of testing so you can get started writing your first test case.

Before we dive in, here's a quick recap of the first three articles in this series.

The first article in the series showcases performance tips to help you improve the compile time of your iOS applications, build faster applications, and focus on performance improvements in the build system. The second article covers tips that improve UI interactions, media playback, animations, and visuals, delivering a smooth and seamless experience. In Part 3, I discuss techniques to optimize iOS application performance through your existing codebase, a modularized architecture, reusable components, and more.

Performance Testing Basics

Developers and testers review and analyze app performance in a virtual environment to forecast how users will interact with a product once it is released to the public. This involves:

Testing app performance during periods of high traffic.

Checking whether poor internet connectivity hurts the app's stability.

Verifying compatibility with a broad variety of mobile devices.

Analyzing the app's overall performance.

Accurately spotting and reporting bugs.

Evaluating the app's capacity to handle additional demand.

When the number of simultaneous users or activities goes up, developers may need to examine the performance of the backend server and network requests and make changes to keep the overall user experience smooth. Under heavy traffic, it helps to know how quickly the application responds and how the whole system behaves. Load testing gives you an idea of how long it takes for data to be loaded from the backend servers into your iOS app. If your application takes more than a couple of seconds to display content, you'll want to investigate ways to improve it.
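The "couple of seconds" guideline is easier to check with numbers in hand. The following is a minimal sketch. It assumes you have already collected response times, in seconds, from a load test run. It summarizes the samples and compares the 95th percentile against a 2-second budget. The `LoadTestSummary` type, the nearest-rank percentile method, and the 2-second default are illustrative assumptions, not part of any specific tool.

```swift
import Foundation

// Hypothetical summary of response times (in seconds) collected during a load test.
struct LoadTestSummary {
    let mean: Double
    let p95: Double
    let slowest: Double

    init(responseTimes: [Double]) {
        precondition(!responseTimes.isEmpty, "Need at least one sample")
        let sorted = responseTimes.sorted()
        mean = sorted.reduce(0, +) / Double(sorted.count)
        // Nearest-rank percentile: the smallest sample such that at least
        // 95% of all samples are less than or equal to it.
        let rank = Int((0.95 * Double(sorted.count)).rounded(.up))
        p95 = sorted[rank - 1]
        slowest = sorted[sorted.count - 1]
    }

    // Assumed budget: content should appear within about two seconds.
    func meetsBudget(seconds budget: Double = 2.0) -> Bool {
        p95 <= budget
    }
}
```

Consider ten samples in which one request took 3.5 seconds. They produce a p95 of 3.5, so `meetsBudget()` returns `false`. With so few samples, a single slow response dominates the percentile, which is one reason load tests collect many requests.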

Stress testing lets you evaluate your application's durability, including data processing, response speed, and functional behavior, under adverse conditions such as unresponsive servers. You can use this data to detect and fix application bottlenecks, improving app performance. Spike testing, like stress testing, sharply increases demand to see whether an application slows down or, in some cases, stops responding and crashes. Such failures can come from large amounts of data being handled improperly. They can also come from server crashes caused by a surge in network requests when user activity spikes. Spike testing helps you determine the server's load capacity, along with how your application behaves in critical scenarios. Apps that experience frequent spikes in user activity should be spike tested.
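On the client side, stress testing often means checking how the app behaves when a server stops responding. The following is a minimal sketch. It assumes a hypothetical `DataFetching` protocol that the networking code sits behind. A stub that never calls back stands in for an unresponsive server, and a hypothetical `FeedLoader` must give up after a timeout instead of hanging. Real apps would typically rely on `URLSession`'s own timeouts; the semaphore here only keeps the example small and synchronous.

```swift
import Foundation
import Dispatch

// Hypothetical abstraction over the network layer so tests can swap in stubs.
protocol DataFetching {
    func fetch(completion: @escaping (Data) -> Void)
}

// Stub simulating an unresponsive server: it never calls the completion.
struct UnresponsiveServer: DataFetching {
    func fetch(completion: @escaping (Data) -> Void) {}
}

// Stub simulating a healthy server: it answers immediately with a fixed payload.
struct HealthyServer: DataFetching {
    let payload: Data
    func fetch(completion: @escaping (Data) -> Void) { completion(payload) }
}

enum LoadResult: Equatable {
    case loaded(Data)
    case timedOut
}

struct FeedLoader {
    let fetcher: DataFetching
    let timeout: TimeInterval

    // Blocks the calling thread for at most `timeout` seconds.
    // Acceptable in a test; never do this on an app's main thread.
    func load() -> LoadResult {
        let semaphore = DispatchSemaphore(value: 0)
        var received: Data?
        fetcher.fetch { data in
            received = data
            semaphore.signal()
        }
        guard semaphore.wait(timeout: .now() + timeout) == .success,
              let data = received else {
            return .timedOut
        }
        return .loaded(data)
    }
}
```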

Volume testing evaluates your application's total capacity: its ability to manage large amounts of data without slowing down or losing data. Capacity testing, on the other hand, identifies the point at which app performance drops and users start to face difficulties. This helps keep your app dependable as its user base grows.
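At the client level, a volume check can be as simple as pushing an unusually large batch of data through the code path your app uses and confirming that nothing is lost. The following is a sketch. It assumes a hypothetical `Photo` record type, round-trips a batch through `JSONEncoder` and `JSONDecoder`, and times only the decode. It complements, rather than replaces, volume testing of the backend.

```swift
import Foundation
import Dispatch

// Hypothetical record type standing in for data your app downloads in bulk.
struct Photo: Codable, Equatable {
    let id: Int
    let title: String
}

// Encodes `count` records, decodes them back, and reports whether every record
// survived intact, plus the decode time in seconds. A volume test would use
// counts well above what production users typically load.
func runVolumeCheck(count: Int) throws -> (intact: Bool, seconds: Double) {
    let original = (0..<count).map { Photo(id: $0, title: "Photo \($0)") }
    let payload = try JSONEncoder().encode(original)

    let start = DispatchTime.now()
    let decoded = try JSONDecoder().decode([Photo].self, from: payload)
    let elapsedNanos = DispatchTime.now().uptimeNanoseconds - start.uptimeNanoseconds

    return (decoded == original, Double(elapsedNanos) / 1_000_000_000)
}
```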

Scalability testing analyzes response time, requests per second, and transaction processing speed to determine the backend server's ability to handle increasing workloads. It doesn't directly test your iOS application. However, it can identify issues with the backend servers that support your app, which helps ensure a stable data flow and a good overall user experience. Endurance testing measures an app's long-term performance: developers and testers simulate heavy traffic over an extended period to verify that the app stays stable.
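One simple way to read endurance results is to compare the early iterations of a long run with the late ones. This assumes you record the duration of the same operation on every iteration. The function name, the window size, and the 20% tolerance below are illustrative choices, not a standard.

```swift
// Compares the average duration of the first and last `windowSize` iterations
// of a long-running (endurance) run. If the late window is slower than the
// early one by more than `tolerance` (0.2 = 20%), the app may be degrading
// over time, for example because of a leak or an ever-growing cache.
func showsDegradation(durations: [Double], windowSize: Int, tolerance: Double = 0.2) -> Bool {
    precondition(windowSize > 0 && durations.count >= 2 * windowSize,
                 "Need two full windows of samples")
    func average(_ slice: ArraySlice<Double>) -> Double {
        slice.reduce(0, +) / Double(slice.count)
    }
    let early = average(durations.prefix(windowSize))
    let late = average(durations.suffix(windowSize))
    return late > early * (1 + tolerance)
}
```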

Basics of Testing in iOS

The first step is to understand how testing works in iOS and how to get started testing your iOS applications. When writing test cases, it helps to distinguish between different types of testing:

Unit testing: Test a single function that doesn't rely on external dependencies, such as validating functional logic or email addresses.

UI testing: Replicate user behavior and actions, such as navigating through the application.

Integration testing: Test a function together with its dependencies, such as operations on local files or database calls.

Performance testing: Test how the app performs during actual use, such as loading a very large list of image results.
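The following is a sketch of an integration check, which illustrates how it differs from a unit test. It assumes a hypothetical `NotesStore` type that persists notes as JSON files. Rather than mocking the file system, the check exercises the real one inside a fresh temporary directory, so separate runs don't interfere with each other. In an actual project, the body of `runNotesStoreIntegrationCheck()` would live in an `XCTestCase` method with `XCTAssert` calls.

```swift
import Foundation

// Hypothetical type used for illustration: persists notes as JSON files in a directory.
struct NotesStore {
    let directory: URL

    func save(_ notes: [String], as name: String) throws {
        let data = try JSONEncoder().encode(notes)
        try data.write(to: directory.appendingPathComponent(name), options: .atomic)
    }

    func load(_ name: String) throws -> [String] {
        let data = try Data(contentsOf: directory.appendingPathComponent(name))
        return try JSONDecoder().decode([String].self, from: data)
    }
}

// Integration check: exercises the store together with its real dependency,
// the file system, in a unique temporary directory that is removed afterwards.
func runNotesStoreIntegrationCheck() throws -> [String] {
    let directory = FileManager.default.temporaryDirectory
        .appendingPathComponent(UUID().uuidString, isDirectory: true)
    try FileManager.default.createDirectory(at: directory, withIntermediateDirectories: true)
    defer { try? FileManager.default.removeItem(at: directory) }

    let store = NotesStore(directory: directory)
    try store.save(["Buy milk", "Ship Part 4"], as: "notes.json")
    return try store.load("notes.json")
}
```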

To begin, let's look at unit testing with the following example. The function below returns the factorial of an integer. You could test it by running it with different inputs and checking the results by hand. For example, the factorial of 4 is 24.

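```swift
// Returns num! (the factorial of num). Overflows Int for num > 20 on 64-bit platforms.
func getFactorial(of num: Int) -> Int {
    precondition(num >= 0, "Factorial is undefined for negative numbers")
    // 0! and 1! are both 1; returning early also avoids the invalid range 1...0.
    guard num > 1 else { return 1 }
    var fact = 1
    for factor in 2...num {
        fact *= factor
    }
    return fact
}
```

Now, to automate the check, let's write a simple test that calls the function and compares its result with the expected value.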

```swift
import XCTest
// YourApp is a hypothetical module name: import the module that defines getFactorial(of:).
@testable import YourApp

final class GetFactorialTests: XCTestCase {
    func testGetFactorial() {
        // 4! = 4 × 3 × 2 × 1 = 24
        XCTAssertEqual(getFactorial(of: 4), 24)
    }
}
```

This test fails if getFactorial(of:) doesn't return the expected result. For brevity, the following sections focus on how to run tests that check app performance in iOS.

Getting Started with Performance Testing in iOS

The performance of an application depends on multiple factors, including the backend, the network, the application itself running on the device, battery level, background services, and more. A developer or mobile tester can use different scenarios to test how well an app performs.

Apple introduced performance testing support in the XCTest framework at WWDC 2014. XCTest can evaluate the performance of code blocks within unit or UI tests.

Performing all of these checks manually is unsustainable in iOS development, because any missed issue might result in an application that is unusable, unstable, or sluggish. A development team can't personally check every performance concern all the time. Instead, an application's performance should be monitored regularly and automatically. Fortunately, there are a few ways to automate performance testing of iOS applications:

Regularly performing instrumentation tests.

Framework-based automated performance testing with XCTest.

Utilizing the appropriate monitoring tools for application performance.

Let's look at how to put these approaches into practice.

Performance Testing with XCTest

There aren't many tools or frameworks for performance testing, and the ones that exist differ from standard unit tests. Unit tests check the validity or logic of your code. Performance tests instead measure how the application, or an action within it, performs under various conditions. Performance testing may be the quickest way to discover underlying performance problems that would otherwise be difficult to identify through the usual QA process.

measureBlock (called measure(_:) in Swift) is part of the XCTest framework. You can call it inside any test method to find out how long a block of code takes to run. Below is an example of how it can be used:

Using measure in Swift:

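```swift
import XCTest

final class ImportantActionsPerformanceTests: XCTestCase {
    func testFunction() {
        // SomeRandomClass is a placeholder for the type whose work you want to time.
        let someRandomClass = SomeRandomClass()
        measure {
            someRandomClass.doImportantActions()
        }
    }
}
```

Baselines are stored in project.xcodeproj/xcshareddata/xcbaselines/. This folder contains an Info.plist listing host machine and target combinations. Each set of baseline values is tied to a UUID that Xcode generates for that machine-target combination. If you test on another machine with the same specs, the same baseline is used.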

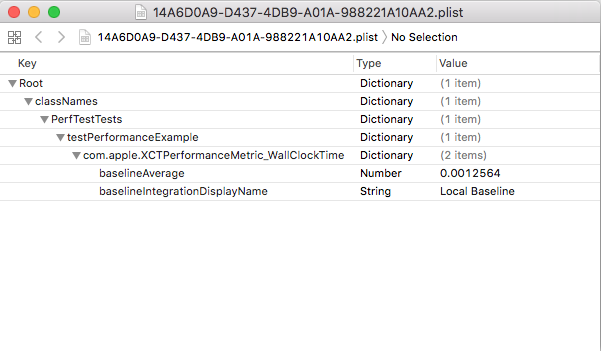

The measure block executes the code multiple times (10 by default) and reports the average value at the end. That average can serve as a baseline for subsequent test runs. The image below shows the Info.plist under the xcbaselines folder that holds the baseline configuration for a specific machine-target combination. You can also set the baseline value manually here. If the average time exceeds the baseline by more than the allowed deviation, the performance test fails.

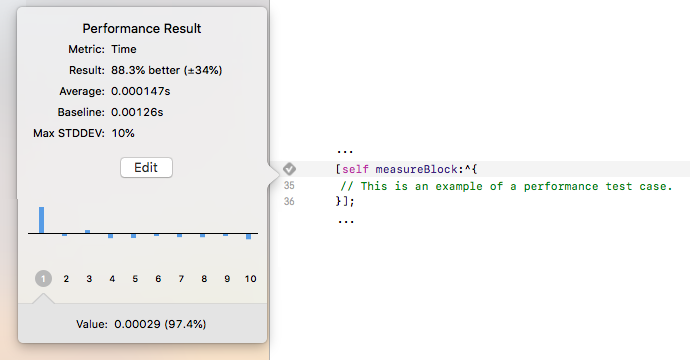

Xcode displays a popover showing both the average and the baseline; the image below shows such a popover. The key distinction is that the average is measured in the current run. The baseline is a reference value, typically taken from an earlier run, that the average is compared against. If you haven't specified a baseline manually, you can set one from a run's results. The popover also reports the standard deviation across iterations, which indicates how consistent the measurements were.
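The following is a simplified model of a baseline check, which makes the relationship between the average, the baseline, and the standard deviation more concrete. It is an illustration, not XCTest's actual implementation. The `evaluate(samples:baseline:maxRegression:maxRelativeStdDev:)` function, the `BaselineVerdict` type, and the 10% thresholds for allowed regression and relative standard deviation are all assumptions.

```swift
// Simplified model of a performance baseline check; XCTest's real logic may differ.
enum BaselineVerdict: Equatable {
    case pass
    case tooNoisy   // relative standard deviation above the allowed maximum
    case regressed  // average slower than baseline by more than the allowed regression
}

func evaluate(samples: [Double], baseline: Double,
              maxRegression: Double = 0.10,
              maxRelativeStdDev: Double = 0.10) -> BaselineVerdict {
    precondition(samples.count > 1 && baseline > 0, "Need 2+ samples and a positive baseline")
    let average = samples.reduce(0, +) / Double(samples.count)
    // Sample standard deviation (n - 1 in the denominator).
    let variance = samples.map { ($0 - average) * ($0 - average) }.reduce(0, +)
        / Double(samples.count - 1)
    let relativeStdDev = variance.squareRoot() / average

    if relativeStdDev > maxRelativeStdDev { return .tooNoisy }
    if average > baseline * (1 + maxRegression) { return .regressed }
    return .pass
}
```

For example, `evaluate(samples: [1.3, 1.3, 1.3], baseline: 1.0)` returns `.regressed`: the runs are consistent, but 30% slower than the baseline. In contrast, `[0.5, 1.5, 0.5, 1.5]` averages exactly the baseline yet is reported as `.tooNoisy`.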

To demonstrate how this works, let's create a sample app named XCUITest-Performance with a UI Test target. A performance test created from the UI test template can launch the app and interact with it. A simple example looks like this:

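```swift
import XCTest

final class XCUITestPerformanceUITests: XCTestCase {
    func testApplicationPerformance() {
        let app = XCUIApplication()
        app.launch()
        measure {
            // Assumes the app shows a button labeled "Signup" after launch.
            app.buttons["Signup"].tap()
        }
    }
}
```

This measures the average time it takes to tap the app's Signup button once the app has launched. The launch itself happens outside the measure block and isn't timed. Performance measurement can be even more granular, though: you can select a start point and an end point.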

Next, let's measure the app launch itself. Instead of timing a block with the default wall-clock metric, you can pass specific metrics to measure(metrics:block:). XCTOSSignpostMetric.applicationLaunch records how long the app takes to launch. The test looks like this:

```swift
import XCTest

final class LaunchPerformanceTests: XCTestCase {
    func testApplicationPerformance() {
        // Measures app launch duration using the applicationLaunch signpost metric.
        measure(metrics: [XCTOSSignpostMetric.applicationLaunch]) {
            XCUIApplication().launch()
        }
    }
}
```

When you run the test in Xcode, notice that it launches the app several times. On the first run there is no baseline yet, so Xcode reports the measured values and lets you choose a suitable baseline for future runs.

Once the baseline is set, the tests will fail if Xcode detects that the average values have risen above the baselines. This lets you run both your unit tests and your performance tests with XCTest. However, the baseline stored in Info.plist is machine specific. If you run the same tests in CI, or on any other machine without the same configuration, the baseline won't apply.

Continuing Performance Tests

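While monitoring performance, you can be more granular and specify exactly where measurement starts and stops. Call measureMetrics(_:automaticallyStartMeasuring:for:) with automaticallyStartMeasuring set to false, then use startMeasuring() and stopMeasuring() to time only a particular section of test code. Let's measure just the Signup button tap: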

```swift
import XCTest

final class SignupPerformanceTests: XCTestCase {
    func testApplicationPerformanceMeasureMetrics() {
        let app = XCUIApplication()
        app.launch()
        measureMetrics([XCTPerformanceMetric.wallClockTime], automaticallyStartMeasuring: false) {
            // Only the code between startMeasuring() and stopMeasuring() is timed.
            startMeasuring()
            app.buttons["Signup"].tap()
            stopMeasuring()
        }
    }
}
```

When you run this test, the app launches only once, but the tap is measured on every iteration of the block. This technique lets you define start and end points for performance measurement during test execution.

XCTest can also run during CI in your DevOps pipelines, but many performance issues won't surface during the test phase. You also need to be aware of the issues occurring on your customers' iOS devices so you can fix them before they affect more users. Sentry helps you answer several questions:

- Is your application's performance improving?
- Does your most recent release have a cold start problem, run slower, or show frozen frames?
- Which HTTP requests or remote services are slow?

To provide these details for your iOS application, Sentry collects a variety of data points through automatic instrumentation. Once the data is collected, you can analyze performance trends and investigate transactions on the Performance Monitoring dashboard. From there, you can start addressing the code that's causing performance issues.

The metrics on the Sentry Performance dashboard also let you track and shed light on how people interact with your application. Once you've identified the relevant criteria to measure, you'll have a measurable assessment of your application's health. That makes it much simpler to determine whether the system has a performance problem or errors are occurring.

Writing Robust Performance Tests

In Xcode, you can conduct performance tests alongside unit and user interface tests using the XCTest framework. It is the responsibility of the developers to ensure that the test is accurately set up and functions properly. There are a few things to consider while setting up performance testing.

Before you start performance testing, decide what the user experience should be. Then choose the level at which the tests should run: unit, integration, or user interface.

A performance test runs the same code block several times. Take care to ensure that each invocation is atomic and independent, and that the results don't show a large standard deviation. Xcode flags metrics that deviate from expectations. In the report, these appear next to the test name in the left pane with a different icon (⤵️) from the passing (✅) and failing (❌) icons.
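The following sketch shows what independent invocations look like in practice. It assumes a hypothetical `measureIndependently(iterations:setUp:work:)` helper written in plain Swift rather than XCTest. Every iteration builds fresh input in `setUp`, which isn't timed, and only `work` is measured. If the same array were sorted in place on every iteration, later iterations would operate on already-sorted data and the timings would drift.

```swift
import Dispatch

// Hypothetical harness: each iteration gets freshly built input from `setUp`
// (not timed, nothing shared between iterations) and only `work` is timed.
// Returns one duration in seconds per iteration.
func measureIndependently<Input>(iterations: Int,
                                 setUp: () -> Input,
                                 work: (Input) -> Void) -> [Double] {
    var durations: [Double] = []
    durations.reserveCapacity(iterations)
    for _ in 0..<iterations {
        let input = setUp()
        let start = DispatchTime.now()
        work(input)
        let end = DispatchTime.now()
        durations.append(Double(end.uptimeNanoseconds - start.uptimeNanoseconds) / 1_000_000_000)
    }
    return durations
}
```

For example, a `setUp` that returns `Array((0..<10_000).reversed())` paired with a `work` closure that sorts its input gives every iteration the same unsorted starting point.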

Planned test data is data prepared or chosen to meet the requirements and inputs of one or more test cases. For performance tests, it's best to use planned test data rather than random data, since it makes the findings more reliable. Haphazard data produces unreliable and unhelpful results.
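Planned data doesn't have to be typed by hand; it only has to be the same on every run. The following is a sketch. It assumes a hypothetical `plannedPrices(count:seed:)` helper and swaps in SplitMix64, a well-known seedable pseudo-random generator. The resulting data looks varied but can be reproduced from its seed on the same Swift toolchain.

```swift
// SplitMix64: a small, well-known pseudo-random generator. Seeding it makes
// "random-looking" test data identical on every run, so results stay comparable.
struct SeededGenerator: RandomNumberGenerator {
    private var state: UInt64

    init(seed: UInt64) { state = seed }

    mutating func next() -> UInt64 {
        state &+= 0x9E37_79B9_7F4A_7C15
        var z = state
        z = (z ^ (z >> 30)) &* 0xBF58_476D_1CE4_E5B9
        z = (z ^ (z >> 27)) &* 0x94D0_49BB_1331_11EB
        return z ^ (z >> 31)
    }
}

// Hypothetical planned data set: prices between 1 and 500, reproducible from the seed.
func plannedPrices(count: Int, seed: UInt64) -> [Int] {
    var generator = SeededGenerator(seed: seed)
    return (0..<count).map { _ in Int.random(in: 1...500, using: &generator) }
}
```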

The total time needed to complete your performance tests grows significantly as you add a variety of tests. Keep your performance tests fast so you can run them frequently and prevent regressions. Depending on how important your application's performance is to your company, it may be worth running performance tests on several platforms for each pull request. Running them in a separate job, for example a dedicated GitHub Actions workflow, keeps them from disrupting the regular flow of unit and UI tests.

Apple's additions to the core XCTest framework can help you find performance bottlenecks in your iOS applications early. Because XCTest runs during the continuous integration phase of DevOps pipelines, it can also detect regressions. However, XCTest alone isn't enough to catch all performance issues during DevOps builds. Sentry's suite of monitoring tools, including Performance Monitoring, helps you identify performance issues in your iOS apps with proper metadata and custom parameters. And since performance tests are written with XCTest, iOS developers don't need to learn a new programming language or framework to write them.

Conclusion

To get reliable results, you must run meaningful tests; inaccurate test results may hold up your application's deployment. Your time and money are wasted if your program doesn't work for real users. If an application launches with bugs or performance issues, negative online reviews may kill its popularity. Devices and operating systems in both the Apple and Android ecosystems are so diverse that simulators and emulators may not be enough for complete test coverage of your apps. Testing services such as AWS Device Farm, Kobiton, and HockeyApp let you compare software versions or test against competitors. To get accurate mobile user experience data, test application performance on both emulators and real devices.

This is the fourth article in the Performance Series: Improve your iOS Applications. All articles in this series are linked at the beginning of this article and can be read independently or in sequence.