How We Run Successful Beta Tests with Error Reporting

We recently completed a large beta test for our new product here at Testmo. We build a test management tool, so most of our users are professional software testers. As you can imagine, our customers are a rather critical group when it comes to software quality. We learned some important lessons about running a large beta test, and we want to share how Sentry error reporting helped us find and fix issues quickly.

During the beta test, we received a lot of great feedback and questions from users. These were usually questions about using the product, feedback about missing features, tips to improve the documentation, and similar suggestions. But users reported very few actual problems and bugs. We have large test suites for the product, but all new software ships with problems, and ours was surely no different.

Fortunately, we have implemented Sentry error reporting throughout our stack, which gives us additional useful data. We added Sentry to our front-end code (JavaScript), our back-end and API layer (PHP + Laravel), and even our website and customer portal. Whenever there's a problem with our app or infrastructure, we receive a chat notification in Slack so we can investigate the issue.

Our help desk tool pinged us whenever a customer question came in, usually in the form of "How can I do this?" or "Can you add that feature?". We also started to receive error reports from Sentry from time to time. What we didn't receive, though, were messages from customers about errors or issues they saw. This surprised us: we show (friendly) error messages in the app when something goes wrong, so users must have seen these errors as well.

Was it because they assume that most vendors ignore error reports? Or do users simply expect some hiccups during a beta test? We don't know, but the Sentry error reports we received gave us all the information we needed to identify and fix the issues anyway.

How we fixed issues with Sentry

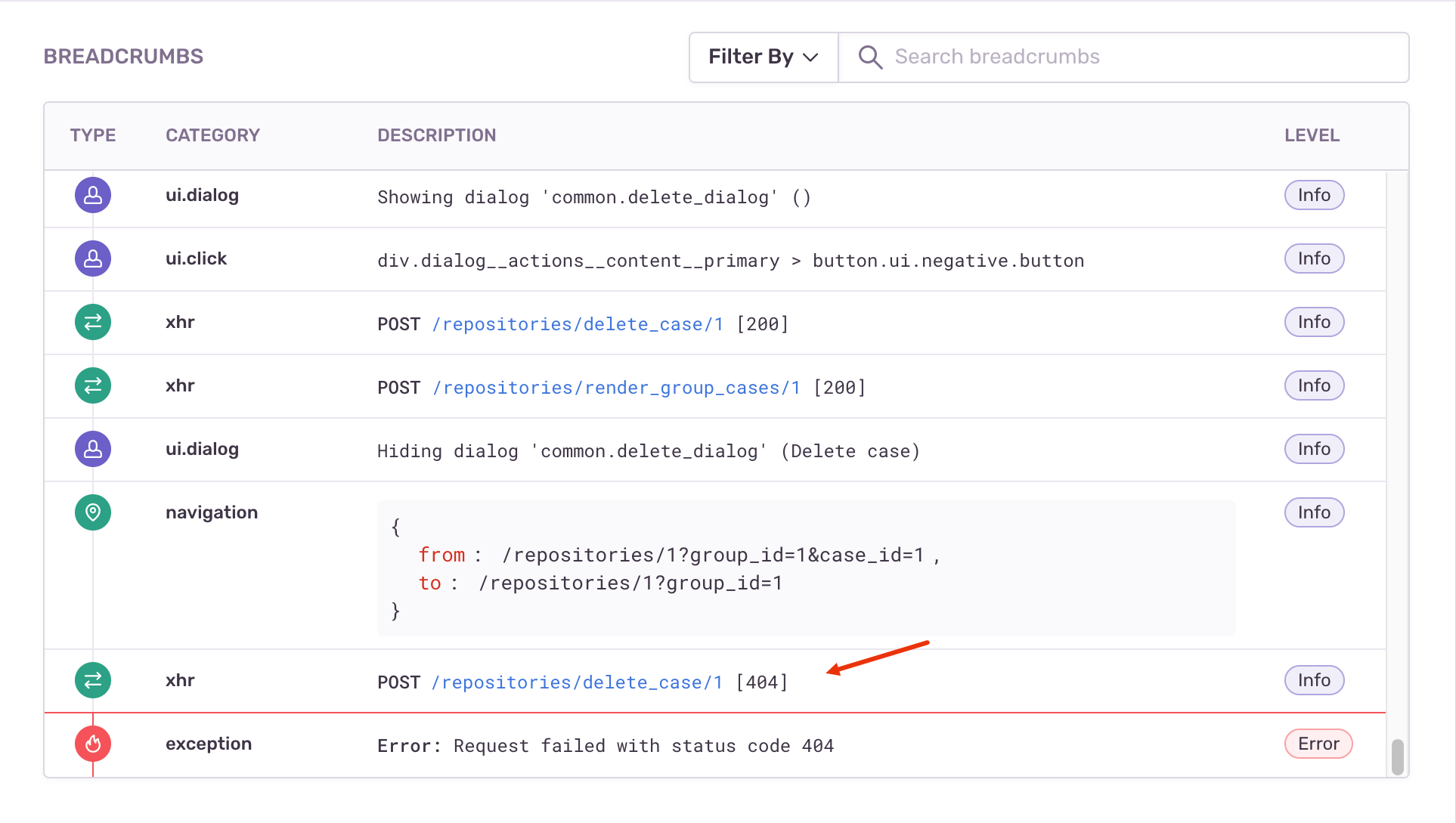

Let's take a look at one of the issues we found and solved this way. We make heavy use of Sentry's breadcrumb feature. We've instrumented our code with additional events at strategic points so we have more details in our error reports. The following issue is a good example:

This event trail from the breadcrumbs section of a Sentry error report shows how a user opened the Delete dialog to remove an entry. The user then confirmed the dialog by clicking the submit button. Next, our front-end code correctly sent a request to delete the entry, refreshed the view's fragment and updated the browser's location.

But then something strange happened: our code sent another delete request for the same entry. Our API correctly answered it with a 404 error, as the entry had already been removed. What happened? After reviewing the stack trace logged by Sentry, we were able to identify the problem.

When the user clicks the submit button, we disable the UI to prevent duplicate clicks. However, while the delete dialog was being hidden after the successful action, there was a brief window during the hide animation in which the button could be clicked again. This particular user had simply clicked the confirm button a second time by mistake, within the few milliseconds in which this was possible.
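One way to close this kind of gap is to model the dialog as a small state machine in which a successful delete never returns the dialog to a clickable state. The sketch below is a hypothetical illustration, not Testmo's actual code: `DialogState`, `transition`, `countDeleteRequests`, and the event names are all assumptions. The dialog accepts a confirm click only while "open"; after success it moves to "closing", which stays locked until the hide animation ends.

```typescript
// Hypothetical dialog lifecycle. A confirm click is only honored in "open";
// "closing" (the hide animation) ignores clicks just like "submitting".
type DialogState = "open" | "submitting" | "closing" | "closed";

type DialogEvent =
  | "confirmClick"
  | "deleteSucceeded"
  | "deleteFailed"
  | "hideAnimationEnd";

interface Transition {
  next: DialogState;
  sendDelete: boolean; // whether this event should trigger a DELETE request
}

function transition(state: DialogState, event: DialogEvent): Transition {
  switch (event) {
    case "confirmClick":
      // Only the first click while the dialog is open sends a request.
      return state === "open"
        ? { next: "submitting", sendDelete: true }
        : { next: state, sendDelete: false };
    case "deleteSucceeded":
      // Start hiding, but stay locked: there is no path back to "open".
      return { next: state === "submitting" ? "closing" : state, sendDelete: false };
    case "deleteFailed":
      // Re-enable the dialog so the user can retry after an error.
      return { next: state === "submitting" ? "open" : state, sendDelete: false };
    case "hideAnimationEnd":
      return { next: state === "closing" ? "closed" : state, sendDelete: false };
    default: {
      const unreachable: never = event;
      throw new Error(`Unknown dialog event: ${unreachable}`);
    }
  }
}

// Replays a sequence of events from a fresh, open dialog and counts
// how many DELETE requests would have been sent.
function countDeleteRequests(events: DialogEvent[]): number {
  let state: DialogState = "open";
  let requests = 0;
  for (const event of events) {
    const t = transition(state, event);
    if (t.sendDelete) requests++;
    state = t.next;
  }
  return requests;
}

// Usage: the sequence from the error report, with the second click
// landing during the hide animation.
console.log(
  countDeleteRequests(["confirmClick", "deleteSucceeded", "confirmClick", "hideAnimationEnd"]),
); // 1
```

The design choice here is that the lock is tied to the dialog's lifecycle rather than to the request: only a failed request re-opens the dialog, so retries after an error still work while late clicks on a closing dialog are ignored.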

This was an easy fix once we found the problem. But this class of bug would have been extremely difficult to diagnose and reproduce without such a detailed log. During the beta, we identified various other problems that would have been hard to find without the Sentry reports, such as rare deadlocks in database queries.

We also have application- and infrastructure-level logging in place, but we noticed that such general-purpose logs usually lack the details needed to actually track down issues. That's why, to reproduce issues in our staging and development environments, we often rely on the breadcrumbs in Sentry's error reports.
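Sentry's JavaScript SDK lets you record custom events like the dialog and request steps above with `Sentry.addBreadcrumb()`, and its `maxBreadcrumbs` option caps how many are kept. To illustrate the idea without depending on the SDK, here is a minimal, self-contained stand-in. The `BreadcrumbTrail` class, its methods, the entry ID, and the URLs are hypothetical and not Sentry's implementation: it keeps a bounded list of recent events and attaches a copy of that list to each error report.

```typescript
// Hypothetical stand-in for an error reporter's breadcrumb trail.
// This is NOT the Sentry SDK; it only illustrates the concept.
interface Breadcrumb {
  timestamp: number; // milliseconds, from the injected clock
  category: string;  // e.g. "ui.dialog", "http"
  message: string;
}

interface ErrorReport {
  error: string;
  breadcrumbs: Breadcrumb[];
}

class BreadcrumbTrail {
  private readonly maxCrumbs: number;
  private readonly now: () => number;
  private crumbs: Breadcrumb[] = [];

  // The default cap of 100 is an arbitrary choice for this sketch.
  constructor(maxCrumbs: number = 100, now: () => number = () => Date.now()) {
    this.maxCrumbs = maxCrumbs;
    this.now = now;
  }

  add(category: string, message: string): void {
    this.crumbs.push({ timestamp: this.now(), category, message });
    // Drop the oldest entries so the trail stays bounded.
    if (this.crumbs.length > this.maxCrumbs) {
      this.crumbs.splice(0, this.crumbs.length - this.maxCrumbs);
    }
  }

  // Attach a snapshot of the current trail to an error report, so later
  // breadcrumbs don't change a report that was already captured.
  capture(error: Error): ErrorReport {
    return { error: error.message, breadcrumbs: [...this.crumbs] };
  }
}

// Usage: recording a trail like the one described in the delete-dialog
// example (entry ID 42 and the API paths are hypothetical).
const trail = new BreadcrumbTrail();
trail.add("ui.dialog", "Opened Delete dialog for entry 42");
trail.add("ui.click", "Clicked submit button");
trail.add("http", "DELETE /api/entries/42 -> 200");
trail.add("navigation", "Refreshed view fragment, updated location");
trail.add("http", "DELETE /api/entries/42 -> 404");
const report = trail.capture(new Error("Entry not found"));
console.log(report.breadcrumbs.map((b) => `${b.category}: ${b.message}`).join("\n"));
```

Because the report carries the ordered sequence of user actions and requests rather than just the final error, a developer can replay the same steps in a staging or development environment.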

Lessons learned: User feedback & error reporting

A beta test is a great way to make sure that a product is ready before it's fully launched. It's simply impossible to test every scenario yourself, and users will always find creative ways to break things that you didn't expect. But if you run a beta test without robust error reporting in place, you miss out on a lot of feedback you won't hear from users directly.

In our experience, while users ask questions about using the product, tell you about features they want to see and point out problems in the documentation, they often won't email you about bugs they notice. With error reporting in place, they don't need to. As users try your product in every way you did and didn't imagine, you still benefit from the issues they run into. And when implemented well, automatic error reports include many details you couldn't get otherwise.

Many users will just give up and try a different product if they encounter too many issues. With today's high expectations for software quality, you want to make sure that your product is really ready before launching it. For us, error reporting with a tool such as Sentry is a great way to make sure we don't miss critical issues.

In our case, error reporting even helped with sales and customer loyalty. We sometimes notified users that we had received an error report from their account and had already identified and fixed the issue. Users were always pleasantly surprised by the proactive support and loved it.