How Sentry could stop npm from breaking the Internet

Caching is great! When it works… When it fails, it puts a big load on your backend, resulting in either a self-inflicted DoS, increased server bills, or both.

This article is inspired by a real-world incident that happened to npm back in 2016. In the next part, Ben recounts his personal experience responding to the incident while working at npm. Afterwards, Lazar walks through a different scenario and shows how Sentry enables you to both detect cache misses quickly and maintain ongoing visibility, so you’re always on top of new problems.

How VSCode broke npm

In early November 2016, we noticed some really weird behavior that was threatening to bring down npm’s servers. npm was very read-heavy and optimized for this. When you requested a package, you would usually be served from our CDN, with a very small amount of traffic falling through to our backend servers. On this particular morning, we suddenly saw a huge number of GET requests falling through to our backend servers (roughly 50% missing the cache rather than 2%). We were starting to overwhelm the CPU and memory of our CouchDB read servers, and it was only a matter of time before npm went down.

Shelling in and tailing logs, I noticed something very strange. All the requests missing the cache were for package names prefixed with @types, and many were for partially formed package names, e.g., @types/loda rather than @types/lodash. The majority of these requests were returning 404s, which npm did not cache (explaining why the requests were falling through to our backend servers).
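To make that failure mode concrete, here is a minimal sketch of a read-through cache that stores only successful responses. The `ReadThroughCache` class, the `Backend` type, and the registry contents are hypothetical illustrations, not npm's actual code.

```typescript
// Hypothetical model of a read-through cache that does not store 404s.
type RegistryResponse = { status: 200 | 404; body: string };
type Backend = (packageName: string) => RegistryResponse;

class ReadThroughCache {
  private store = new Map<string, RegistryResponse>();
  private backend: Backend;
  backendCalls = 0;

  constructor(backend: Backend) {
    this.backend = backend;
  }

  get(packageName: string): RegistryResponse {
    const cached = this.store.get(packageName);
    if (cached !== undefined) return cached; // cache hit

    this.backendCalls += 1; // cache miss: fall through to the backend
    const response = this.backend(packageName);
    // Only successful responses are stored, so a 404 is a miss every time.
    if (response.status === 200) this.store.set(packageName, response);
    return response;
  }
}

// Made-up registry contents for the example.
const published = new Set(["@types/lodash"]);
const registry: Backend = (packageName) =>
  published.has(packageName)
    ? { status: 200, body: `metadata for ${packageName}` }
    : { status: 404, body: "not found" };

const registryCache = new ReadThroughCache(registry);
for (let i = 0; i < 3; i++) registryCache.get("@types/lodash"); // 1 backend call
for (let i = 0; i < 3; i++) registryCache.get("@types/loda"); // 3 backend calls
console.log(registryCache.backendCalls); // 4
```

In this model the complete name costs one backend call no matter how often it is requested, while every repeat of the partial name reaches the backend again.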

Fortunately, the @types prefix was a bit of a smoking gun pointing towards Microsoft’s open source projects. We were able to find a recent update to VSCode on GitHub that looked like the culprit, and we were fortunate enough to know people working on the project whom we could call. VSCode had accidentally built a feature that was DDoSing npm.

This is a case where the client and server do not operate with a mutual agreement on handling certain requests, ultimately leading to costly inefficiencies. And this can happen to anyone, even to teams belonging to the same org.

Breaking cache easily

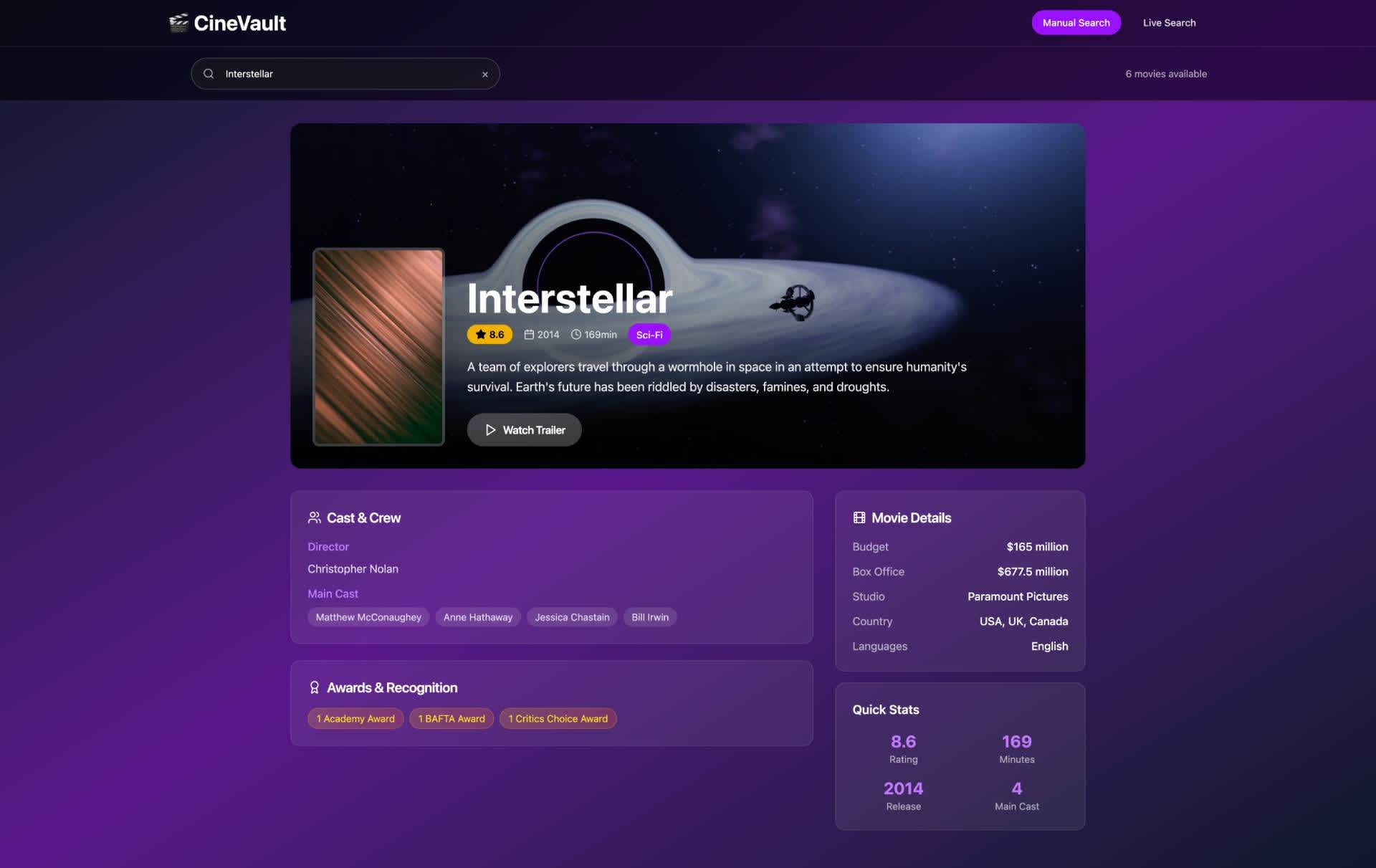

Let’s look at a scenario that seems harmless: a developer adds typeahead to a search input to improve user experience, unaware that this change will trigger a flood of uncached requests and 404s. What started as a simple frontend tweak can quickly spiral into a costly backend problem. I built an app to demonstrate this (github repo): https://cache-misses-monitoring.nikolovlazar.workers.dev/

It’s a simple movie search app. You type the name of a movie into the search input at the top, hit enter, and you get results back. The /movie-details endpoint is expensive, so its results are cached for a long period of time because movie details don’t change that often (if at all). But because it’s heavily cached, it needs to be invoked with the exact movie title to hit the cache.

Later, to improve the user experience, a typeahead feature is developed (it provides autocomplete to users as they type). This feature still invokes the /movie-details endpoint, but with a partial search query. The movie details simply appear when the user finishes writing the query, with no need to press “enter” to search.

But in the meantime, it produces a 404 for every keystroke, bypasses the caching layer, and hits the server directly. The issue was that the frontend and backend teams weren't aligned on this.
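As a rough illustration of the request pattern described above, the sketch below lists what a typeahead would send for a single title. The `typeaheadQueries` and `countNotFound` helpers and the two-title catalog are hypothetical and not taken from the demo app.

```typescript
// Hypothetical catalog: the cached endpoint only knows exact titles.
const catalog = new Set(["Heat", "Inception"]);

// Every keystroke sends the text typed so far, so one title yields one
// request per character.
function typeaheadQueries(title: string): string[] {
  return Array.from(title, (_, i) => title.slice(0, i + 1));
}

// Counts the queries that do not match an exact title, i.e. the 404s.
function countNotFound(queries: string[]): number {
  return queries.filter((q) => !catalog.has(q)).length;
}

const inceptionQueries = typeaheadQueries("Inception");
console.log(inceptionQueries.length); // 9 requests
console.log(countNotFound(inceptionQueries)); // 8 of them are 404s
```

Under these assumptions, a search that used to cost one cacheable request now costs nine, and only the last one can ever hit the cache.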

How to monitor caching

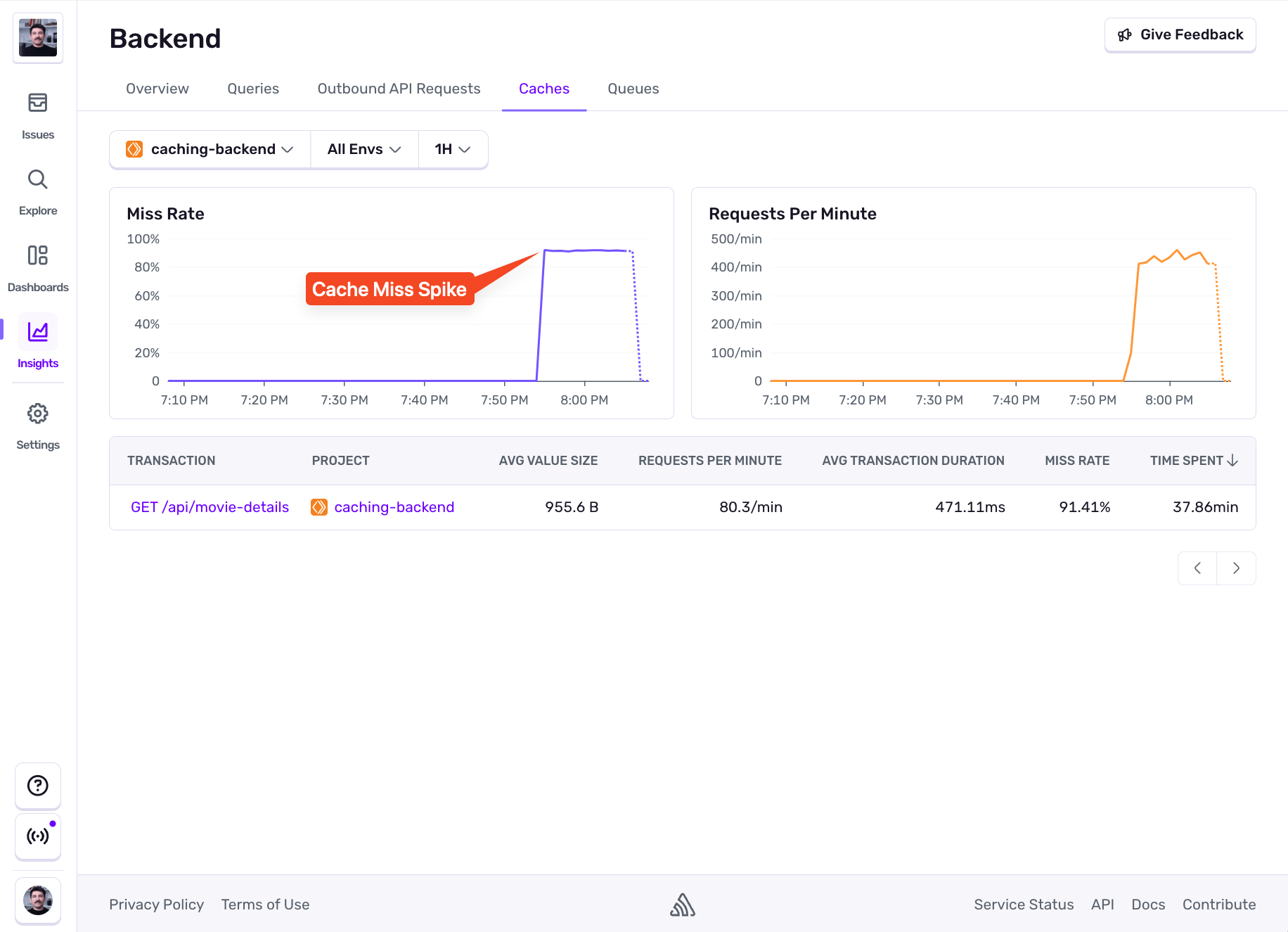

Now let’s see how we can monitor caching issues like this with Sentry. Sentry is already set up in the backend and the caching layer is instrumented, so cache lookups are already being traced and we can go straight to the Insights > Backend > Caches page in Sentry.
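For readers wondering what an instrumented caching layer might look like, here is a minimal sketch. It uses a hypothetical `recordSpan` callback in place of the Sentry SDK so that it stays self-contained. The `cache.hit` and `cache.key` attribute names are the ones used in the filters later in this article, while the `CacheSpan` shape, the `cache.get` op value, and the `instrumentedGet` helper are assumptions for illustration. Check the SDK documentation for the real span API.

```typescript
// Hypothetical span shape; a real app would create spans through the Sentry SDK.
type CacheSpan = {
  op: string;
  attributes: { "cache.key": string; "cache.hit": boolean };
};

// Looks up a key and reports whether the lookup hit the cache.
function instrumentedGet<V>(
  store: Map<string, V>,
  key: string,
  recordSpan: (span: CacheSpan) => void,
): V | undefined {
  const value = store.get(key);
  recordSpan({
    op: "cache.get",
    attributes: { "cache.key": key, "cache.hit": value !== undefined },
  });
  return value;
}

const recorded: CacheSpan[] = [];
const movieCache = new Map([["Heat", { year: 1995 }]]);

instrumentedGet(movieCache, "Heat", (s) => recorded.push(s));
instrumentedGet(movieCache, "Hea", (s) => recorded.push(s));
console.log(recorded.map((s) => s.attributes["cache.hit"])); // [ true, false ]
```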

We can see there’s a significant step change in the Miss Rate chart. We’re seeing more than a 90% Miss Rate, which means more than 90% of the requests aren’t hitting the cache and are going straight to our backend. This is our first clue, but we don’t know why that’s happening yet. Let’s head to Explore > Traces to find out more.
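The miss rate itself is simple arithmetic: misses divided by total lookups. A minimal sketch, with made-up counts chosen to match the 2% and 90% figures mentioned in this article:

```typescript
// Miss rate = misses / (hits + misses), expressed as a fraction of lookups.
function missRate(hits: number, misses: number): number {
  const total = hits + misses;
  return total === 0 ? 0 : misses / total;
}

// Made-up counts for illustration.
console.log(missRate(980, 20)); // 0.02
console.log(missRate(100, 900)); // 0.9
```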

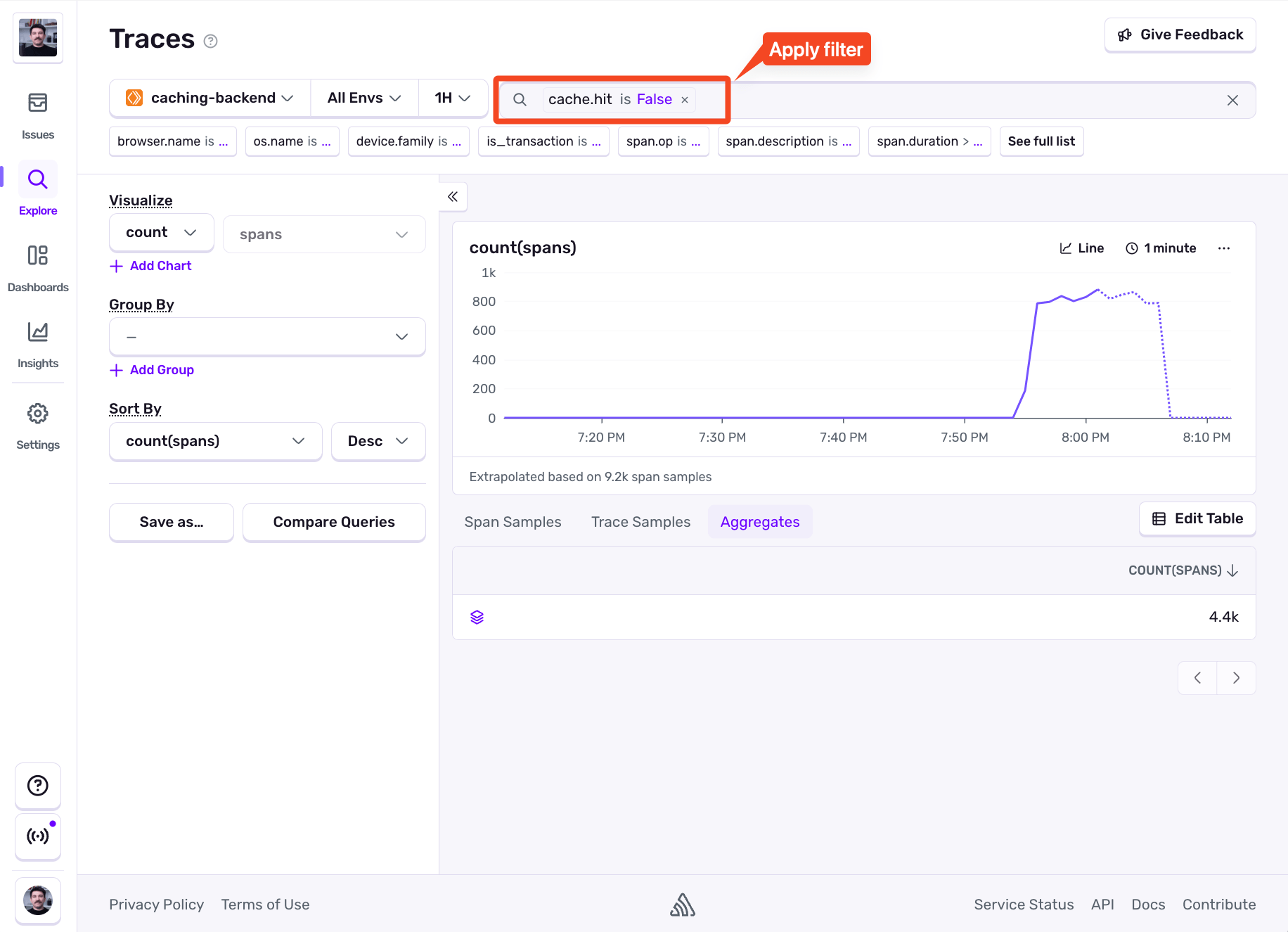

We’re going to apply the cache.hit is False filter to see the actual number of cache misses.

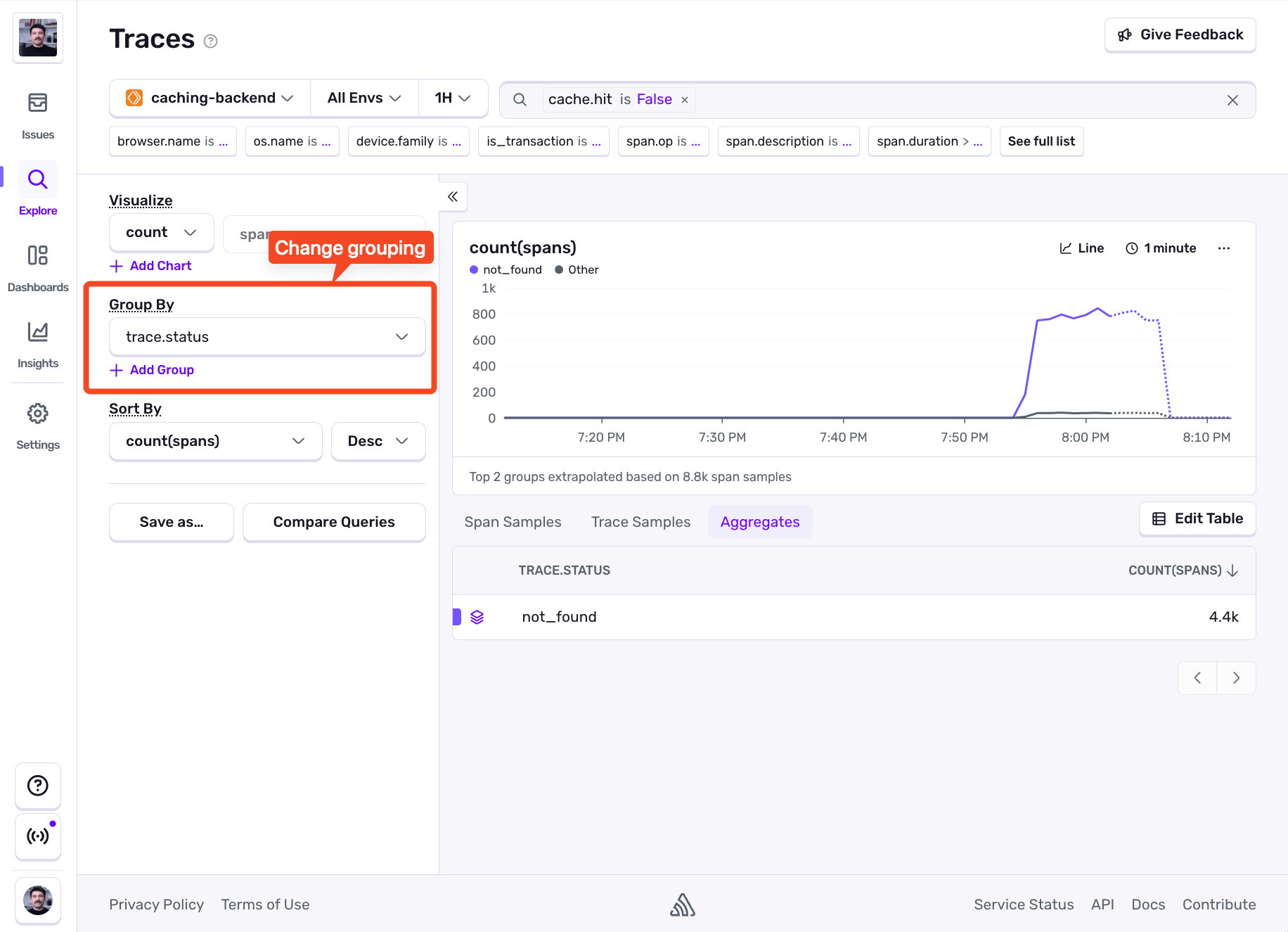

To confirm that the cache misses are happening due to 404s, we’re going to group by trace.status, and voila:

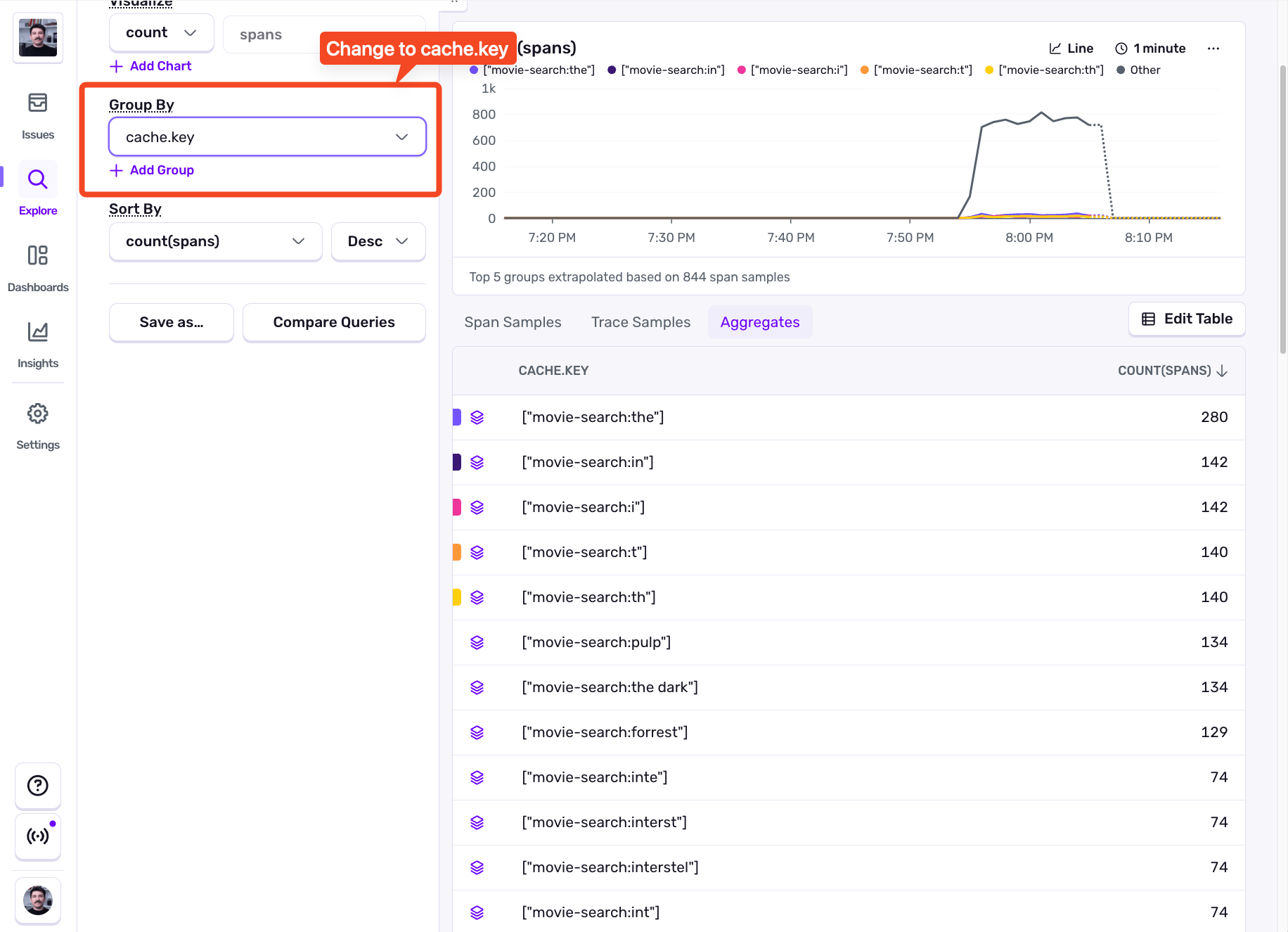

The biggest trace status group is not_found. That tells us that the server returned a 404. Let’s see what the users were actually searching for by setting the “group by” to cache.key.
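In code terms, the filter and the two group-by steps amount to counting cache-miss spans per distinct value. The sketch below runs that over a handful of made-up spans. The `MissSpan` type and `countBy` helper are hypothetical, and only the attribute names come from the steps above.

```typescript
// Made-up spans; field names mirror the attributes used in the explorer.
type MissSpan = {
  "cache.hit": boolean;
  "trace.status": string;
  "cache.key": string;
};

// Counts spans per distinct value of the chosen attribute.
function countBy(
  spans: MissSpan[],
  field: "trace.status" | "cache.key",
): Map<string, number> {
  const counts = new Map<string, number>();
  for (const span of spans) {
    const group = span[field];
    counts.set(group, (counts.get(group) ?? 0) + 1);
  }
  return counts;
}

const sampleSpans: MissSpan[] = [
  { "cache.hit": false, "trace.status": "not_found", "cache.key": "I" },
  { "cache.hit": false, "trace.status": "not_found", "cache.key": "In" },
  { "cache.hit": false, "trace.status": "not_found", "cache.key": "Inc" },
  { "cache.hit": false, "trace.status": "ok", "cache.key": "Inception" },
  { "cache.hit": true, "trace.status": "ok", "cache.key": "Inception" },
];

// Equivalent of the "cache.hit is False" filter.
const cacheMisses = sampleSpans.filter((s) => !s["cache.hit"]);

console.log(countBy(cacheMisses, "trace.status").get("not_found")); // 3
console.log(Array.from(countBy(cacheMisses, "cache.key").keys()));
// [ 'I', 'In', 'Inc', 'Inception' ]
```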

All these look like incomplete searches, as if someone hit “Enter” after every character while typing out the movie’s name. If we check the frontend, we’ll see that the search input implements a debouncedSearchQuery that triggers the search on every keystroke. There’s the problem. Our backend expects only full movie titles, not partial queries.
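One plausible way a debounced query can still fire on every keystroke is when the debounce delay is shorter than the pauses between keystrokes. That is an assumption for illustration, not something verified against the demo app. The sketch below models a trailing debounce as a pure function over hypothetical keystroke timestamps, so it needs no timers.

```typescript
type Keystroke = { atMs: number; value: string };

// A trailing debounce fires for a keystroke only if no other keystroke
// arrives within delayMs after it.
function debouncedQueries(keystrokes: Keystroke[], delayMs: number): string[] {
  return keystrokes
    .filter((k, i) => {
      const next = keystrokes[i + 1];
      return next === undefined || next.atMs - k.atMs >= delayMs;
    })
    .map((k) => k.value);
}

// Hypothetical typing sessions for the title "Heat".
const fastTyping: Keystroke[] = [
  { atMs: 0, value: "H" },
  { atMs: 80, value: "He" },
  { atMs: 160, value: "Hea" },
  { atMs: 240, value: "Heat" },
];
const slowTyping: Keystroke[] = fastTyping.map((k, i) => ({
  value: k.value,
  atMs: i * 500,
}));

console.log(debouncedQueries(fastTyping, 300)); // [ 'Heat' ]
console.log(debouncedQueries(slowTyping, 300)); // [ 'H', 'He', 'Hea', 'Heat' ]
```

Debouncing reduces the number of partial queries for fast typists, but it does not guarantee that the backend only ever sees full titles.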

In the trace view we’re focused on showing the traces and their individual spans, but we could also configure the logger instance within our application to send debug logs whenever search queries happen, along with their results. If we had this set up, we would’ve quickly seen the partial queries failing.

Getting notified when cache misses happen

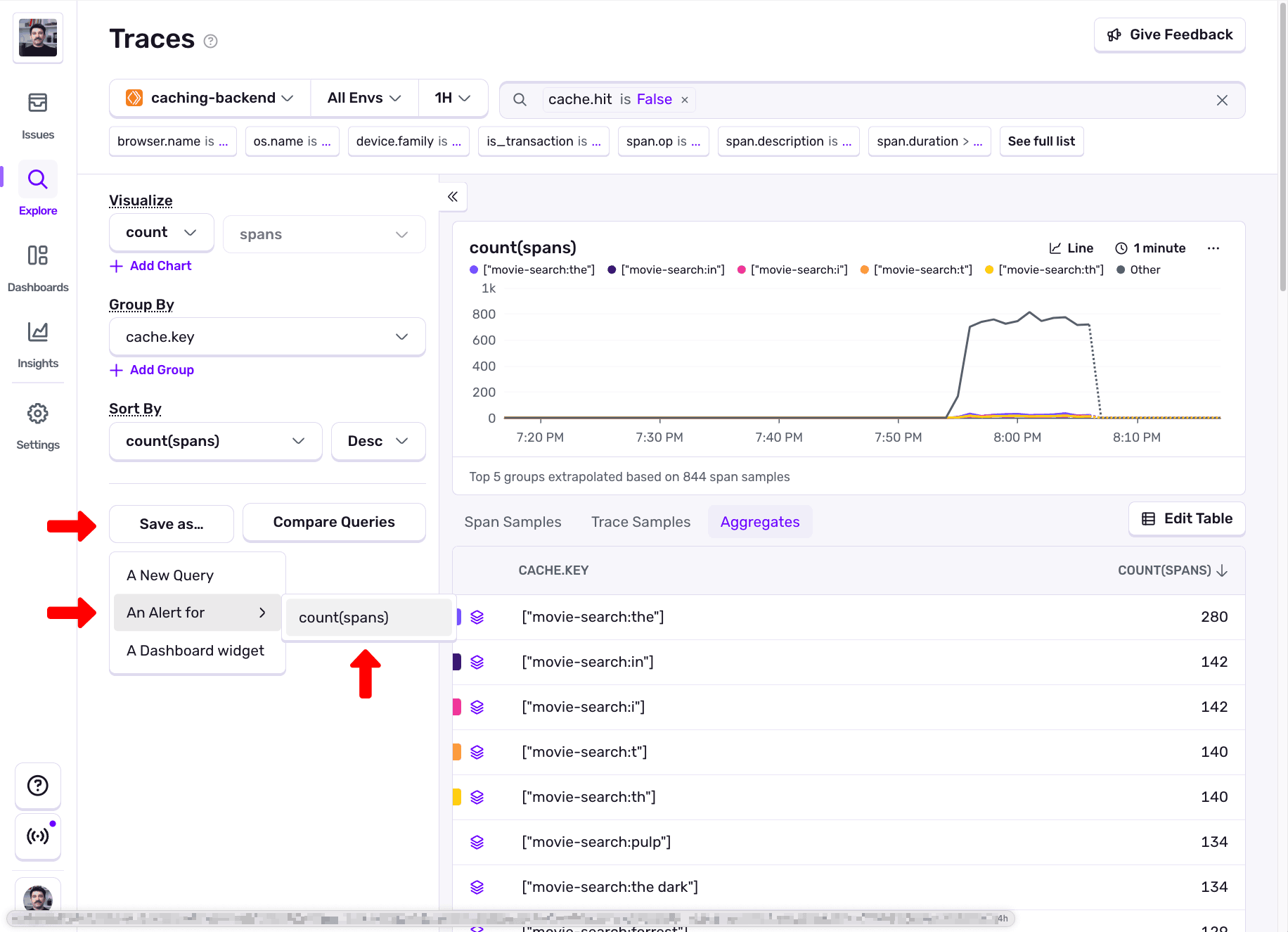

Even though we figured out what caused the issue, we’re still not done. To stay on top of all potential future caching issues, it would be wise to set up an Alert for this, and we can turn our Traces explorer query into an alert. Let’s first remove the trace.status is not_found from the filter, and then in the sidebar on the left side click on Save as... > An alert for > count(spans).

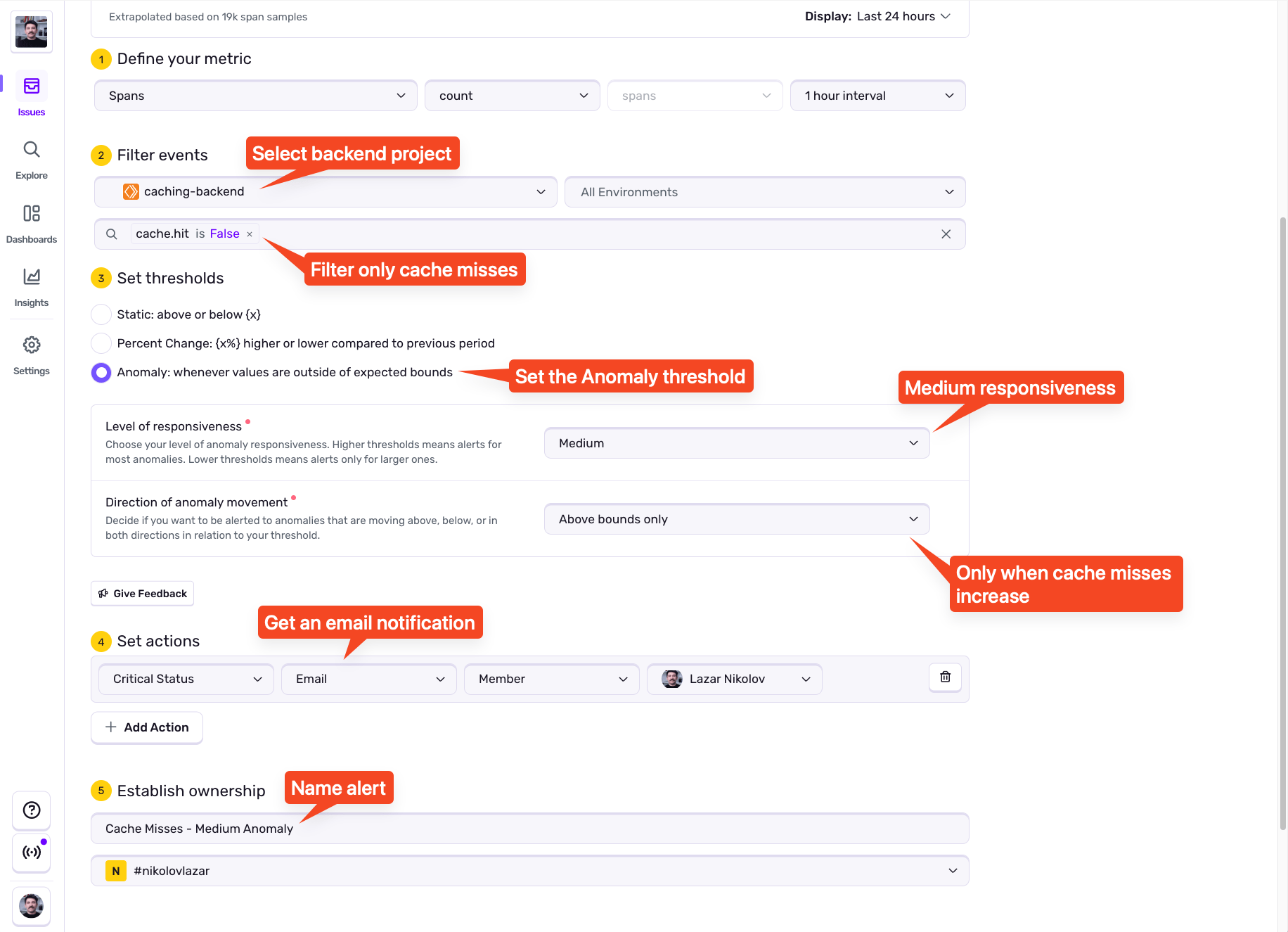

Now we can define our alert based on the number of cache miss spans coming into our Sentry account. We can define the threshold as either static (the exact number of cache misses), a percent change (e.g., a 30% increase), or set it to “Anomaly” and let Sentry figure out anomalies and alert us when they happen. For this example, I configured an alert that sends me an email when Sentry detects an anomaly in cache misses, using medium responsiveness.
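To show how the static and percent-change thresholds differ, here is a minimal sketch of the comparison each one implies. The `AlertRule` type, the `shouldAlert` helper, and all the numbers are hypothetical, and Sentry's anomaly detection is not modelled.

```typescript
// Hypothetical rule shapes; Sentry's anomaly detection is not modelled here.
type AlertRule =
  | { kind: "static"; maxMisses: number }
  | { kind: "percentChange"; maxIncreasePct: number };

// Compares the current window's miss count with the rule's threshold.
function shouldAlert(rule: AlertRule, current: number, previous: number): boolean {
  if (rule.kind === "static") return current > rule.maxMisses;
  if (previous === 0) return current > 0; // any misses after none counts as a spike
  const increasePct = ((current - previous) / previous) * 100;
  return increasePct > rule.maxIncreasePct;
}

const staticRule: AlertRule = { kind: "static", maxMisses: 500 };
const changeRule: AlertRule = { kind: "percentChange", maxIncreasePct: 30 };

console.log(shouldAlert(staticRule, 620, 400)); // true: 620 is above 500
console.log(shouldAlert(changeRule, 500, 400)); // false: a 25% increase
console.log(shouldAlert(changeRule, 620, 400)); // true: roughly a 55% increase
```

A static threshold needs a known-good baseline, whereas a percent change adapts to traffic levels but can miss a slow, steady climb.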

TL;DR

In this article we’ve demonstrated how easily caching can be broken, from large-scale incidents like the VSCode impact on npm, to subtle frontend changes like adding a typeahead feature. These examples highlight that even minor adjustments can lead to significant backend strain and increased costs if not properly monitored.

Effective observability is crucial, especially for high-stakes situations like these. Without monitoring in place and an understanding of how your application interacts with its cache, these issues can silently drain resources and rack up your server bills. By proactively detecting, diagnosing, and alerting on these caching anomalies, teams can maintain a healthy, performant, and cost-effective system.