Fetch Waterfall in React

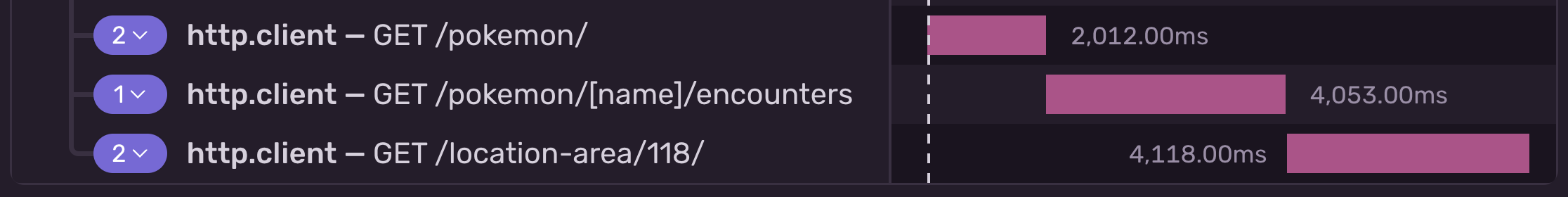

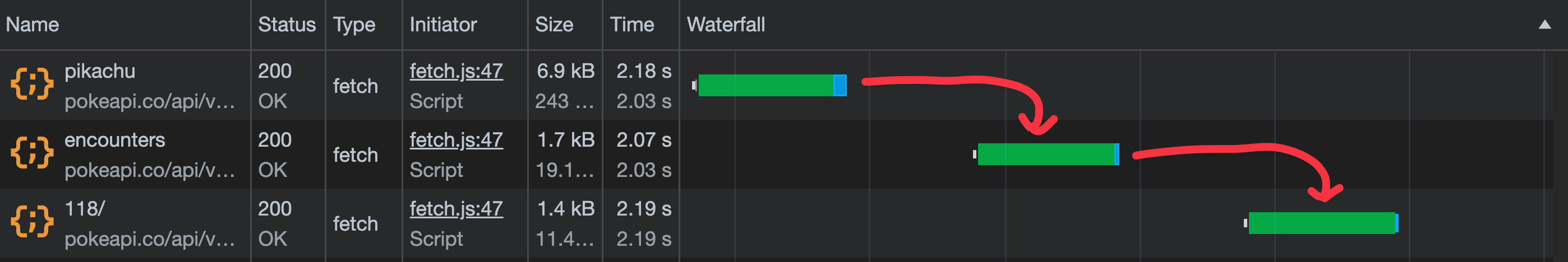

Have you seen this problem?

Or maybe this one?

You’ve most likely seen this:

    import { useEffect, useState } from "react"

    export const Component = () => {
      const [data, setData] = useState()
      const [loading, setLoading] = useState(true)

      useEffect(() => {
        // Fetch this component's own data once, after the first render.
        const fetchData = async () => {
          const res = await fetch(api)
          const json = await res.json()
          setData(json)
          setLoading(false)
        }

        fetchData()
      }, [])

      // Children are not rendered (and cannot start fetching) until this fetch is done.
      if (loading) {
        return <Spinner />
      }

      return <> ... <AnotherComponent /> ... </>
    }

Hint: they’re all the same. The first image is Sentry’s Event Details page, the second is Chrome’s Network tab, and the code snippet is what causes it.

If you can answer yes to any of these, then you need to keep reading. If not, you still need to keep reading, so your future self can thank you.

This is called a “fetch waterfall”, and it’s a common data fetching issue in React. It happens when you create a hierarchy of components that each fetch their own data and show a “Loading” state before rendering their child component, which then does the same, and so on.

A fetches its data and then renders B

B fetches its data and then renders C

C fetches its data

This would be fine if each component’s data depends on its parent’s, but that’s not always the case. If each fetch takes at least a second, then you’re looking at 3+ seconds of page load, which is considered slow by today’s standards. But why wait for 3+ seconds for data that you can get in 1 second if it’s fetched in parallel? Here’s a CodeSandbox of this issue so you can see for yourself.
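To make the arithmetic concrete, here’s a minimal sketch that simulates the A, B, C chain with timers instead of real requests. The names `fakeFetch`, `DELAY_MS`, `timeIt`, and `compare` are made up for this illustration, and the delay is shortened to 100 ms so it runs quickly.

```javascript
// Hypothetical stand-in for a network request: resolves with `name` after DELAY_MS.
const DELAY_MS = 100;
const fakeFetch = (name) =>
  new Promise((resolve) => setTimeout(() => resolve(name), DELAY_MS));

// Waterfall: each request starts only after the previous one has finished,
// which is what happens when A renders B only after A's data has arrived.
async function fetchInSeries() {
  const a = await fakeFetch("A");
  const b = await fakeFetch("B");
  const c = await fakeFetch("C");
  return [a, b, c];
}

// Parallel: all three requests start immediately and are awaited together.
async function fetchInParallel() {
  return Promise.all([fakeFetch("A"), fakeFetch("B"), fakeFetch("C")]);
}

// Measures how long an async function takes, in milliseconds.
async function timeIt(fn) {
  const start = Date.now();
  const result = await fn();
  return { result, elapsed: Date.now() - start };
}

// Runs both versions and logs the two timings side by side.
async function compare() {
  const series = await timeIt(fetchInSeries);
  const parallel = await timeIt(fetchInParallel);
  console.log(`series: ~${series.elapsed} ms, parallel: ~${parallel.elapsed} ms`);
  return { series, parallel };
}
```

Calling `compare()` should report roughly three delays for the series version and roughly one delay for the parallel version, even though both return the same data.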

Let’s explore three ways to maintain good performance, and the scenarios in which you’d want to use each one.

Using “Suspense” to avoid a fetch waterfall

Suspense is often mentioned as one possible solution to the fetch waterfall. And, yes, it does solve the issue. It triggers the whole component tree’s fetches in parallel, so the data gets fetched much faster.

React’s docs explain that only Suspense-enabled data sources will activate the Suspense component: data fetching with Suspense-enabled frameworks (like Relay and Next.js), lazy-loading component code with lazy, and reading the value of a promise with use.

Lazy-loading and the use hook are still not necessarily good solutions. Lazy-loading components won’t eliminate the fetch waterfall. It’ll behave the same way, with the “loading” fallback from Suspense as the only bonus. The use hook, at the time of writing, is only available in React’s canary version, so it hasn’t been released as stable just yet.

So, unless you’re using either Next.js or Relay, I wouldn’t advise you to use Suspense as a solution just yet.

But, if you really want to, check out this CodeSandbox example. When Suspense becomes safe to use in plain React in production, it could be a great way to maintain good performance and avoid the fetch waterfall.

Fetch data on server to avoid a fetch waterfall

I hear you, you’re saying the solution is obvious, just make that page server-side rendered, or if you use Next.js - use Server Components. Then the client receives the HTML along with the data and doesn’t have to make any data requests. No requests = no fetch waterfall!

Okay, but then would you fetch the data on the server-side like this?

const pokemon = await fetch(...)

const encounters = await fetch(...)

const locations = []

for (let encounter of encounters ) {

const location = await fetchLocation(encounter.location)

locations.push(location)

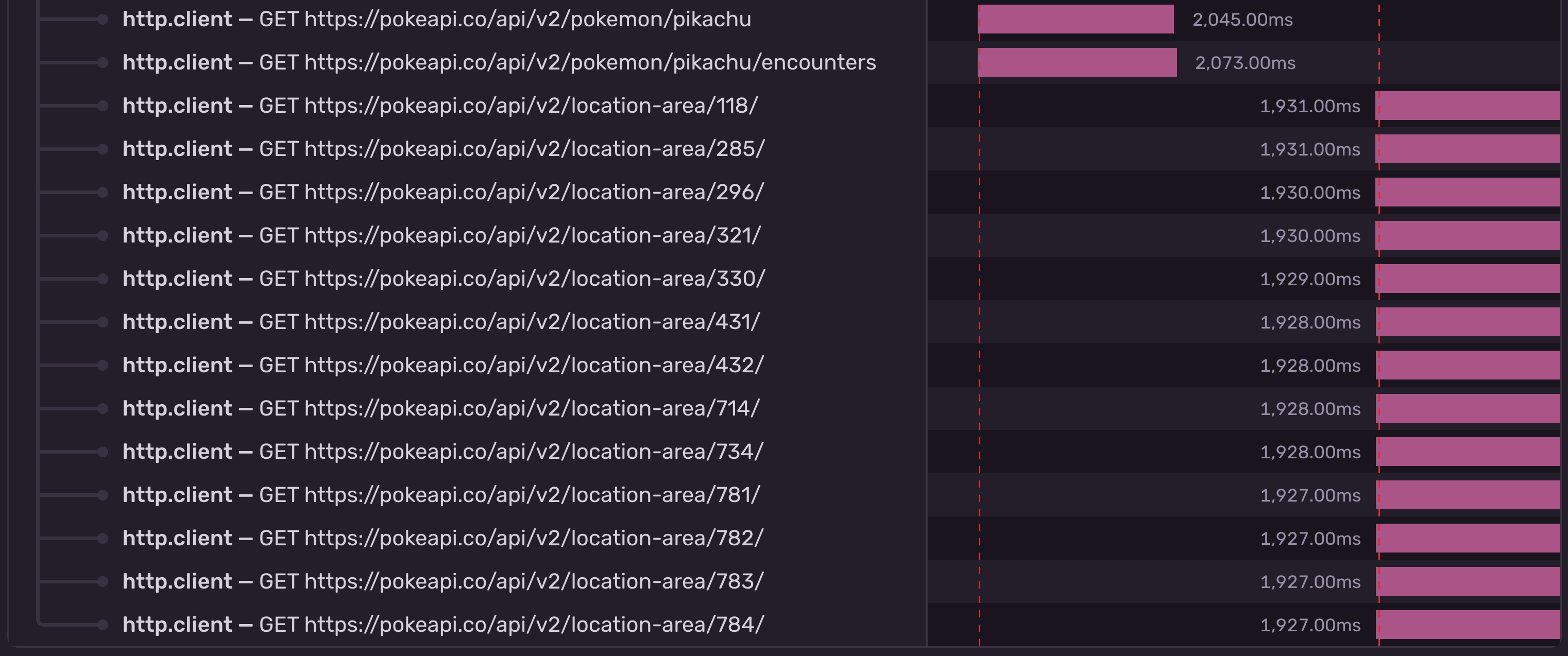

}If that was your idea, you’re just passing the ball to the server. The server will still wait to fetch all of the data in series before serving everything back to the client. This is a concurrency problem, not a rendering problem. Just fetching the data on the server won’t automatically solve it.

Yeah, that definitely looks like a waterfall 😁

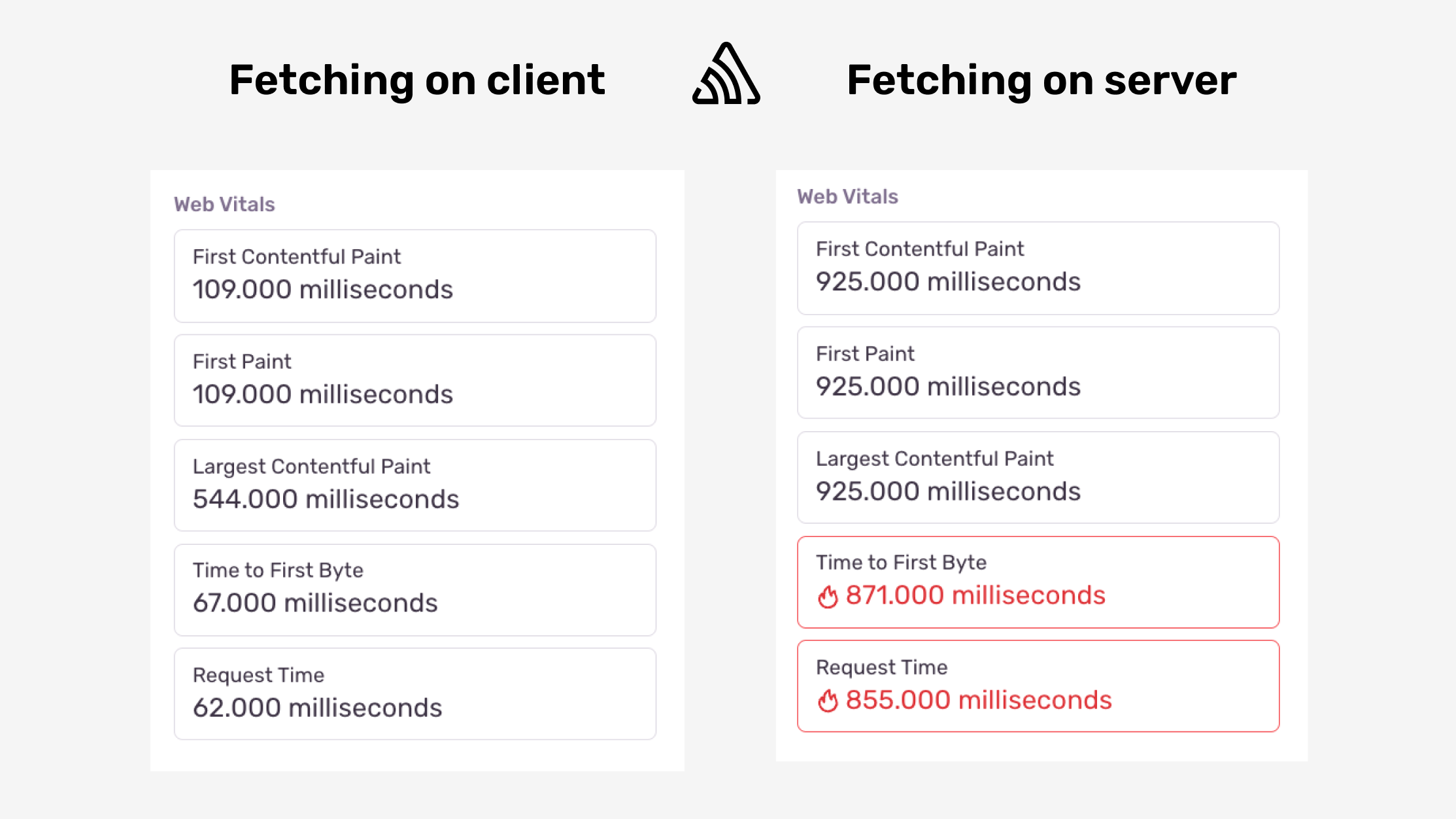

In fact, you actually made it worse. With classic SSR (or Server Components without a “loading” component), the server can’t send the page until every fetch has finished, so the TTFB is now at least as long as the time it takes to fetch all the data. And since every later loading metric, such as FCP and LCP, includes the TTFB, those get worse too!

You could fix the TTFB in Next.js by also providing a loading component, but the waterfall will still keep on falling.

Another thing to consider is that when doing SSR/Server Components, you still render on the browser. The client still needs to display the DOM it got from the server, and then hydrate it so it can “react 😉” to user interactions. And now:

You have two computers (the browser and your server) performing rendering tasks.

The server has a lot more work to do on each request.

The browser still shows a blank page to your users while it waits for the server (with SSR), and still does some rendering when it gets the response back.

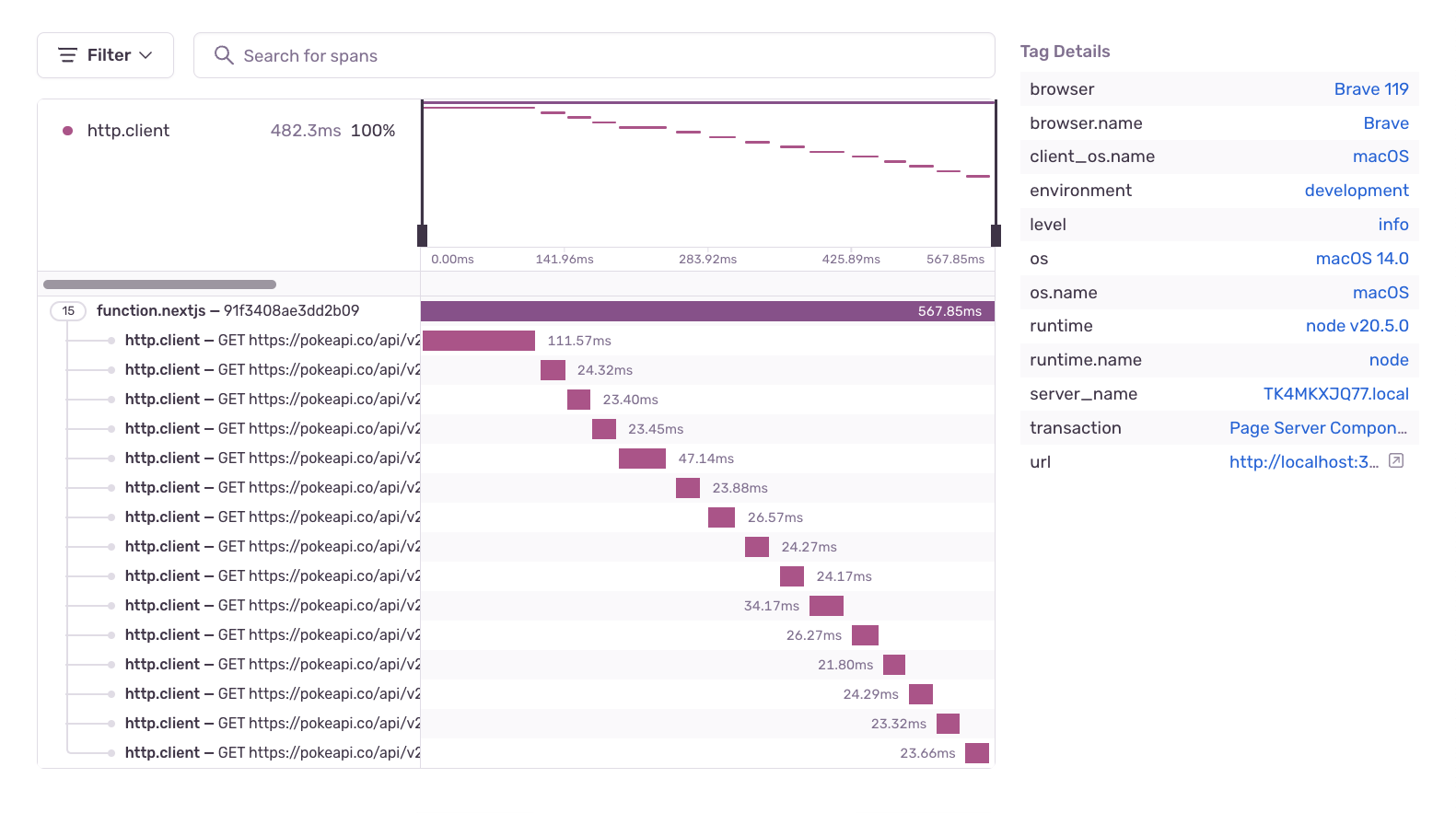

Fetching on the server is not a bad idea. But you should be aware of the tradeoffs you’re making if you’re opting in to use it. If you’re okay with the tradeoffs then make sure you fetch the data in parallel, like so:

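    // Start both independent requests at once and wait for both.
    const [pokemon, encounters] = await Promise.all([
      fetchPokemon(),
      fetchEncounters(),
    ])

    // The location requests need the encounters, but they can all start together.
    const locations = await Promise.all(
      encounters.map((encounter) => fetchLocation(encounter.location))
    )

The three fetch functions can be anything - other API requests or database calls - but they must return a Promise. Each request starts as soon as its function is called, so calling them all before awaiting anything is what makes them run in parallel. Promise.all then returns a new promise that resolves once the last of the input promises has resolved.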
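Two properties of Promise.all are worth knowing when you fetch like this: the results come back in the order of the input array rather than in completion order, and the returned promise rejects as soon as any input rejects. Here’s a small sketch of both, where the `delay` and `failAfter` helpers are made up to stand in for real requests:

```javascript
// Hypothetical helpers that stand in for real requests.
const delay = (ms, value) =>
  new Promise((resolve) => setTimeout(() => resolve(value), ms));
const failAfter = (ms, message) =>
  new Promise((_, reject) => setTimeout(() => reject(new Error(message)), ms));

async function demoPromiseAll() {
  // The slower promise is listed first, and its result still comes first.
  const ordered = await Promise.all([
    delay(60, "pokemon"),
    delay(10, "encounters"),
  ]);

  // One failing request rejects the whole batch, so handle it explicitly.
  let errorMessage = null;
  try {
    await Promise.all([
      delay(60, "pokemon"),
      failAfter(10, "encounters failed"),
    ]);
  } catch (error) {
    errorMessage = error.message;
  }

  return { ordered, errorMessage };
}
```

If partial results are acceptable for your page, Promise.allSettled is the standard alternative that waits for every input and never rejects.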

Hoisting the data fetching to avoid a fetch waterfall

Another solution to the fetch waterfall issue is hoisting the data fetching to a higher level in the component hierarchy. Instead of fetching the data in each component, you fetch it at the topmost level that needs it (the first component that would start fetching data), and pass it down the component tree.

    App
    └── DataContext.Provider
        ├── PokemonPage (useContext -> pokemon)
        └── PokemonEncounters (useContext -> encounters)
            ├── LocationDetails (useContext -> otherPokemon)
            ├── LocationDetails (useContext -> otherPokemon)
            ├── LocationDetails (useContext -> otherPokemon)
            └── ...

Fetching the data in parallel is still important in this case, so make sure you do that.

So: fetch it when the first component mounts, and then pass the data down. Does it solve the problem? Yes. Does it couple the components and introduce prop drilling? Double yes. You can solve that with a Context Provider, so all of the child components can access the part of the data they’re interested in without prop drilling.
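As a rough sketch of what the hoisted fetch could look like, the function below loads everything the page needs from one place. The provider (or the topmost component) would call it once on mount and put the result into state or context. The `api` object and its method names are assumptions for this illustration, and they return canned data here instead of making real requests. Unlike a two-step Promise.all, this version starts the location requests as soon as the encounters arrive, without also waiting for the pokemon request.

```javascript
// Hypothetical API client; a real one would call fetch() instead of returning canned data.
const api = {
  fetchPokemon: async (name) => ({ name }),
  fetchEncounters: async (name) => [
    { location: "area-1" },
    { location: "area-2" },
  ],
  fetchLocation: async (location) => ({ location, otherPokemon: [] }),
};

// Loads all data for the page in one place, so no child has to fetch on its own.
async function loadPokemonPageData(name) {
  // Start the encounters request right away.
  const encountersPromise = api.fetchEncounters(name);

  // Locations depend only on encounters, so they all start together
  // as soon as the encounters have arrived.
  const locationsPromise = encountersPromise.then((encounters) =>
    Promise.all(
      encounters.map((encounter) => api.fetchLocation(encounter.location))
    )
  );

  // The pokemon request runs alongside everything else. Passing every promise
  // to one Promise.all means a failure in any of them rejects this function.
  const [pokemon, encounters, locations] = await Promise.all([
    api.fetchPokemon(name),
    encountersPromise,
    locationsPromise,
  ]);

  return { pokemon, encounters, locations };
}
```

Each child component can then read only the slice it needs (`pokemon`, `encounters`, or one entry of `locations`) from the context value.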

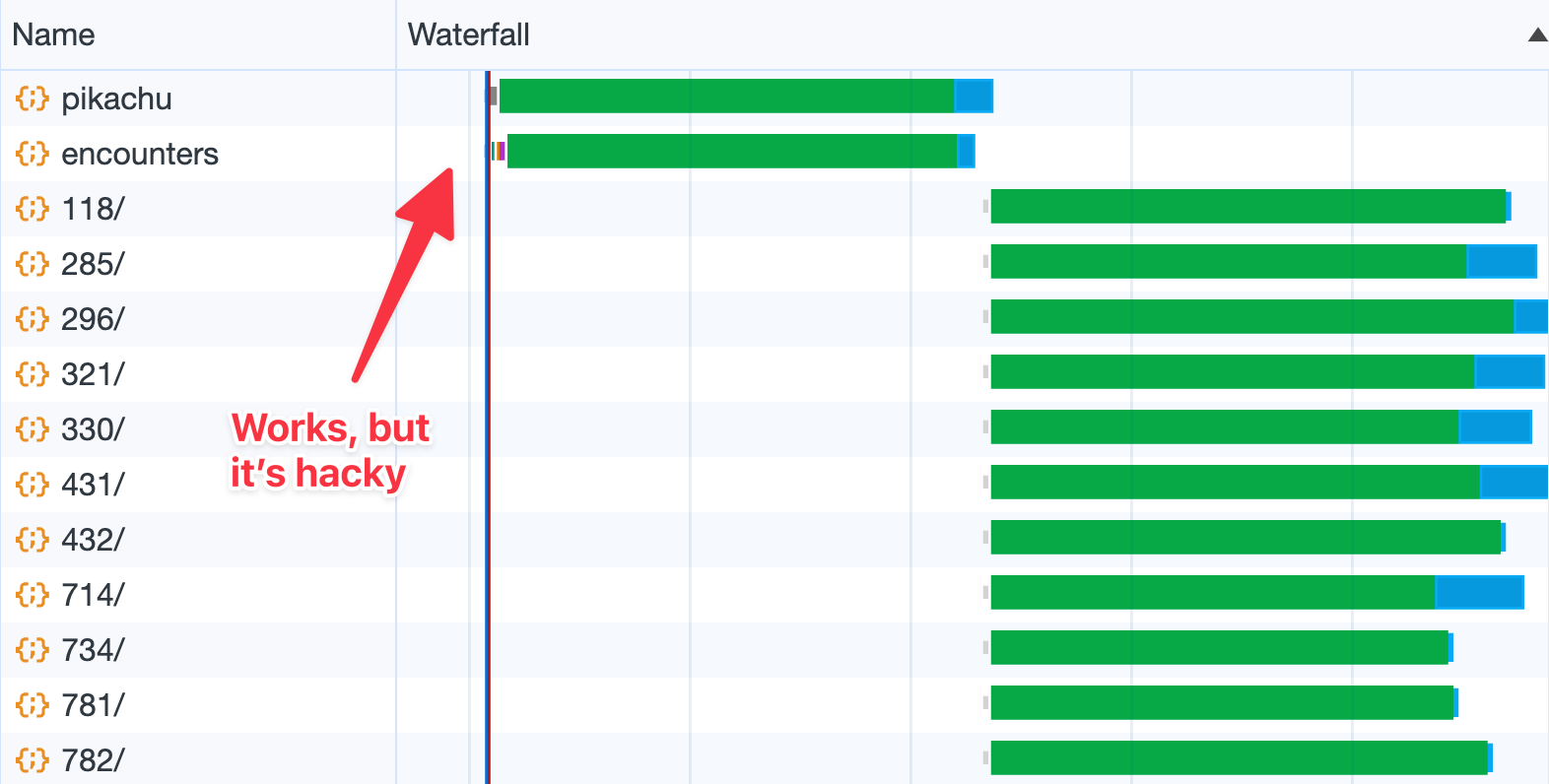

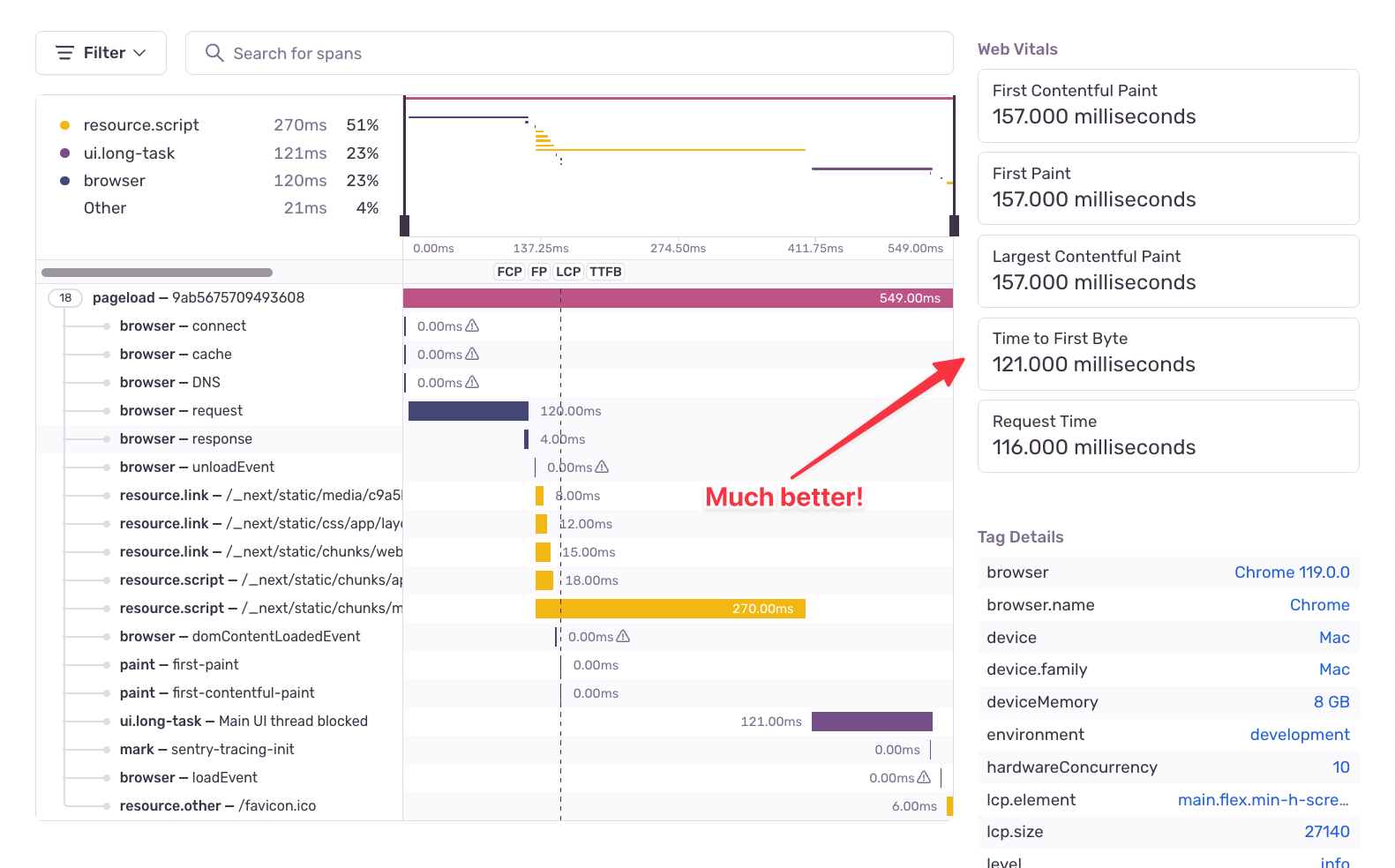

I’ve prepared a CodeSandbox example for you, if you want to see how you can implement a hoisted data fetching with a React Context. Here’s how the our requests look like now:

The /pikachu and /pikachu/encounters requests start at the same time, while the /location-area requests depend on the result of the /pikachu/encounters request, but they too start at the same time. We broke out the waterfall.

Hoisting the data fetching is probably the most universal approach you can take to eliminate the fetch waterfall. It happens on the client side, so your server does less work and timing-based web vitals like TTFB stay low. It doesn’t rely on experimental features like Suspense, and it isn’t limited to Next.js or Relay.

Whether you fetch it in a React Context, or fetch it in the parent component and pass it down through props, is up to you. It depends on what your component structure looks like, what you and your team are comfortable with, and what other libraries you use in your project.

For example, if you use TanStack Query, you can perform all the fetches and cache the results at a higher level of the component hierarchy, and then use the QueryClient in each of the components to read the data from the cache. It still counts as hoisting, and it can be an alternative if you don’t want to create a new Context. Simply make the fetches where you would put the Context Provider, and have the components read the data from the cache instead of from the provider.
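The idea behind that pattern can be sketched without any library: start each request once at a high level, keep its promise in a cache under a key, and let components ask the cache instead of the network. The `createRequestCache` helper below is a hypothetical stand-in written for this illustration. It is not TanStack Query’s API, which also handles things like staleness and retries.

```javascript
// Hypothetical minimal request cache; NOT TanStack Query's API.
function createRequestCache() {
  const entries = new Map();
  return {
    // Starts the request on first use and reuses the same promise afterwards.
    fetch(key, fetcher) {
      if (!entries.has(key)) {
        entries.set(key, fetcher());
      }
      return entries.get(key);
    },
  };
}

// Counts calls so the deduplication is observable.
let networkCalls = 0;
const fetchPokemon = async () => {
  networkCalls += 1;
  return { name: "pikachu" };
};

async function demoCache() {
  const cache = createRequestCache();

  // High in the tree: kick off the request as early as possible.
  cache.fetch("pokemon", fetchPokemon);

  // Deep in the tree: a component reads the same entry without a second request.
  const pokemon = await cache.fetch("pokemon", fetchPokemon);

  return { pokemon, networkCalls };
}
```

Because the nested component receives the promise that was already started higher up, it no longer matters when that component gets rendered: the request is not waiting on it.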

Conclusion

So what we can learn from this article is this - there are two things that you can do to cause a fetch waterfall:

Nest components that fetch their own data while conditionally rendering them.

Invoke the requests sequentially (await first, then await second, then await third and so on).

If you’re fetching the data on the client side, make sure you’re not fetching the data in each component while also conditionally rendering them. If each component fetches its own data, and you render them one after another, then they’re going to trigger their data fetches one after another too, and that’s your waterfall.

Hoisting the data fetching to a higher-level component is a good fix. Depending on your structure, you can fetch it in the parent component and pass it down through props, create a React Context if passing the data down causes prop drilling, or, if you already use a library like TanStack Query, perform the fetches at a higher level and use the QueryClient to read the results from the cache.

Just moving the data fetching to the server side doesn’t mean that you’ll fix the problem. If you’re await-ing each fetch request individually, you’ll still cause a fetch waterfall, but this time on the server side. This way the TTFB also suffers, because the client needs to wait for the server to finish all the data fetching before it can start drawing pixels. So not only did you not fix the problem, you introduced a new one.

Being mindful of how you build your app, and keeping an eye on its performance in production is how you win in this game.