Evals are just tests, so why aren’t engineers writing them?

You’ve shipped an AI feature. Prompts are tuned, models wired up, everything looks solid in local testing. But in production, things fall apart—responses are inconsistent, quality drops, weird edge cases appear out of nowhere.

You set up evals to improve quality and consistency. You use Langfuse, Braintrust, Promptfoo—whatever fits. You start running your evals, tracking regressions, fixing issues, and confidence goes up as a result. Things improve.

But now evals are living in a separate system. They’re useful, but siloed—totally decoupled from the rest of your testing strategy, with evals and results locked away in their own dedicated platform. You’ve got coverage, but no cohesion. And keeping everything in sync is one more thing on your plate.

Evals are too siloed

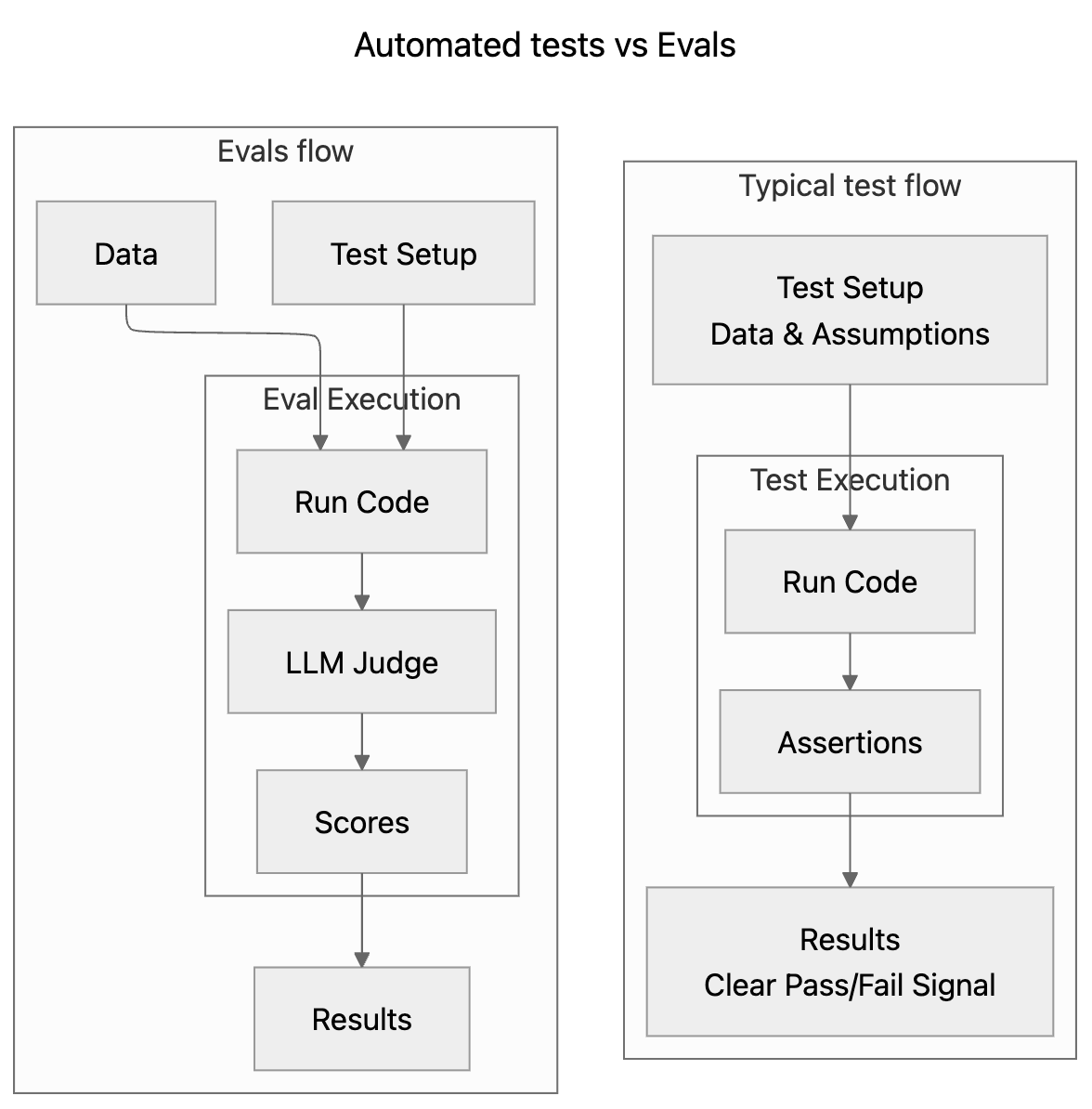

When testing, you set up data and assumptions, run your code, compare results to expectations, and get a clear pass/fail signal. Evals work exactly the same way - they just add some additional layer of scrutiny (such as an LLM judge) between your system output and the final assertion to properly evaluate the non-deterministic nature of AI responses. As a result, unlike conventional testing, you usually do not receive a simple pass/fail, but rather a percentage, confidence assessment, or other metrics.

A side-by-side comparison of eval and conventional testing workflows.
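To make the parallel concrete, here is a minimal, dependency-free sketch. The `judge` function is a hypothetical stand-in for an LLM judge: instead of prompting a second model, it only checks whether the expected answer appears in the output and returns 0 or 1. The point is the shape: an eval is a test with a scorer inserted before the assertion, and the assertion compares an average score to a threshold.

```typescript
// A conventional test: deterministic output, exact assertion, pass/fail.
function add(a: number, b: number): number {
  return a + b;
}

function conventionalTest(): boolean {
  return add(2, 2) === 4;
}

// Hypothetical stand-in for an LLM judge that scores an output from 0 to 1.
// A real judge would prompt a second model; this one only checks whether the
// expected answer appears in the output, ignoring case.
function judge(output: string, expected: string): number {
  return output.toLowerCase().includes(expected.toLowerCase()) ? 1 : 0;
}

interface EvalCase {
  input: string;
  expected: string;
}

// An eval: same setup/run/compare shape as a test, but a scorer sits between
// the output and the assertion, and the final check is score >= threshold.
async function runEval(
  cases: EvalCase[],
  task: (input: string) => Promise<string>,
  threshold: number,
): Promise<{ score: number; passed: boolean }> {
  let total = 0;
  for (const c of cases) {
    const output = await task(c.input);
    total += judge(output, c.expected);
  }
  const score = cases.length === 0 ? 0 : total / cases.length;
  return { score, passed: score >= threshold };
}
```

With a stubbed task that answers one of two questions correctly, `runEval` reports a score of 0.5, which fails a 0.6 threshold: a percentage first, then a pass/fail derived from it.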

Eval platforms are great at assessing LLM output, and offer robust tools to evaluate that output’s correctness and consistency. But as the above image shows, evals feel very similar to conventional testing because they are just tests. And yet, somehow, we’ve convinced ourselves that they need to live in special platforms, run in isolated environments, and work in a way that’s totally divorced from the developer workflow. This is broken for several reasons:

You can’t iterate quickly. When your evals live in an external platform, they’re challenging to run locally as part of your development cycle. You have to push changes, wait for the eval platform to run, then check results. This kills your development velocity.

You lose visibility. When eval results don’t show up in your CI/CD pipeline, you don’t know if your changes are improving or degrading your AI’s performance until it’s too late. You might merge a breaking change without even knowing it.

You can’t use your existing infrastructure. You already have tools for test reporting, code coverage, and CI/CD. But eval results may not integrate with any of them.

You create organizational friction. Since evals live on a platform outside the core developer experience, maintaining and monitoring them ends up falling on product managers. This creates a broken workflow: engineers ship changes, and PMs discover afterward that the LLM's performance has degraded as a result (or worse, users give your PMs negative feedback on your new feature). PMs then cajole engineers into course-correcting. If evals were integrated into engineers' workflow, engineers could catch these issues before they ever get merged.

You create reporting chaos. When evals produce complex scores without clear pass/fail criteria, you end up with custom post-processing code, confusing aggregated metrics, and stakeholders who can’t understand what “50% performance” actually means. You need a PhD in statistics just to explain your AI’s performance to leadership.

How should evals work?

Evals should work like any other test, so teams can get all the benefits of traditional testing: fast iteration, clear visibility, and seamless integration with your development workflow. You can run evals locally during development. You can see eval results in your pull requests. You can integrate eval reporting with tools like Codecov.

Most importantly, you can treat AI quality the same way you treat code quality - as something that’s measured, tracked, and improved as part of your normal development process.

Here’s what we're doing to fix it

At Sentry, we’ve been working on fixing this problem. Here’s what we’ve built so far:

vitest-evals: Evals that look like tests

David Cramer built vitest-evals to solve exactly this problem. It lets you write evals that look and feel like regular unit tests:

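```typescript
import { describeEval } from "vitest-evals";
import { Factuality } from "autoevals";
import { openai } from "@ai-sdk/openai";
import { generateText } from "ai";

describeEval("capitals", {
  // The dataset: each case pairs an input with the expected answer.
  data: async () => {
    return [
      {
        input: "What is the capital of France?",
        expected: "Paris",
      },
    ];
  },

  // The task under test: send the input to the model and return its answer.
  task: async (prompt: string) => {
    const model = openai("gpt-4o");
    const { text } = await generateText({
      model,
      prompt,
    });
    return text;
  },

  // Factuality (from autoevals) uses an LLM judge to compare the model's
  // output against the expected answer.
  scorers: [Factuality],

  // The score threshold the eval must meet to pass.
  threshold: 0.6,
});
```

This looks like a test because it is a test: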

It runs in your test suite.

It integrates with your existing CI/CD pipeline.

It reports clear results that are easy to interpret.

You can run it locally, debug it, iterate on it. It’s part of your normal development workflow.
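To get those results in front of CI tooling, one option (our suggestion, not something prescribed above) is Vitest's built-in JUnit reporter. This sketch assumes `describeEval` registers its cases as ordinary Vitest tests, which is how it runs in your test suite in the first place; the output path `./junit.xml` is an arbitrary example.

```typescript
// vitest.config.ts
import { defineConfig } from "vitest/config";

export default defineConfig({
  test: {
    // Keep the normal console output and also write JUnit XML for CI tools.
    reporters: ["default", "junit"],
    // Example path; point your CI upload step at this file.
    outputFile: { junit: "./junit.xml" },
  },
});
```

The same result is available ad hoc from the command line with `npx vitest run --reporter=junit --outputFile=./junit.xml`.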

Standardized output formats

We’ve also created a proof-of-concept output standard based on JUnit that any eval tool can use. By exporting eval results as JUnit XML, we can integrate with any tool that understands test results - which is basically every CI/CD and reporting tool in existence. No more custom dashboards, no more locked-in reporting, no more confusion about what the numbers mean.
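The schema of the proof-of-concept standard isn't spelled out here, so the following is only an illustrative sketch of the general idea, not Sentry's format. Each eval case becomes a JUnit `<testcase>`, its score and threshold are attached as `<property>` elements (our assumption), and a case scoring below its threshold gets a `<failure>` element, so any JUnit consumer sees a plain pass/fail. `EvalResult` and `toJUnitXml` are hypothetical names.

```typescript
// Hypothetical shape of one scored eval case.
interface EvalResult {
  name: string;      // identifies the case, e.g. the input prompt
  score: number;     // scorer output, e.g. in [0, 1]
  threshold: number; // minimum score for the case to count as passing
}

// Escape XML special characters so arbitrary prompt text is safe in attributes.
function escapeXml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical exporter: one <testsuite> per eval, one <testcase> per case.
function toJUnitXml(suite: string, results: EvalResult[]): string {
  const suiteName = escapeXml(suite);
  const failures = results.filter((r) => r.score < r.threshold).length;
  const cases = results.map((r) => {
    // Keep the raw score visible to tools that read testcase properties.
    const props =
      `<properties><property name="score" value="${r.score}"/>` +
      `<property name="threshold" value="${r.threshold}"/></properties>`;
    // Below-threshold cases become ordinary JUnit failures.
    const failure =
      r.score < r.threshold
        ? `<failure message="score ${r.score} below threshold ${r.threshold}"/>`
        : "";
    return `    <testcase classname="${suiteName}" name="${escapeXml(r.name)}">${props}${failure}</testcase>`;
  });
  return [
    `<?xml version="1.0" encoding="UTF-8"?>`,
    `<testsuites>`,
    `  <testsuite name="${suiteName}" tests="${results.length}" failures="${failures}">`,
    ...cases,
    `  </testsuite>`,
    `</testsuites>`,
  ].join("\n");
}
```

Keeping the score as a property rather than discarding it means the binary verdict drives CI, while the underlying number is still there for anyone who wants it.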

For example, once the standard was developed, it was trivial for a Sentry engineer to write an exporter for Langfuse that exports eval results as JUnit-compatible XML.

Integration with existing tools

To take this a step further, as a proof of concept, we updated Codecov to ingest this JUnit XML output. These results can now be uploaded to Codecov, processed, and stored, and once uploaded, summarized results can be fetched via our public API.

Real-world results

We’ve seen this work in practice. When we built Sentry’s AI PR Review feature, we started with complex metrics (per item) like content_match: 0.82, location_match: 0.9, noise: 2, bugs_not_found: 2 that were impossible to communicate clearly. We transformed this into a simple did_item_pass score that could be exported as JUnit XML and integrated with our existing test analytics. Suddenly, instead of explaining multiple confusing metrics, we could simply say “the system is successful in 40.32% of our dataset.”
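The exact rule used to turn those per-item metrics into `did_item_pass` isn't given here, so the cutoffs below are invented purely for illustration. The sketch shows the technique itself: collapse several metrics into one boolean per item, then report the share of the dataset that passes as a single percentage.

```typescript
// Per-item metrics named above; value ranges are our assumption.
interface ItemMetrics {
  content_match: number;  // similarity score, 0..1
  location_match: number; // similarity score, 0..1
  noise: number;          // count of spurious findings
  bugs_not_found: number; // count of missed bugs
}

// Hypothetical pass rule; these cutoffs are illustrative, not Sentry's.
function didItemPass(m: ItemMetrics): boolean {
  return (
    m.content_match >= 0.8 &&
    m.location_match >= 0.8 &&
    m.noise <= 1 &&
    m.bugs_not_found === 0
  );
}

// Percentage of items that pass, rounded to two decimal places.
function successRate(items: ItemMetrics[]): number {
  if (items.length === 0) return 0;
  const passed = items.filter(didItemPass).length;
  return Math.round((passed / items.length) * 10000) / 100;
}
```

Under these made-up cutoffs, the example item above (`noise: 2`, `bugs_not_found: 2`) fails, and a dataset where two of three items pass reports 66.67%.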

The future of AI testing

This is just the beginning. When evals work like tests, you can:

Run evals as part of your normal test suite

See eval results in your pull requests

Track eval performance over time

Integrate eval reporting with your existing tools

Build AI systems with the same confidence you have in the rest of your production systems.

We don't want to replace existing eval tools. We want to make evals work the way tests work - as an integrated part of your development process, rather than a separate system you have to manage.

Evals are just tests. Let’s start treating them that way.