The metrics product we built worked — But we killed it and started over anyway

Two years ago, Sentry built a metrics product that worked great on paper. But when we dogfooded it, we realized it was not what our customers really needed. Two weeks before launch, we killed the whole thing. Here’s what we learned, why classical time-series metrics break down for debugging modern applications, and how we rebuilt the system from scratch.

Why our first metrics product was not good

We set out to build a metrics product for developers more than two years ago, before I even joined Sentry. We ended up following the path most observability platforms take: pre-aggregating metrics into time series. This approach promised to make tracking things like endpoint latency or request volume efficient and fast. And our team succeeded; tracking an individual metric was fast and cheap.

But we have a culture of dogfooding at Sentry, and as we put the system to real use, we started to feel the pain. It’s the same problem that plagues every similarly designed metrics product: the age-old Cartesian-product problem. In traditional metrics, a new time series has to be stored for every unique value of an attribute you add (e.g. for every individual server name you store under the “server” attribute). And if you want multiple attributes, you have to store a series for every combination of their values. All of this comes from one fundamental issue: when you pre-aggregate, you need to define your questions up front.
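To make the combinatorics concrete, here is a minimal sketch of a pre-aggregating store. It is not how Sentry's first metrics product or any particular vendor is implemented; `PreAggregatedStore`, `record`, and `series_count` are hypothetical names, and the attribute values are made up.

```python
from collections import defaultdict


class PreAggregatedStore:
    """Toy pre-aggregating metrics store (hypothetical, for illustration only)."""

    def __init__(self, bucket_seconds=1):
        self.bucket_seconds = bucket_seconds
        # series key -> {bucket start -> [count, sum]}
        self.series = defaultdict(lambda: defaultdict(lambda: [0, 0.0]))

    def record(self, name, value, timestamp, **attributes):
        # One series per metric name plus unique combination of attribute values.
        key = (name, tuple(sorted(attributes.items())))
        bucket = int(timestamp // self.bucket_seconds) * self.bucket_seconds
        slot = self.series[key][bucket]
        slot[0] += 1
        slot[1] += value

    def series_count(self):
        return len(self.series)


store = PreAggregatedStore()
for i in range(1000):
    store.record(
        "endpoint.latency",
        value=12.5,
        timestamp=i * 0.01,
        server=f"web-{i % 8}",              # 8 distinct values
        sub_type=f"type-{(i // 8) % 12}",   # 12 distinct values
    )

print(store.series_count())  # 8 * 12 combinations, each its own series
```

Two modest attributes already produce 96 series for a single metric, and any attribute that was not passed to `record` at write time cannot be used to break the data down later.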

Now sometimes this isn’t a big issue. If you’re just tracking memory usage on 30 servers, or CPU% of each of the 16 cores on those servers, it’s all good.

But the problem is that Sentry’s goal is to give developers rich context to debug and fix code when it breaks. To this end, we know developers love to add lots of context/attributes to make it easier to understand what’s going on. But when the extra context explodes the cost, it incentivizes developers to not track all the context they want. Our engineers dogfooding our product continuously found themselves stuck between a rock and a hard place… Not good.

Cardinality is a harsh mistress

Let’s look at an example. Assume that each individual time series costs $0.01/mo. (That’s the right order of magnitude for the industry.) And let’s say you are tracking the latency of a specific endpoint called 100,000 times/day. Reasonable situation. Now let’s say one developer is interested in which (of 8) servers it’s being served on and adds an attribute for that. And another is interested in which (of 200) customer accounts it’s for. Then another wants to know which (of 12) sub-request types it is. Then the kicker: a developer wants to know which (of 5,000) users it’s for. I’ll take your guesses for what the cost is up to… OK, did you guess ~$1M/mo? Yes, that’s the real number: 8 × 200 × 12 × 5,000 is 96 million possible series, or about $960,000/mo.
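The arithmetic behind that figure can be checked with a short script. This is a sketch under the example's own assumptions ($0.01 per series per month and the four cardinalities quoted above); it computes the worst case where every combination of values actually occurs, and the function names are made up for illustration.

```python
from math import prod

COST_PER_SERIES_PER_MONTH = 0.01  # dollars; the price assumed in the example

# Distinct values per attribute, taken from the example above.
attribute_cardinalities = {
    "server": 8,
    "customer_account": 200,
    "sub_request_type": 12,
    "user": 5_000,
}


def worst_case_series(cardinalities):
    """Upper bound on series: every combination of attribute values occurs."""
    return prod(cardinalities.values())


def monthly_cost(cardinalities, cost_per_series=COST_PER_SERIES_PER_MONTH):
    return worst_case_series(cardinalities) * cost_per_series


# Add the attributes one at a time to watch the cost multiply.
running = {}
for name, cardinality in attribute_cardinalities.items():
    running[name] = cardinality
    print(f"+ {name:<17} {worst_case_series(running):>12,} series  ${monthly_cost(running):>12,.2f}/mo")
```

Each new attribute multiplies the bill rather than adding to it, which is why the last one (users) turns a few hundred dollars into something close to a million.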

And, honestly, this is an easy example. It’s not unusual for a developer to want to capture 10-20 attributes.

Of course you can play games like avoiding certain combinations of attributes to keep things in check, but the management of it all is a pain. Long story short, we became convinced that we were about to ship a bunch of painful tradeoffs to our users that would make it hard for them to succeed, especially given Sentry’s mission of providing the richest possible debugging context. And ultimately it was me, just two weeks before our planned ship date (and two weeks after I joined), who decided to pull the plug on the product. I was not popular.

Didn’t we see this coming?

I know what you’re saying: Are you guys dumb? Surely this was totally predictable. You’re right. When we started the metrics project, there were significant debates up front. And indeed, the original vision was about trying to connect our metrics to the existing tracing telemetry that our users have. But in a series of scope drifts, bad communications, and fears about cost structure and being different, we ended up making some sacrifices. It was a painful lesson we had to re-learn: When building a new product you have to make sure to be very clear up front about the vision, and diligent that you’re not compromising or straying from it as you build.

Why was the vision “trace connected”?

Wait, you just said the problem was the cost blowup. What’s this about connecting to traces? Well, dear reader, the problems with v1 went even deeper…

Let’s put aside all considerations about cardinality and cost. Assume infinite compute. The other problem with time series is that taking a bunch of increment() or gauge() calls in your code and aggregating them to 1-second granularity sucks, because it destroys the connection between data and code. You can graph the metrics over time, sure, but that graph lives in a parallel universe. It is connected to your code (and your traces, logs, errors, etc.) only indirectly via timestamp.
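A small sketch shows what gets lost. The data and the `aggregate_per_second` helper below are hypothetical and not taken from any real SDK; the point is only that once calls are collapsed into 1-second buckets, the trace that produced a given value is no longer recoverable from the aggregate.

```python
from collections import defaultdict

# Hypothetical raw calls: (timestamp in seconds, value, trace_id).
calls = [
    (100.10, 20.0, "trace-a"),
    (100.45, 25.0, "trace-b"),
    (100.90, 900.0, "trace-c"),  # the slow request we would like to find
    (101.20, 22.0, "trace-d"),
]


def aggregate_per_second(calls):
    """Collapse calls into 1-second buckets, keeping only count, sum, and max."""
    buckets = defaultdict(lambda: {"count": 0, "sum": 0.0, "max": float("-inf")})
    for timestamp, value, _trace_id in calls:  # the trace ID is dropped here
        bucket = buckets[int(timestamp)]
        bucket["count"] += 1
        bucket["sum"] += value
        bucket["max"] = max(bucket["max"], value)
    return dict(buckets)


buckets = aggregate_per_second(calls)
print(buckets[100])  # the spike is visible, but not which trace caused it

# The aggregate only offers a time window to go search other systems with.
candidates = [trace_id for timestamp, _value, trace_id in calls if 100 <= timestamp < 101]
print(candidates)
```

With three calls in the bucket the search is easy; at hundreds of calls per second, the same time window returns hundreds of candidate traces.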

And that’s a problem, because, again, our goal isn’t to give developers dashboards, it’s to give them context—direct, actionable, connected context—so when something goes wrong, they can see why.

Correlating indirectly via timestamp simply doesn’t do that very well. You look at a spike in latency, or an increase in error rate, and then jump over to a separate system, hunting through traces or logs, trying to reconcile timelines. This kind of “roughly around that time” debugging makes you pull your hair out, and is the (other) Achilles’ heel of traditional metrics.

Can our customers afford the best?

So what would a system look like that didn’t blow up in cardinality, and didn’t aggregate to 1-second granularity?

Dave, you say, I know where you are going, and it sounds expensive… Yes, let’s talk about what it costs to keep all the raw data and aggregate it on the fly, and the tradeoffs.

But, first, a bit of context: most observability and analytics systems have moved away from pre-aggregation and toward raw-event storage with on-demand aggregation. This has been enabled by cheaper storage, massively parallel compute, and the rise of columnar query engines. It’s been a mega trend that started in the mid-2000s with Hadoop, and has slowly swept across the industry ever since. Logs, tracing, BI (do you remember OLAP cubes?), even security are all domains where early tools relied heavily on pre-defined aggregations and now almost exclusively store raw data and analyze it on demand. Is it metrics’ time?

Let’s use the previous example for endpoint latency. Sorry, more math… Let’s say that the attributes we used in the example above (server, customer account, sub-request type, user) amount to 250 bytes of raw data per event. And let’s just use the price of Sentry logs as a cost proxy ($0.50/GB/mo). At the stated rate of 100,000 times/day, that is about 0.75 GB a month, so we are talking a cost of, drumroll… $0.37/mo. Yes, that’s right. It’s way more ($0.36/mo more) than it costs to track an individual time series, but way less (~$1M/mo less) than if you wanted to track those four attributes too. The lesson is: though your metric vendor’s sales rep thinks otherwise, you really don’t want to be on the wrong side of an exponential.
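Here is the same estimate as a small function, in case you want to plug in your own numbers. It is a back-of-the-envelope sketch: the 250 bytes and $0.50/GB/mo come from the example, while the 30-day month, decimal gigabytes, and the function name are my assumptions.

```python
BYTES_PER_GB = 1_000_000_000  # decimal gigabytes, an assumption


def raw_event_cost_per_month(events_per_day, bytes_per_event=250,
                             price_per_gb_month=0.50, days_per_month=30):
    """Monthly cost of storing every event, under the example's assumptions."""
    gigabytes = events_per_day * days_per_month * bytes_per_event / BYTES_PER_GB
    return gigabytes * price_per_gb_month


cost = raw_event_cost_per_month(100_000)
print(f"${cost:.3f}/mo")
```

Note that the number of attributes only enters through `bytes_per_event`: adding a fifth or tenth attribute makes each event a little bigger instead of multiplying the number of things you pay for.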

Now, the astute among you probably realize that the 100,000 times/day is a key variable here as well. Traditional metrics don’t scale in cost with call volume, but raw-data based metrics do. So, if you have a metric that fires 1M, or 10M times per day, we’re talking about $3.70, or $37/mo if you want to keep everything. Which is a lot for 1 metric, but honestly, if you have an important user-facing endpoint firing 10M times per day, congratulations! And if you want to limit costs, you can always sample (my personal go-to), or just find a higher-level aggregate counter to log.
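For the sampling option, one common approach is to keep each event with a fixed probability and store a weight alongside it so totals can still be estimated. The sketch below illustrates that general idea with made-up data and hypothetical function names; it is not a description of how Sentry samples.

```python
import random


def sample_events(events, sample_rate, rng):
    """Keep each event with probability sample_rate and attach a weight of 1/sample_rate."""
    kept = []
    for event in events:
        if rng.random() < sample_rate:
            kept.append({**event, "weight": 1.0 / sample_rate})
    return kept


def estimated_count(sampled):
    """Estimate the original event count by summing the weights."""
    return sum(event["weight"] for event in sampled)


rng = random.Random(42)  # seeded so the sketch is repeatable
events = [{"latency_ms": rng.uniform(5, 50)} for _ in range(100_000)]
sampled = sample_events(events, sample_rate=0.1, rng=rng)

print(len(sampled), "events stored")
print(round(estimated_count(sampled)), "events estimated")
```

Storage cost drops roughly in proportion to the sample rate, the estimated totals stay close to the truth, and every event that is kept still carries its full set of attributes.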

What’s that, you hate sampling or anything lossy? Well, I hate to break it to you, but if you have an endpoint firing 10M/day, it’s firing 100+ times per second on average, and that 1-second-bucket time series is already aggregating a ton of detail away anyway.

Starting over with trace-connected telemetry

Back to our story. We pulled the plug. The next steps were straightforward, but ended up taking us a while. We went back to the drawing board to build event-based metrics.

That decision actually led to a much deeper rearchitecture at Sentry, and to the creation of a new, generic telemetry analytics system we internally call the Event Analytics Platform (EAP). It’s based on ClickHouse, which is an excellent modern columnar store. We’ve given a couple of talks about it, but this work probably deserves its own blog post. We first used EAP to power up our existing tracing product, building new slicing and querying capabilities. We next used it to deliver our recently shipped logging product. Now, more than 18 months after pulling the plug on metrics 1.0, it’s backing our next-generation Metrics product.

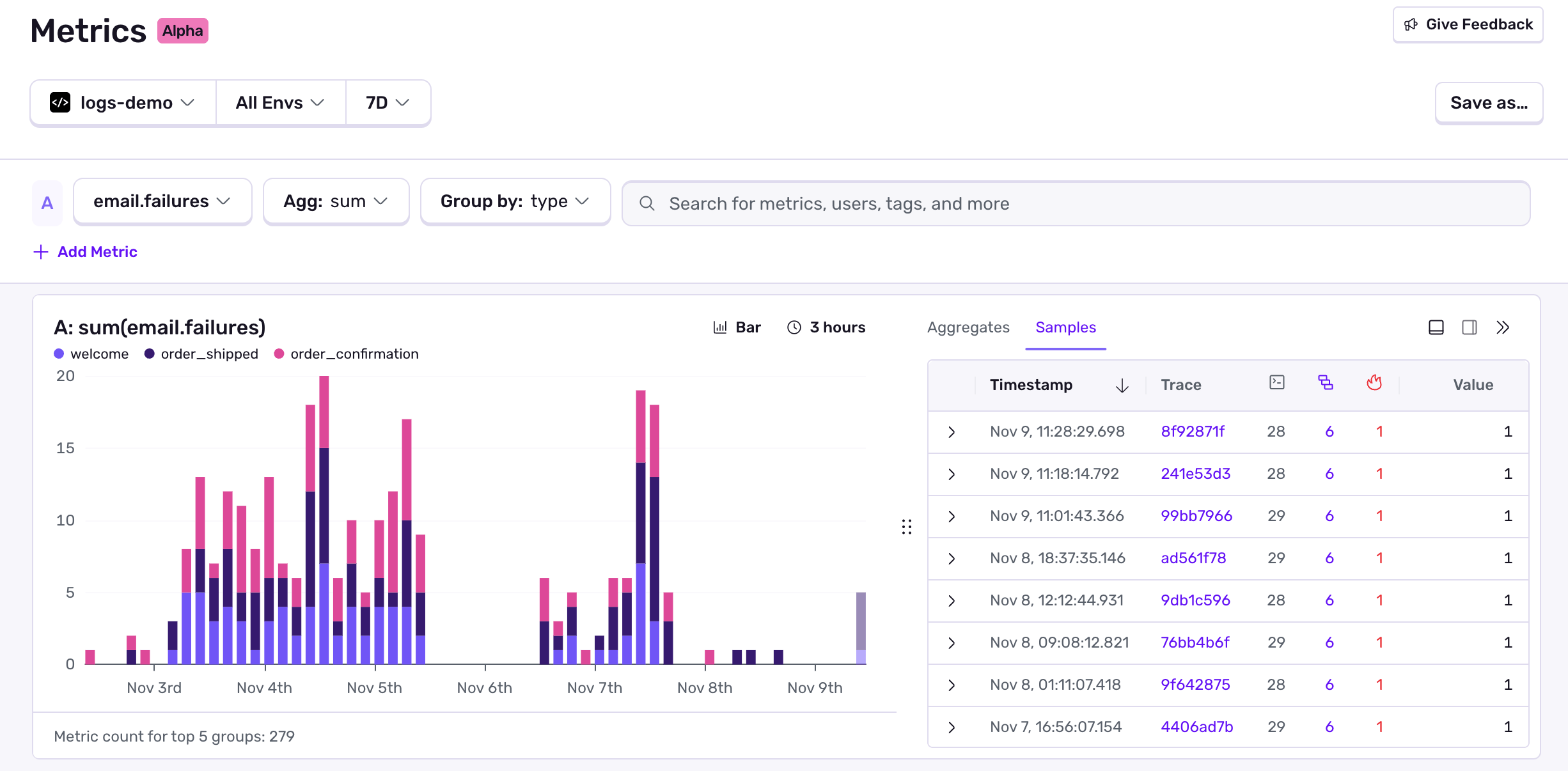

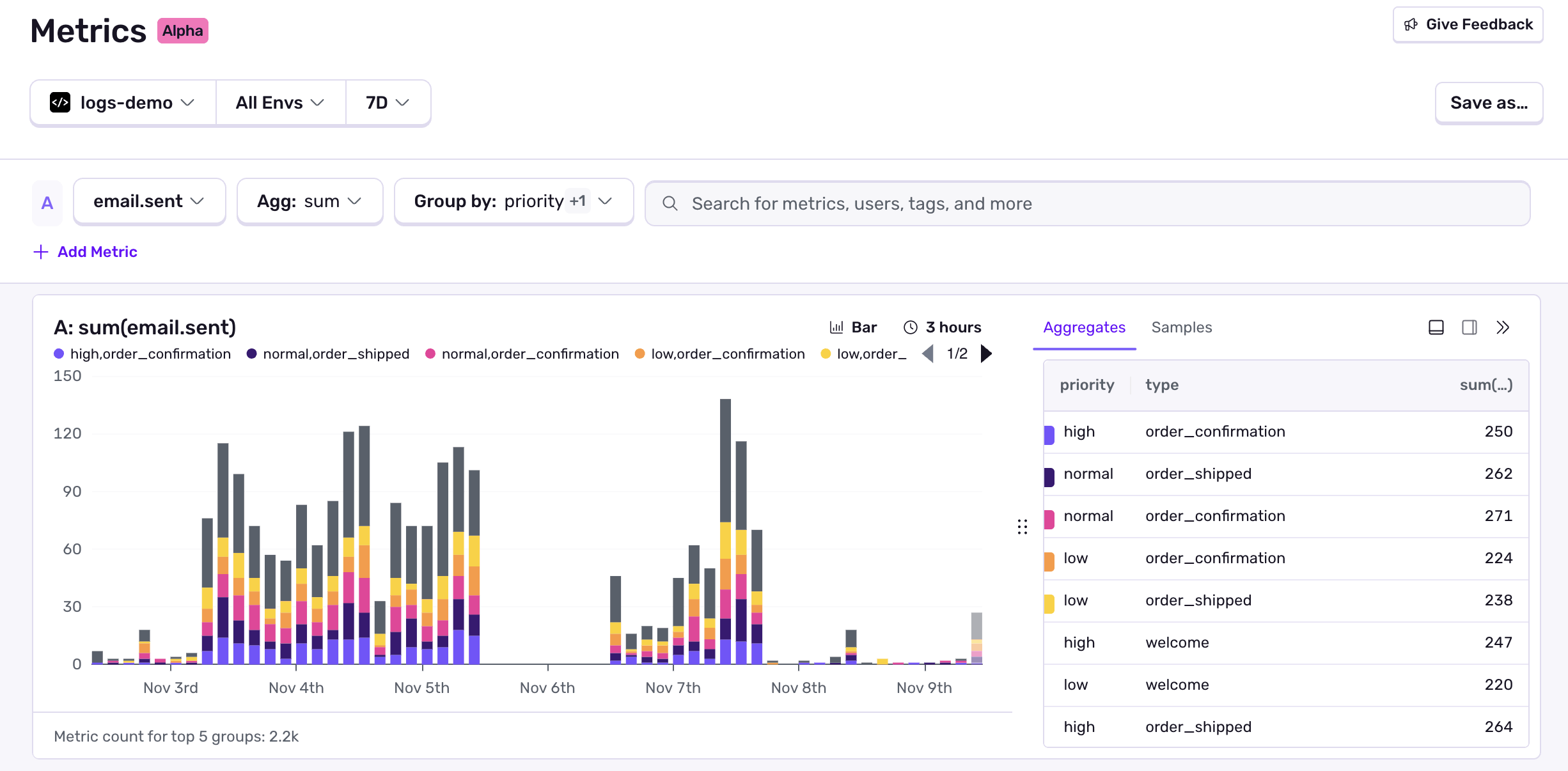

What’s new about Metrics this time around is that every metric event is stored independently in EAP and connected to its trace ID. This solves both the cardinality problem and the connectedness problem. It means that we can slice and dice metrics dynamically and link them to other telemetry. It also means that you can keep adding more and more contextual tags, even ones with super high cardinality, without fear of blowing up costs.
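In miniature, the event-based model looks something like the sketch below. This is an in-memory illustration only, not EAP's schema or a ClickHouse query; `MetricEvent`, `max_by`, `slowest_trace`, and all the sample values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class MetricEvent:
    name: str
    value: float
    timestamp: float
    trace_id: str
    attributes: dict = field(default_factory=dict)


events = [
    MetricEvent("checkout.latency", 40.0, 100.1, "trace-a", {"server": "web-1", "user": "u1"}),
    MetricEvent("checkout.latency", 45.0, 100.4, "trace-b", {"server": "web-2", "user": "u2"}),
    MetricEvent("checkout.latency", 950.0, 100.9, "trace-c", {"server": "web-2", "user": "u3"}),
]


def max_by(events, name, attribute):
    """Group by any attribute at query time; nothing was aggregated at write time."""
    result = defaultdict(lambda: float("-inf"))
    for event in events:
        if event.name == name and attribute in event.attributes:
            key = event.attributes[attribute]
            result[key] = max(result[key], event.value)
    return dict(result)


def slowest_trace(events, name):
    """Follow the worst data point straight to the trace that produced it."""
    worst = max((e for e in events if e.name == name), key=lambda e: e.value)
    return worst.trace_id


print(max_by(events, "checkout.latency", "server"))
print(slowest_trace(events, "checkout.latency"))
```

Because the grouping attribute is chosen when the question is asked, a high-cardinality tag like `user` costs a few extra bytes per event rather than a new series per value, and the link to the trace is a field lookup rather than a timestamp search.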

Metrics with context

We’ve been back to dogfooding, and this time we’re pretty happy. The trace-connected model unlocks debugging workflows that weren’t possible before. Instead of jumping between dashboards and guessing at time correlations, you can follow the data directly:

See a spike in checkout failures? Jump from that metric to the exact trace where a failure happened; jump from the trace to an attached Sentry error.

Notice an increase in retries? Break down the metrics by span to see which service is at fault.

Watching p95 latency climb? Drill into the worst offenders and find related session replays to see what the user is experiencing.

Metrics about your application

What about infra monitoring? Well, we expect some of our users will do it, but we’re not really pushing that use case for Sentry metrics. This point created a good deal of internal discussion since it’s so hard to decouple the idea of metrics from the idea of infrastructure. And time-series pre-aggregation is sooo tempting for infra because machine-level metrics are already disconnected from your code, and it could be cheap, and, look, this simple little daemon can slurp up all of these signals, and, look, charts!

I won’t lie, we use infra metrics, and we know they aren’t going away. But for us, metrics are much more powerful when they are application-level signals, connected to the underlying code that produced them. Traditional CPU and memory graphs have their place, but modern developers, especially ones building on high-level platform abstractions, care less about infra and more about higher-level application health: login failures, payment errors, request latencies. Those are the metrics that tell you what’s really happening to your users.

As we add modern metrics to our own product, we find that we are using the dashboards-of-1000-time-series a lot less, and I think you will too.

Built for Seer

Since I’m officially a CTO, I’d be remiss to go an entire blog post without mentioning AI. And, even though some of our efforts were just getting going when we made the call to reboot metrics, it turns out it was the right call for our AI debugging ambitions as well.

If you don’t know, we have an agent we call Seer built into Sentry. It root-causes issues, proposes fixes, and will do even more soon. Behind the scenes, Seer uses tool calls to traverse all of the connected telemetry in Sentry: errors, traces, logs, and now metrics. What we keep finding in our testing is that data volume (a million examples) isn’t very important for making Seer debug well, but every additional type of connected data pays huge dividends. So, by making metrics first-class citizens in our telemetry graph, we ended up giving Seer a lot more connected context to work with.

I think this was a good demonstration for our team of the importance of sticking to your original vision for something. The original “make it all trace-connected” vision really did pay dividends vs. just shipping a traditional metrics solution as a separate feature, and it paid off even for a feature we hadn’t fully envisioned at the time.

The long road was worth it

If you’re reading this, you probably know how hard it is to kill a product you’ve already built. It’s harder still to admit that what you have, while functional, isn’t right. The sunk cost fallacy is a mighty foe. And I’m really sorry to all of the beta testers who were enjoying metrics v1, but we’re convinced the wait was worth it for the new version.

So, this wasn’t exactly a traditional marketing blog post announcing a product, but since we’re taking a different tack on this one, I thought it would be interesting to share the thought process. I hope you get a chance to try our new metrics product as we continue to build it and improve it, and hopefully this story also gives you a little inspiration for your own software projects to not be afraid to make the tough calls.