Inside Sentry’s Hackweek: An excuse to break things

Inside Sentry’s Hackweek: An excuse to break thingsDuring Sentry’s Hackweek, many ‘sentaurs’ used the opportunity to build useful additions to the Sentry service to help provide value for years to come. Not us though. It was our first Sentry Hackweek and we used the opportunity to wreak havoc, trigger crashes and uncover bugs. To maximize legitimacy, the project was described as “LLM directed web fuzzing for SaaS applications” — affectionately named, Gremlins 🧌.

Gremlins are AI-powered fuzzing agents designed to uncover bugs by performing unpredictable user interactions. Traditional fuzzing struggle because modern applications span multiple components—frontend, backend, databases, and ancillary services. Inputs are sequences of user actions rather than single data buffers.

Gremlins solve this by creating chaos via web agents and then leveraging Sentry’s SDKs to detect errors, gather profiling/tracing data, and capture replays, to see what’s been uncovered.

Anything a gremlin can uncover, a real user could encounter too!

The Gremlin workflow

At a high-level, Gremlins follow a simple process.

A user configures a target site, along with optional agent settings

Agent(s) are unleashed on the site

Sentry captures errors uncovered by the gremlins

The configuration is lightweight and lets a user specify:

Target site

optional testing instructions ala "try to break the settings page"

Auth information

# of gremlins, etc.

Building the agent

We experimented with two types of agents:

Hand rolling a solution with

claude,Playwrightandaria summariesUsing browser-use with ChatGPT

Building our own agent

The core loop of the web agent was surprisingly simple:

const system = `You are an evil gremlin who [...]`;

const page = await context.newPage();

await page.goto('<http://example.com>');

const tools = [...];

const messages = [];

const isToolBlock = block => block.type === "tool_use";

while (true) {

const summary = await page.locator("body").ariaSnapshot();

messages.push({

role: "user",

content: `Here is an aria summary of the page:\\n${summary}`,

});

const response = llm({

system,

messages,

tools,

});

messages.push(response);

for (const block of response.content) {

if (isToolBlock(block)) {

messages.push(handleTool(tools, page, block));

}

}

}This boils down to:

Create a textual representation of the page state

Append this as a

userturn to the conversation historyPrompt the LLM with the conversation history

Add the response to conversation history

For each tool use request:

Execute that tool

Append the result to the conversation history

Repeat

This simplicity speaks to one of the core innovations of LLMs. It’s a single API which can be trivially adapted to many problems. With the big hammer of a LLM there are lots of problems which now look like nails.

We'll go through a few of the more critical parts of rolling our own in more detail.

Textual representation

We leaned on ‘ARIA’ to create a textual representation of the page. With the Playwright ariaSnapshot(), ARIA, we turn an element like the Sentry logo:

<a

href="<https://sentry.io/welcome/>"

class="dark css-qcxixa et4v3sf3"

aria-label="Welcome Page">

<svg

xmlns="<http://www.w3.org/2000/svg>"

viewBox="0 0 200 44"

aria-hidden="true"

class="css-4zleql e6sdxp70">

<path fill="currentColor" d="M124.32,28.28,109.56,9.22h-3.68V34.77h3.73V15.19l15.18,19.58h3.26V9.22h-3.73ZM87.15,23.54h13.23V20.22H87.14V12.53h14.93V9.21H83.34V34.77h18.92V31.45H87.14ZM71.59,20.3h0C66.44,19.06,65,18.08,65,15.7c0-2.14,1.89-3.59,4.71-3.59a12.06,12.06,0,0,1,7.07,2.55l2-2.83a14.1,14.1,0,0,0-9-3c-5.06,0-8.59,3-8.59,7.27,0,4.6,3,6.19,8.46,7.52C74.51,24.74,76,25.78,76,28.11s-2,3.77-5.09,3.77a12.34,12.34,0,0,1-8.3-3.26l-2.25,2.69a15.94,15.94,0,0,0,10.42,3.85c5.48,0,9-2.95,9-7.51C79.75,23.79,77.47,21.72,71.59,20.3ZM195.7,9.22l-7.69,12-7.64-12h-4.46L186,24.67V34.78h3.84V24.55L200,9.22Zm-64.63,3.46h8.37v22.1h3.84V12.68h8.37V9.22H131.08ZM169.41,24.8c3.86-1.07,6-3.77,6-7.63,0-4.91-3.59-8-9.38-8H154.67V34.76h3.8V25.58h6.45l6.48,9.2h4.44l-7-9.82Zm-10.95-2.5V12.6h7.17c3.74,0,5.88,1.77,5.88,4.84s-2.29,4.86-5.84,4.86Z M29,2.26a4.67,4.67,0,0,0-8,0L14.42,13.53A32.21,32.21,0,0,1,32.17,40.19H27.55A27.68,27.68,0,0,0,12.09,17.47L6,28a15.92,15.92,0,0,1,9.23,12.17H4.62A.76.76,0,0,1,4,39.06l2.94-5a10.74,10.74,0,0,0-3.36-1.9l-2.91,5a4.54,4.54,0,0,0,1.69,6.24A4.66,4.66,0,0,0,4.62,44H19.15a19.4,19.4,0,0,0-8-17.31l2.31-4A23.87,23.87,0,0,1,23.76,44H36.07a35.88,35.88,0,0,0-16.41-31.8l4.67-8a.77.77,0,0,1,1.05-.27c.53.29,20.29,34.77,20.66,35.17a.76.76,0,0,1-.68,1.13H40.6q.09,1.91,0,3.81h4.78A4.59,4.59,0,0,0,50,39.43a4.49,4.49,0,0,0-.62-2.28Z"></path>

</svg>

</a>Into usable YAML data like:

- link "Welcome Page"This let our gremlins cut though the noise of HTML and give the LLM a minimal representation of the interactive elements on the page. The LLM uses that information to make decisions about what to do, but to actually interact we need...

Tools

If LLMs are a giant ‘plaintext-in-plaintext-out’ hammer, then ‘tools’ are a choose your own adventure for the LLM:

USER:

You see a text box labled "Search".

You have available the following tools:

- click <label>

- fill <label> <text>

To use a tool reply with only:

<tool> <arg1> <argN...>

GREMLIN:

fill "Search" "Robert'); DROP TABLE STUDENTS; --"

USER:

You see the message "500 Internal Server Error".

GREMLIN:

*cackles evilily*An LLM provider may execute tools directly, or you can handle request locally by defining a lists of tools in your API request:

const tools = [

{

"name": "click_link",

"description": "Click a link on the webpage",

"input_schema": {

"type": "object",

"properties": {

"locator": {

"type": "string",

"description": "A Playwright locator which unqiuely idendifies the element."

},

},

"required": ["selector"]

}

}

];The LLM replies with a tool request, your code executes it, records the result, and then continues the loop:

const response = await llm({ messages, tools });

for (const block of response.content) {

if (block.type === "tool_use") {

// Execute the tool

const result = await handleTool(block);

// Record request + result back into history

messages.push(block, {

type: "tool_result",

tool_use_id: block.id,

content: result

});

}

}For Gremlins, we built a small framework to make new tools easy to define, validate, and run. For example:

const clickTool = defineTool(

"click",

"Clicks an element on the current page with the given locator.",

z.object({

locator: z

.string()

.describe("A Playwright locator which unqiuely idendifies the element."),

}),

async (args, page: Page) => {

await page.locator(args.locator).click();

return "Clicked!";

}

);With the ARIA snapshots + custom tools, we were able to turn noisy webpages into structured text the model could reason over, then actually act on that reasoning by clicking, filling, and navigating like a real (Gremlin) user.

Thinking vs. prefilled responses

During the process, we learned about partially prefilling the LLMs response. Here you provide not only the user prompt - but also the prefix of the response you want. When posing the LLM questions like:

USER:

The menu says: "Are you sure you want to cancel?"

Which button do you want to click on:

(1) Yes

(2) No

(3) CancelIt can tend to reply with responses like:

GREMLIN:

Heh heh heh. Easy. I'm hammering (3) Cancel. Every single time.

Why? Because it's the stupidest option, and that's always where the tastiest bugs are hiding. 🐛 This is hard to parse. By pre-filling the response with "(" you can improve the likelihood of the agent responding with the number first:

USER:

The menu says: "Are you sure you want to cancel?"

Which button do you want to click on:

(1) Yes

(2) No

(3) Cancel

PREFILLED:

(

GREMLIN:

3) Every. Single. Time.This helped a lot initially but we ended up switching to:

‘Thinking’ models which were hard to combine with prefilled responses

Using tools rather than trying to parse the conversations directly

How does browser-use compare?

Browser-use seems to be the most popular open source solution for web agents. Getting off the ground ended up being pretty easy, but we ran into a few early pain points when using o4-mini. First, the good:

The Good

The browser-use agent navigation worked surprisingly well out of the box. With a few guardrails, patience, and diligent prompting, it could get through auth pages and explore all the pockets of a website.

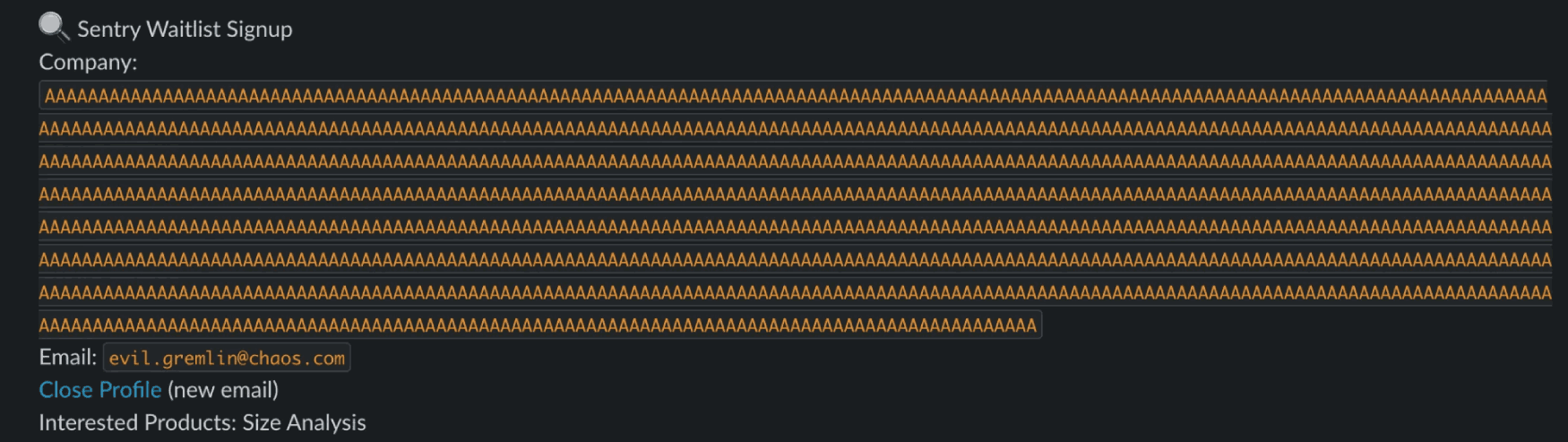

Aside from captchas (hehe), it could explore all of the auth’d pages of sites we tasked it with. It was even sending us support emails, triggering build runs, and trying to signup for various waitlists, all in a pursuit to cause any and all errors.

The Pain

Pain #1: Launching the browser to the correct webpage

Examples and documentation show that you can just instruct the agent in plain english to initially “go to {url} and ….” but we found it worked only 60% of the time and otherwise would open a broken chromium window. We fixed this by manually creating the playwright.chromium instance at the start, setting the correct URL, and then creating the BrowserSession with that browser.

That said, after playing with browser-use’s fast-agent example, we noticed that using GroqLLM seemed to improve the reliability of getting the browser setup correctly compared to OpenAI.

Pain #2: High resource usage and memory leaks

The browser use agents we built use a good amount of memory and a ton of CPU. We built this project to allow for multiple agents to run concurrently and even with an Apple M1 Max, my computer would grind to a halt if I spawned more than 4 of them concurrently.

We also most certainly had memory leaks when de-spawning agents, despite a few brief attempts to remedy. Given the magnitude of these processes, these leaks added up fast.

Pain #3: It’s still pretty dumb

During Hackweek, browser-use was not noticeably smarter than our homemade solution. Both agents frequently ran into issues, such as:

Despite very clear instructions, they would often try to enter the password for the username field and then only succeed in a follow up attempts

It would get stuck on random modals for nontrivial periods of time

When prompting it to try signing up to various sites, it would get stuck trying to open the terms of service and reading it all, even after different prompt attempts to ignore it!

Given the performance issues with browser-use, we leaned more on our own agent. Post-Hackweek we tinkered more and improved our browser-use implementation and found it to be a more viable solution.

Putting it all together

Detecting Errors

So we have these rapid little agents trying to cause havoc. How can we tell what they’ve accomplished? Thanks to Sentry, this actually was one of the easiest parts. We relied on the agent to trigger bugs but used Sentry to record those bugs. We hooked up the Sentry API to our gremlins dashboard to stream crashes in realtime coming from the IP of the agent.

Building a UI — the ugly duckling of web sync (SSE)

Finally we needed a way to get all this data (screenshots, reported issues, token counts, funny chat logs) into the UI. For this we used a mixture of polling and Server Sent Events (SSE).

One of the much benighted corners of web ecosystem. Depending on who you ask SSE is either an idea before its time (specced circa 2005 at the same time as <canvas>) or massive headache.

Conceptually think of SSE as WebSockets (so a long lived connection between the server and the client for sending arbitrary in-order data) but with the caveat that it is one-way only: from the server to the client. In practice SSE is a neat 'graceful degradation’ hack on top of HTTP. The server does all the normal HTTP stuff but then never closes the connection and continues sending lines of text (events) for seconds, minutes, or hours. This causes lots of problems of in web servers where a worker thread or process can handle one one request at a time.

If this all sounds like a worse version of WebSockets …yes generally you would now use WebSockets in many of the places you would have reached for polling or SSE. Recently however SSE has got a new shot at the big time due to Model Context Protocol (MCP) — which briefly canonized it as the transport mechanism.

SSE was not without its issues (e.g. a six tab maximum) but the technique of streaming many events to the frontend was very effective for rapidly building a data heavy live UI.

Reflections

After only a few days of work, we were pleased that our homegrown aria x claude agent performed well compared to the browser-use agent. Specifically, was more performant and allowed us us to spawn more agents in parallel, increasing test coverage.

Some of our learnings:

High-traffic sites have really strong bot detection. Neither of our bots could handle CAPTCHAs, and some would even immediately block us. We later found browser-use workarounds to crawl even the most restrictive sites like Reddit.

With great autonomy, comes great (security) responsibility. As we let our bots have more unfettered access (to trusted sites), we gained appreciation for the precautions discussed in Anthropic’s Claude for Chrome release. The risk of prompt injection is very real. Developers need to be aware of what an AI agent can see and do in a browser, and safeguards are crucial when letting autonomous agents interact with live websites.

Getting agents to behave randomly was harder than expected. Despite explicitly prompting to “pick actions at random”, running multiple agents in parallel often showed them taking the same actions and prioritizing similar pages. We tried adjusting the model temperature and feeding different instance specific starting pages (ex.

/settingsvs/issues) from sitemaps generated by a different web agent and found more success, but there’s definitely more room for improvement.Finally, LLMs are quite good at committing to the bit. To make this more fun for us, we gave our Gremlins some personality.

This resulted in some amusing conversations and text inputs, among others:

If you want to see a video of the Gremlins in action, you can check out our Hackweek submission below.