How to measure and fix latency with edge deployments and Sentry

In a 2017 study, Google researchers found:

As page load time goes from 1 to 3 seconds, the probability of bounce increases 32%.

That was over 8 years ago. And let’s be honest, it’s not likely users have found any additional patience in that time.

Web Vitals are a set of performance metrics defined by Google that measure user experience. They focus on things like LCP (Largest Contentful Paint: how long the main content takes to load), INP (Interaction to Next Paint: how quickly the page responds to input), and CLS (Cumulative Layout Shift: how visually stable the app is, meaning whether content shifts unexpectedly).
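To make those metrics a little more concrete, here is a minimal sketch that rates a measurement against the "good" and "poor" thresholds Google publishes for each Web Vital. The function and constant names are made up for illustration; only the threshold values come from Google's guidance.

```javascript
// Thresholds from Google's Web Vitals guidance.
// A value at or below `good` is "good"; above `poor` is "poor".
const THRESHOLDS = {
  LCP: { good: 2500, poor: 4000 }, // milliseconds
  INP: { good: 200, poor: 500 },   // milliseconds
  CLS: { good: 0.1, poor: 0.25 },  // unitless layout-shift score
};

// Rate a single metric value as "good", "needs improvement", or "poor".
// `rateVital` is a hypothetical helper, not part of any SDK.
function rateVital(name, value) {
  const limits = THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  if (value <= limits.good) return "good";
  if (value <= limits.poor) return "needs improvement";
  return "poor";
}

console.log(rateVital("LCP", 1800)); // good
console.log(rateVital("INP", 350));  // needs improvement
console.log(rateVital("CLS", 0.3));  // poor
```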

When a delay of even 1 second can lead to fewer sales or missed signups, it’s critical to investigate latency from all angles.

Even with a hyper-optimized application, there’s going to be at least one bottleneck you can’t fix with a PR: distance.

Identifying latency

You can’t fix what you can’t see.

If your service runs on a single server in the US, users farther away, say in Asia or Europe, will naturally see slower load times simply because their requests have farther to travel.
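A rough back-of-the-envelope sketch shows why distance sets a floor on latency. It assumes light travels through fiber at about 200,000 km/s (roughly two thirds of its speed in a vacuum) and uses approximate great-circle distances. Real routes are longer and add processing delays, so treat these numbers as best-case lower bounds rather than measurements.

```javascript
// Assumption: signals in optical fiber cover roughly 200 km per millisecond.
const FIBER_KM_PER_MS = 200;

// Best-case round-trip time in milliseconds for a one-way distance in km.
function minRoundTripMs(distanceKm) {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}

// Approximate great-circle distances (illustrative values).
const ASHBURN_TO_TOKYO_KM = 10850;
const ASHBURN_TO_LONDON_KM = 5900;

console.log(minRoundTripMs(ASHBURN_TO_TOKYO_KM));  // 108.5
console.log(minRoundTripMs(ASHBURN_TO_LONDON_KM)); // 59

// A request rarely needs just one round trip. Assuming three sequential
// round trips (connection setup, TLS, then the request itself):
const ASSUMED_ROUND_TRIPS = 3;
console.log(ASSUMED_ROUND_TRIPS * minRoundTripMs(ASHBURN_TO_TOKYO_KM)); // 325.5
```

No amount of code optimization removes this floor; the only fix is to shorten the distance.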

Let’s walk through a basic setup to measure and visualize the impact of regional latency using Sentry.

Test setup

We’ll deploy an e-commerce store with both a frontend app and backend API in the US, specifically in the iad (Ashburn, Virginia) region using Fly.io, which makes it easy to deploy code in up to 35 regions.

These will be deployed as two separate VMs, in the same region. For now, our traffic is entirely US-based.

This represents our “ideal” baseline.

Using Sentry to visualize latency

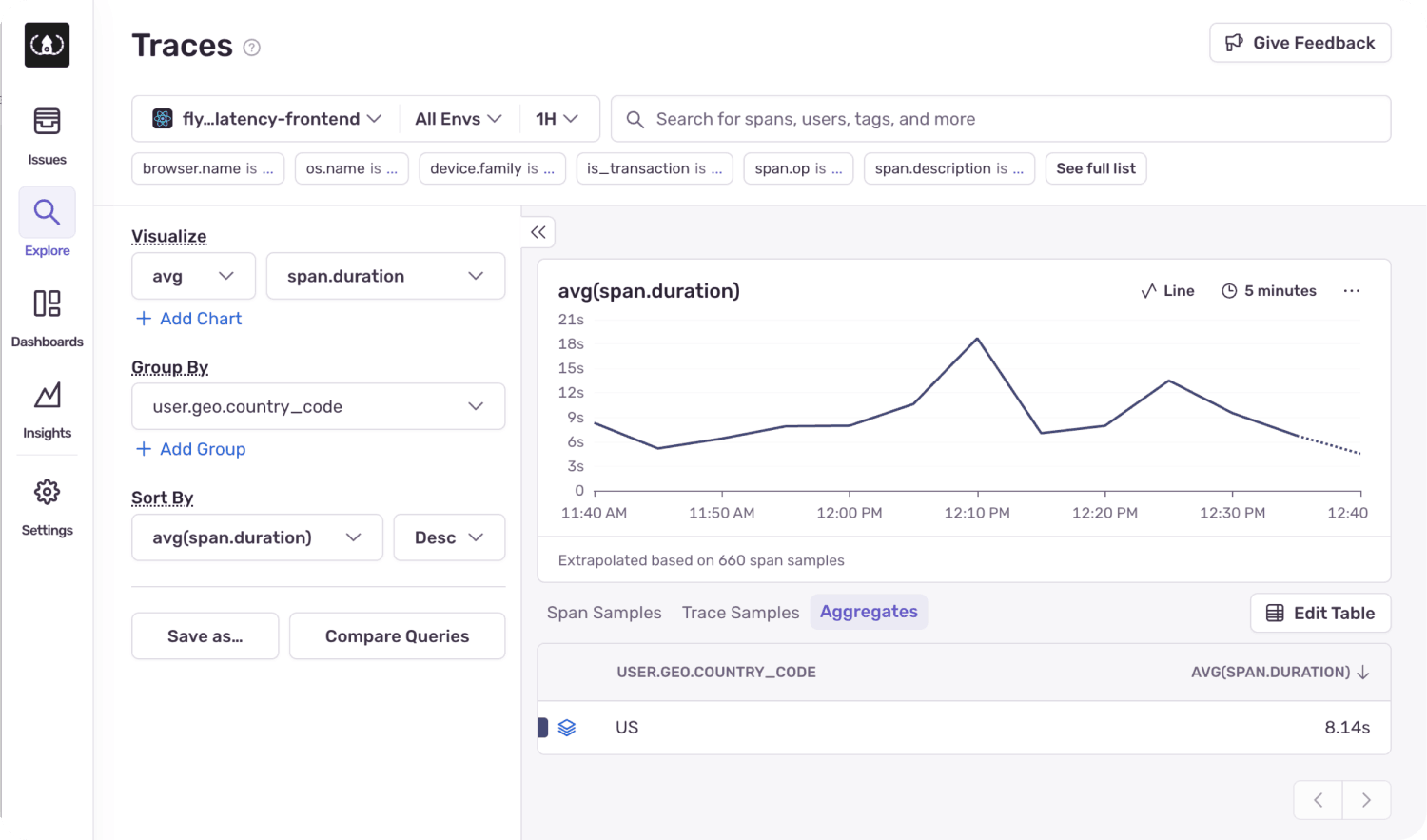

From our Sentry dashboard, we’ll click into Explore > Traces to view all of the traffic in our application. Each span represents some event or action that we can measure in our app.

In the drop-down in the top left, we can narrow down which projects we want to view traces for. Right now, we’ll focus on just our frontend application, as it is capable of measuring our users’ experience through the app.

Change the Visualize setting from count to the avg of span.duration. Now, rather than just seeing the raw number of spans captured, we can start to analyze how long they are taking on average.

Underneath, click the drop-down labeled “Group By” and select user.geo.country_code to get the average duration broken down by country.

In our demo, it’s just us visiting, so we are only seeing a single data grouping for the US. If your app is receiving traffic from multiple countries, you’ll be able to see them all displayed here.

However, this is currently showing the average duration of all spans. Instead, let’s scope our search down to a specific event we want to measure.
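If it helps to see what that view is computing, here is a small sketch of the same aggregation in plain JavaScript: an average of span durations grouped by country, first across all spans and then scoped to a single span description. The span records and field names are made up for illustration; this is not how Sentry stores or queries data internally.

```javascript
// Hypothetical span records standing in for what the app would send.
const spans = [
  { description: "Fetch Cart", durationMs: 800, countryCode: "US" },
  { description: "Fetch Cart", durationMs: 1200, countryCode: "US" },
  { description: "Fetch Cart", durationMs: 2400, countryCode: "JP" },
  { description: "Load Products", durationMs: 600, countryCode: "JP" },
];

// Average duration per group, similar in spirit to avg(span.duration)
// combined with a Group By.
function averageDurationBy(records, keyFn) {
  const totals = new Map();
  for (const record of records) {
    const key = keyFn(record);
    const entry = totals.get(key) ?? { sum: 0, count: 0 };
    entry.sum += record.durationMs;
    entry.count += 1;
    totals.set(key, entry);
  }
  const averages = {};
  for (const [key, { sum, count }] of totals) averages[key] = sum / count;
  return averages;
}

// All spans, grouped by country.
const byCountry = averageDurationBy(spans, (s) => s.countryCode);
console.log(byCountry); // { US: 1000, JP: 1500 }

// Scoped to one span description before grouping.
const cartOnly = spans.filter((s) => s.description === "Fetch Cart");
const cartByCountry = averageDurationBy(cartOnly, (s) => s.countryCode);
console.log(cartByCountry); // { US: 1000, JP: 2400 }
```

Notice how the unscoped average for JP hides the slow cart request behind a faster, unrelated span.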

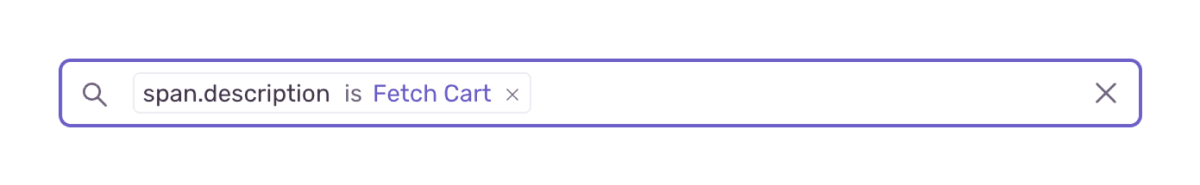

We can manually wrap any callback in our code with a Sentry.startSpan to give it specific properties that are easy to query.

    // Wrap the cart request in a custom span so it is easy to find in Sentry.
    const fetchCartData = async () => {
      return Sentry.startSpan({ name: "Fetch Cart", op: "http.request" }, async () => {
        try {
          const res = await client.FetchCart();
          const data = await res.json();
          return { success: res.ok, data };
        } catch (error) {
          // Report the failure to the caller instead of throwing.
          return { success: false, error };
        }
      });
    };

In this case, I have wrapped a call in our application responsible for loading the contents of the cart. By setting the name, we can easily search for this span via the span.description.

Previously we saw an average span duration of 8.14s, but after scoping in to just the “Fetch Cart” span, our new average is 7.84s.

For our demo, the server is intentionally under heavy load, so it is fairly slow. But let’s see how this compares when the backend is deployed physically far away from the user, like in Japan.

Why edge computing matters

Traditional applications are often stuck in a single region (especially when a database is involved), so latency from distance can become a bottleneck larger than anything you could optimize away in code.

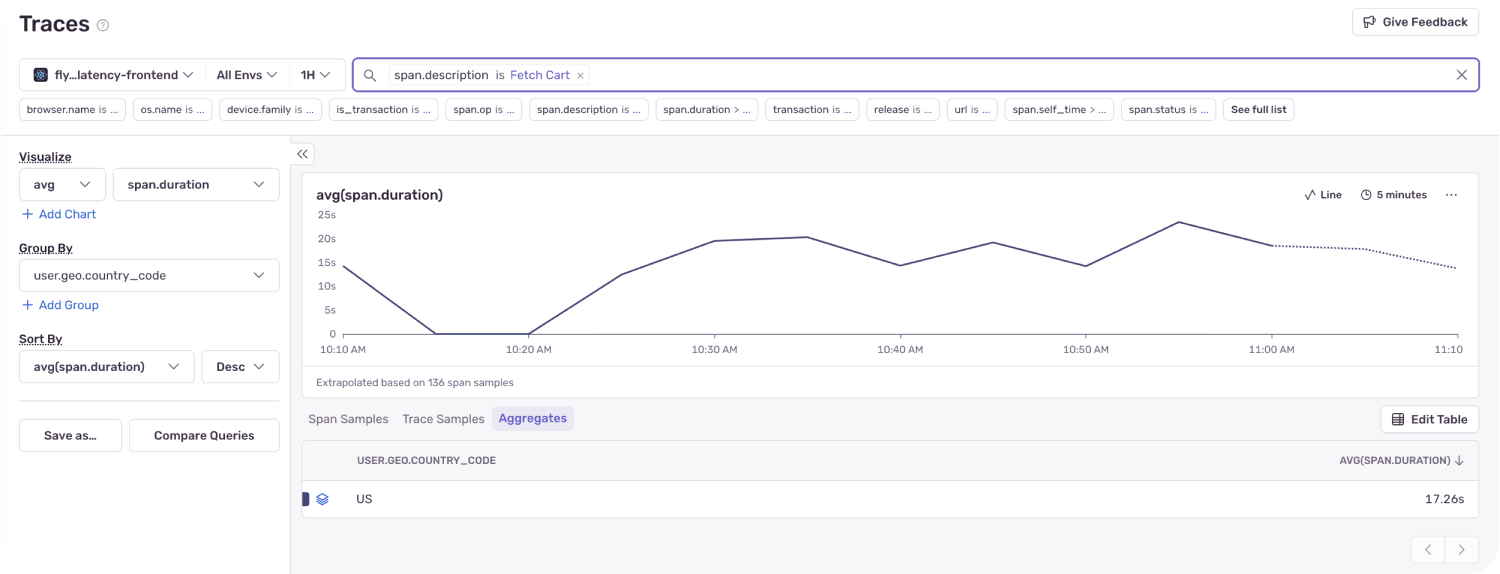

Let’s run our test again. This time, we’ll deploy the backend to Japan (nrt). This would be analogous to a service native to Japan but with a user presence in the US.

The code is exactly the same. There will be some variability, but on average, any additional time we see now should be due to the added network latency.

Our new average span duration for the “Fetch Cart” event is 17.2s, or over 2x as long.

While some of that may be attributed to the variable nature of our calls, the majority of the time difference is due to the travel time of the frontend server communicating with the backend over a great distance.
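For reference, here is the arithmetic behind that comparison, using the two averages measured above. The variable names are just for illustration, and the added time is only an upper bound on what distance alone contributes, since some run-to-run variability is mixed in.

```javascript
const baselineSeconds = 7.84; // "Fetch Cart" average with the backend in iad
const remoteSeconds = 17.2;   // "Fetch Cart" average with the backend in nrt

// Extra time per request after moving the backend.
const addedSeconds = remoteSeconds - baselineSeconds;

// How many times slower the remote deployment is.
const slowdown = remoteSeconds / baselineSeconds;

// Portion of the slower run made up of the added time.
const addedShare = addedSeconds / remoteSeconds;

console.log(addedSeconds.toFixed(2));       // 9.36
console.log(slowdown.toFixed(2));           // 2.19
console.log((addedShare * 100).toFixed(0)); // 54
```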

In a real application, you might have your server deployed in a single region, as we have, and you would then see traffic coming from multiple countries in the same graph. The issue would be the same, though.

How to compute at the edge?

Edge computing is the idea of hosting your services and data physically close to your end users. Edge computing providers will typically route your users to the closest available location automatically.
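As a simplified illustration of that routing idea, the sketch below picks the geographically nearest region for a user by great-circle distance. The region list, coordinates, and function names are assumptions for the example. Real providers generally route on network topology and health rather than pure geography, so this is a mental model, not a description of any provider's implementation.

```javascript
// Hypothetical region list with approximate coordinates.
const REGIONS = [
  { code: "iad", lat: 38.94, lon: -77.46 }, // Ashburn, Virginia
  { code: "nrt", lat: 35.77, lon: 140.39 }, // Tokyo, Japan
  { code: "lhr", lat: 51.47, lon: -0.45 },  // London, UK (assumed extra region)
];

const toRadians = (degrees) => (degrees * Math.PI) / 180;

// Great-circle distance between two points using the haversine formula.
function distanceKm(a, b) {
  const dLat = toRadians(b.lat - a.lat);
  const dLon = toRadians(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(a.lat)) * Math.cos(toRadians(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * 6371 * Math.asin(Math.sqrt(h)); // 6371 km is the mean Earth radius
}

// Return the region closest to the user's location.
function nearestRegion(user, regions = REGIONS) {
  return regions.reduce((best, region) =>
    distanceKm(user, region) < distanceKm(user, best) ? region : best
  );
}

console.log(nearestRegion({ lat: 34.69, lon: 135.5 }).code);  // Osaka: nrt
console.log(nearestRegion({ lat: 48.86, lon: 2.35 }).code);   // Paris: lhr
console.log(nearestRegion({ lat: 41.88, lon: -87.63 }).code); // Chicago: iad
```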

Some modern software paradigms allow simple functions to be run in the “cloud” globally. Edge networking is often available from “serverless” providers like Vercel’s “Vercel Functions” or Cloudflare’s “Workers”.

Using Sentry for measuring our average trace durations in different regions, we can see that users are having a less-optimal experience the farther they are from our backend (and to some extent, our frontend). What can you do to ensure the fastest load times for your users?

Many hosting providers and cloud platforms offer solutions for edge computing and CDNs (which store files close to your users).

Serverless functions

Serverless edge functions are one of the easiest ways to move code closer to your users, without having to manage infrastructure. Platforms like AWS Lambda@Edge, Cloudflare Workers, and Vercel Functions run lightweight, stateless functions in data centers around the world.

Because the platform handles scaling, provisioning, and routing automatically, you can focus on writing your code, and know that it will run nearly instantly from the closest location to your users.

A lot of popular modern frameworks like Next.js have adapters or built-in support for running on serverless edge platforms as functions. If you are using a popular framework, migrating to the edge might only be a plugin away.
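To give a feel for how small a stateless edge function can be, here is a minimal sketch of a Workers-style fetch handler that reports which location served the request. It assumes a runtime with the standard Request and Response globals (Cloudflare Workers, or Node 18+ for local experiments). The response shape and the x-served-from header are invented for this example.

```javascript
// A Workers-style fetch handler. On Cloudflare Workers you would expose it
// with `export default { fetch: handleRequest }`. It is left as a plain
// function here so the sketch also runs under Node 18+.
async function handleRequest(request) {
  const url = new URL(request.url);

  // `request.cf` is populated by Cloudflare and is absent in other runtimes,
  // so fall back to "unknown" when it is missing.
  const colo = request.cf?.colo ?? "unknown";

  const body = JSON.stringify({ path: url.pathname, servedFrom: colo });
  return new Response(body, {
    status: 200,
    headers: {
      "content-type": "application/json",
      // Hypothetical header, handy for checking which location answered.
      "x-served-from": colo,
    },
  });
}

// Local usage: outside Cloudflare there is no `cf` object.
handleRequest(new Request("https://example.com/cart"))
  .then((response) => response.json())
  .then((data) => console.log(data.path, data.servedFrom)); // /cart unknown
```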

Containers

If your app can’t be broken down into small stateless functions, containers are the next step for running at the edge. Pretty much anything that runs in Docker can be deployed to a VM in an edge region. Platforms like Fly.io specialize in deploying micro-VMs to dozens of regions, giving you serverless-like capabilities for nearly all apps.

With containers, you can run full-stack services, custom runtimes, and background processes in multiple locations. For example, you might deploy your frontend and API to several edge regions and manage your own high-availability Postgres cluster.

The benefit here is flexibility. You can run exactly what you need, in exactly the regions you need it. The trade-off is that you’re responsible for more of the operational side: scaling, health checks, failover, and keeping images up to date. But for complex apps that don’t fit neatly into serverless constraints, containers give you the power to go global without rewriting your architecture.
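Once the same container runs in several regions, it helps to know which one handled a given request. One possible approach, sketched below, is to tag Sentry events with the deployment region. Fly.io exposes the region through the FLY_REGION environment variable, and Sentry's SDKs provide setTag. The tag name "deploy.region" and the helper functions are assumptions for this example, and a stand-in object replaces the real SDK so the snippet runs on its own.

```javascript
// Read the region the process is running in. FLY_REGION is set by Fly.io;
// anywhere else this falls back to "unknown".
function currentRegion(env) {
  return env.FLY_REGION ?? "unknown";
}

// `sentry` is any object exposing Sentry's `setTag(key, value)` method, such
// as the namespace imported from your Sentry SDK.
// "deploy.region" is a hypothetical tag name.
function tagDeployRegion(sentry, env) {
  const region = currentRegion(env);
  sentry.setTag("deploy.region", region);
  return region;
}

// Usage with a stand-in object, so no SDK install is needed to try it.
const recordedTags = {};
const fakeSentry = {
  setTag: (key, value) => {
    recordedTags[key] = value;
  },
};

tagDeployRegion(fakeSentry, { FLY_REGION: "nrt" });
console.log(recordedTags["deploy.region"]); // nrt
```

In a real service you would pass the imported Sentry namespace and process.env instead of the stand-ins.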

Databases

When you’re pushing your app out to the edge, the database is often the hardest part to keep up. You can move your frontend and backend closer to users pretty easily, but your data still needs to come along for the ride, and that’s where things get tricky.

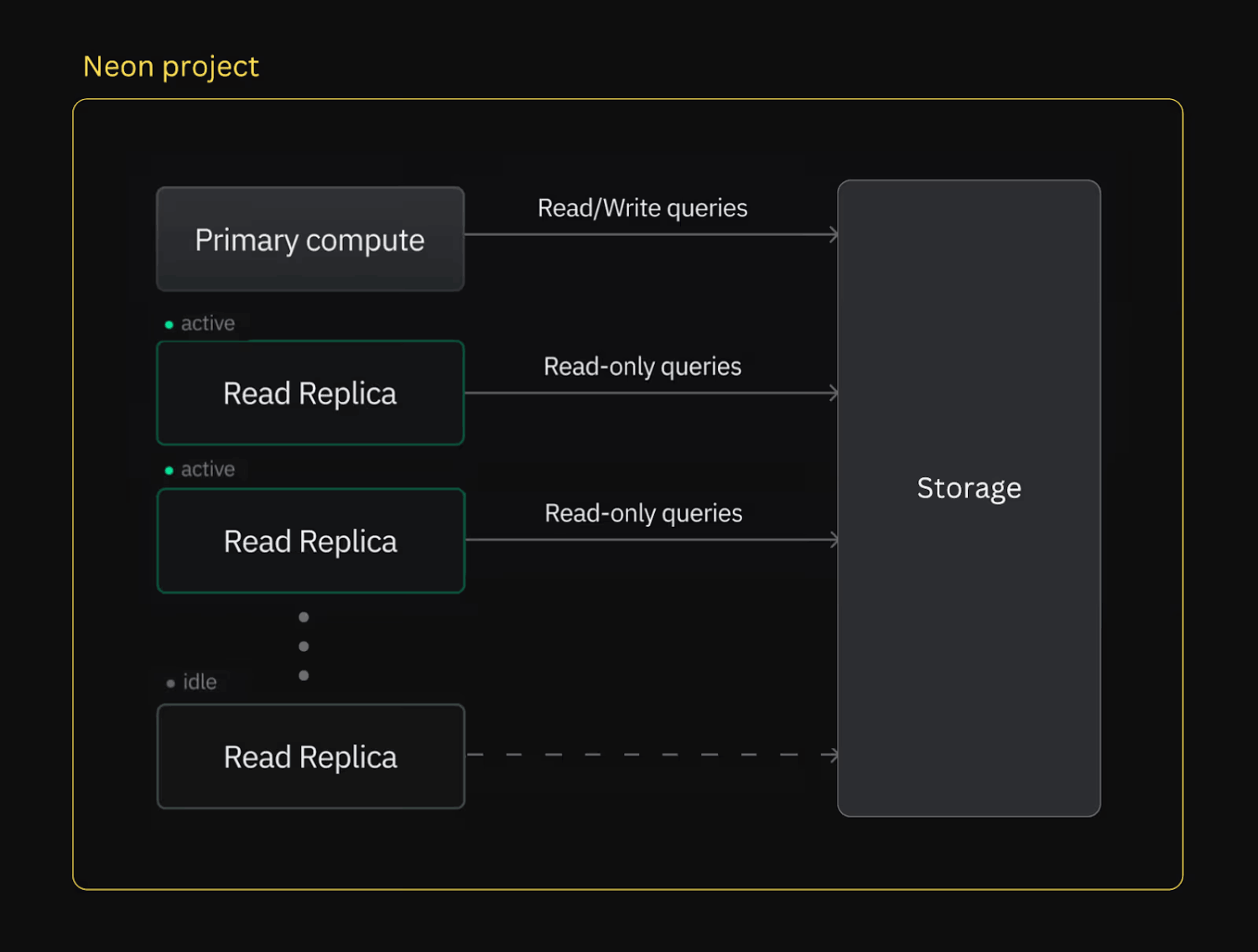

With a typical setup, like Postgres on Supabase, a read replica is a full copy of your primary database running in another region. It’s kept up to date by streaming changes from the primary, and because it’s sitting physically closer to your users, queries can return much faster.

Writes still go directly to the primary, but reads hit the nearest replica, cutting down round-trip time and taking some load off the main database. The trade-off: you’re paying for the compute and storage for each replica, and there’s a tiny bit of replication lag.
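The read/write split described above can be sketched as a small routing function: writes go to the primary, reads go to the replica in the caller's region when one exists. The connection strings, region codes, and function names are hypothetical, and the read check is deliberately naive. Because of replication lag, a read issued right after a write may also need to go to the primary.

```javascript
// Hypothetical connection strings for illustration only.
const PRIMARY_URL = "postgres://primary.example.internal/shop";
const REPLICA_URLS = {
  iad: "postgres://replica-iad.example.internal/shop",
  nrt: "postgres://replica-nrt.example.internal/shop",
};

// Naive check: only plain SELECT statements count as reads.
// SELECT ... FOR UPDATE takes locks, so it is routed like a write.
function isReadQuery(sql) {
  return /^\s*select\b/i.test(sql) && !/\bfor\s+update\b/i.test(sql);
}

// Pick a database URL for a statement issued from a given region.
function chooseDatabaseUrl(sql, region) {
  if (!isReadQuery(sql)) return PRIMARY_URL;
  return REPLICA_URLS[region] ?? PRIMARY_URL; // no local replica: use primary
}

console.log(chooseDatabaseUrl("SELECT * FROM cart WHERE user_id = 1", "nrt"));
// postgres://replica-nrt.example.internal/shop
console.log(chooseDatabaseUrl("UPDATE cart SET qty = 2 WHERE id = 7", "nrt"));
// postgres://primary.example.internal/shop
```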

Neon has a different approach, offering “serverless” Postgres. They separate compute from storage, so spinning up a replica does not mean copying all your data. Instead, you get another compute node pointing at the same storage layer.

That makes it cheap and fast to scale compute down to zero when not in use, and to quickly spin up replica compute nodes in other regions when needed. The potential downside: because every compute node reads from that same storage layer, you are likely to save money, but there may be some additional latency.

Whether it’s a full Postgres read replica or a serverless Neon compute node, the goal is the same: move closer to your users so queries return faster. Combined with caching (like KV), this is one of the most effective ways to make a globally distributed app feel snappy.
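The caching half of that pairing can be sketched as a cache-aside helper with a time-to-live. An in-memory Map stands in for a real KV store here, and every name in the snippet is an assumption for illustration. A real edge KV has its own API and consistency behavior, so treat this as the general pattern only.

```javascript
// Cache-aside with a TTL. The Map stands in for a KV store; `now` can be
// swapped out to control time in tests.
function createCache(ttlMs, now = () => Date.now()) {
  const entries = new Map();
  return {
    async getOrLoad(key, loader) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) return hit.value; // fresh: skip origin
      const value = await loader(key); // miss or expired: go to the origin
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

// Usage: the second read is served from the cache, so the (hypothetical)
// origin loader only runs once.
let originCalls = 0;
const loadProduct = async (id) => {
  originCalls += 1;
  return { id, name: "Example product" };
};

const productCache = createCache(60_000);
productCache
  .getOrLoad("sku-1", loadProduct)
  .then(() => productCache.getOrLoad("sku-1", loadProduct))
  .then((product) => console.log(product.name, originCalls)); // Example product 1
```

The TTL is the knob to tune: longer values save more round trips to the origin but serve staler data.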

TL;DR

User experience takes a hit when latency goes up. Even a one-second delay can cost sales or signups.

Using Sentry, you can measure real-world performance for user experiences across regions and see exactly how physical distance affects load times. By utilizing modern edge computing paradigms like serverless compute, read replicas, and containerized deployments, you can push your application closer to your users, reduce round-trip times, and keep your site feeling fast no matter where your audience is. Pair these with smart caching and you can deliver a snappy, reliable experience to a global user base.