Beyond Coverage: Flaky Test Detection, AI Test Generation, and More

We’re expanding beyond code coverage with a suite of new capabilities to help you break production less: AI PR Review, AI Test Generation, Test Analytics, and Bundle Analysis.

AI PR Review

Raise your hand if you’ve ever pushed something to production and immediately face-palmed at the rookie-level mistake another set of eyes definitely would’ve caught. Syntax just did a whole series of spooky web development stories, and several of the stories probably would have benefited from or maybe even been prevented by a basic pre-release review.

Sure, you could Slack a teammate and ask them to take a look at your work. Or you could just open the PR and follow your team’s merge requirements. While that works most of the time, most reviewers mainly care about the logic and whether the big pieces work as intended, not fussing with typos or placeholder text. And to be very honest, we agree. So, we built an AI-powered code review assistant that will catch the basic errors you may have missed, giving reviewers more space to review the critical changes in your PR thoroughly.

The next time you push a change, just comment @codecov-ai-reviewer review in the PR to get feedback in seconds. Unfortunately for one developer, it won’t catch <h1> OH MY GOD I’M A MONKEY </h1>, but it will catch things like incorrect attribute usage. So, when you’re building out a login form and accidentally write <button type="button">Login</button> instead of <button type="submit">Login</button>, we’ve got your back—before your users start rage-clicking.

In addition to flagging errors, it also suggests ways to improve efficiency and readability.

For example, when our team was formatting headers for forwarded webhooks, it commented, "The use of array_map to flatten the headers is correct, but it can be simplified and made more readable by using a single line lambda function."

To use AI PR Review, you just need to have both the Codecov GitHub app and the Codecov AI app installed. Read the docs to learn more and get started.

AI Test Generation

Shipping tested code isn’t just a good idea. Think about that the next time you’re riding home in a robo-taxi through late-night hordes of daredevil electric scooter riders and over-served pedestrians. You’d be tempted to tuck and roll out of the moving car if its code coverage were at 30%. Fortunately, in the automotive industry, 70-80% code coverage is expected at a minimum.

When you’re starting from low test coverage, getting it up to speed can feel like a lot—especially with new features to code and bugs from past releases lurking around. You could probably pull a few all-nighters and make it happen, but that’ll just lead to sloppy code, flaky tests, and more work. Yuck.

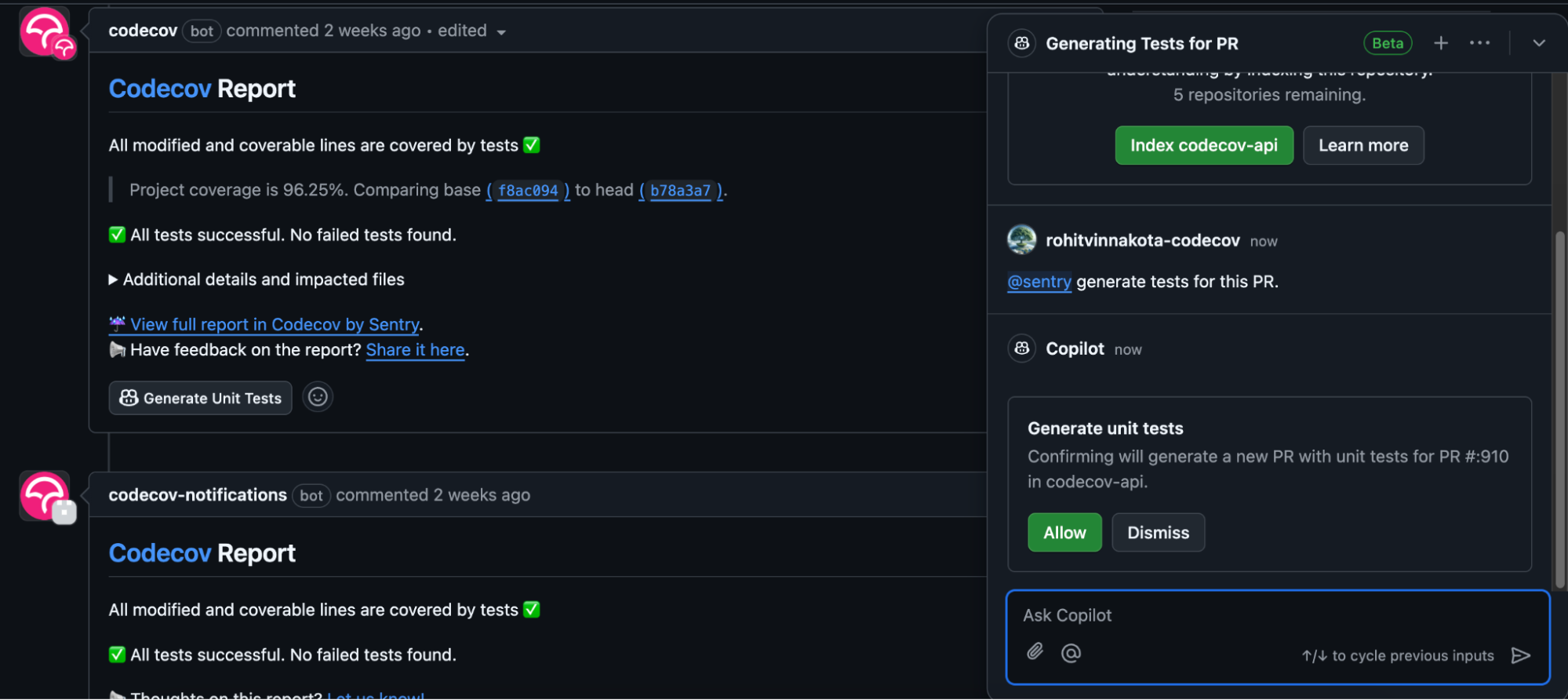

Instead, you can now use the Sentry GitHub Copilot extension to automatically create AI-generated tests for changes in your PR. It uses your coverage data, test results, and diff to build thorough, working tests that will increase your code coverage in seconds. There are two ways to generate tests: clicking the test generation button or using GitHub Copilot Chat. Here’s how to do it:

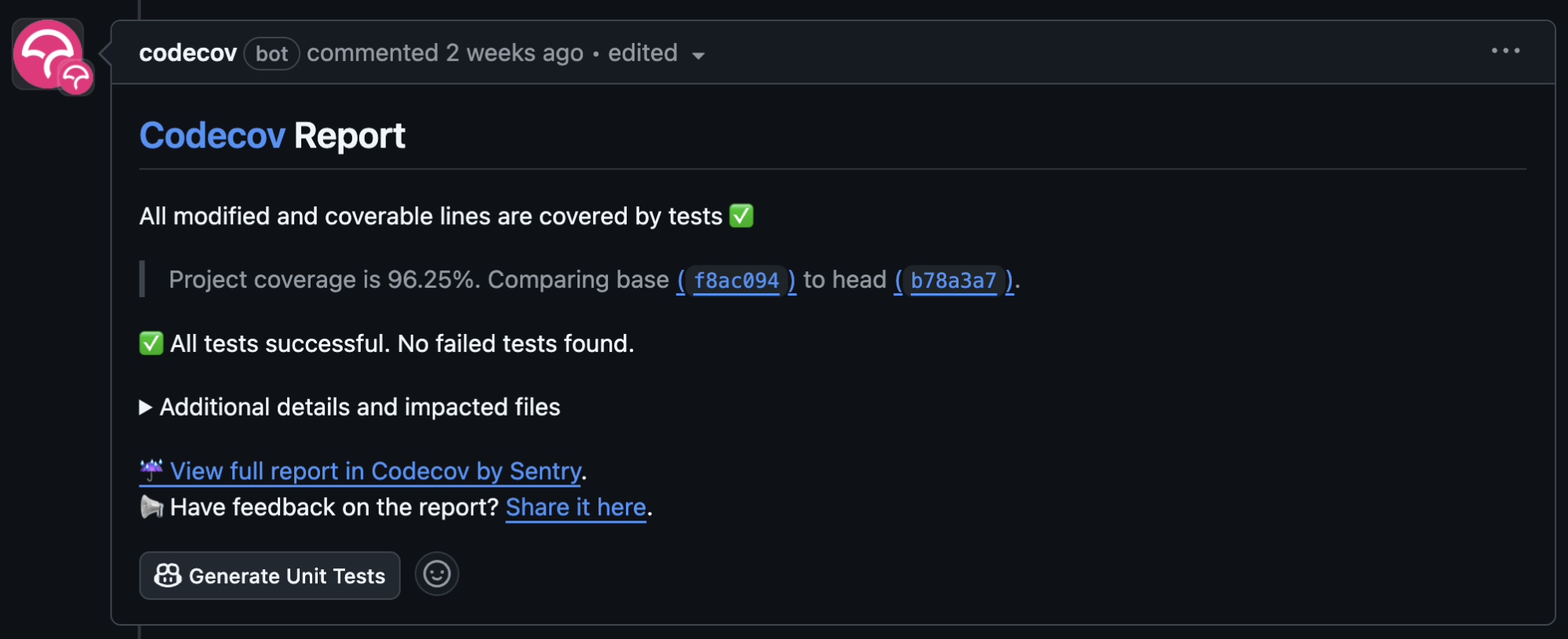

If Codecov is installed on your repo, you can click the ‘Generate Unit Tests’ button found in the Codecov PR comment.

If your repo does not have Codecov installed, you can open Copilot Chat and type @Sentry please generate tests for me. (You don’t even have to say please. It’s an LLM, so ask however you like as long as you mention Sentry.)

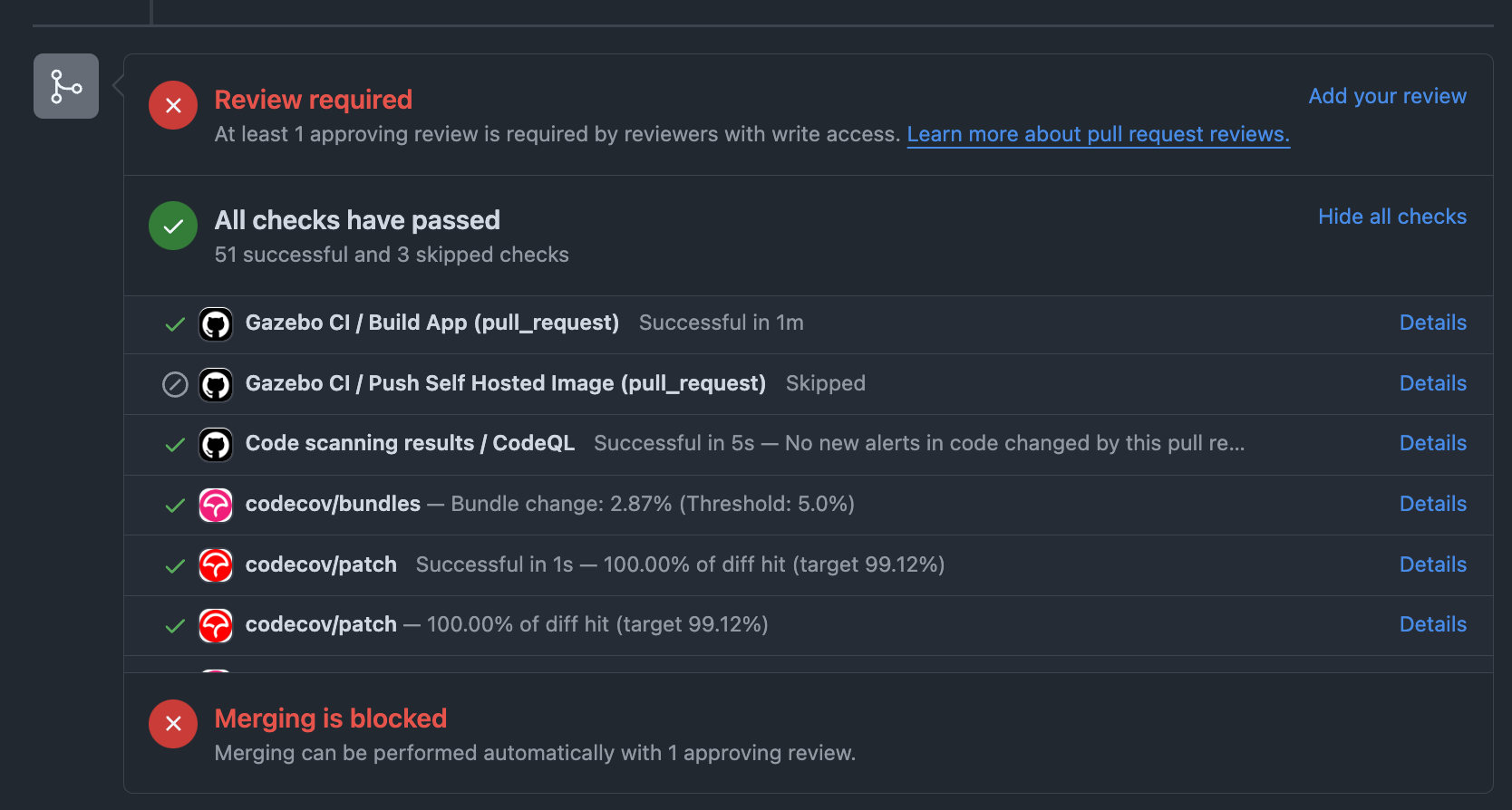

Either way, the extension will generate tests in a new PR and link to it in a PR comment. You can review the newly generated tests, and if they look good, simply merge this branch into the original PR, and your patch coverage will be 100%.
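If "patch coverage" is new to you, it is the share of lines changed in a PR that your tests actually execute. Here is a minimal sketch of that arithmetic in Python. The function name and the line-number sets are hypothetical, and this illustrates the idea rather than how Codecov computes it.

```python
def patch_coverage(changed_lines: set[int], covered_lines: set[int]) -> float:
    """Return the percentage of changed lines that tests executed.

    Both arguments are hypothetical inputs: the line numbers touched by
    the diff, and the line numbers hit during the test run.
    """
    if not changed_lines:
        # Nothing changed, so nothing is left uncovered.
        return 100.0
    hit = changed_lines & covered_lines
    return 100.0 * len(hit) / len(changed_lines)


# Before merging the generated tests: 2 of the 4 changed lines are exercised.
before = patch_coverage({10, 11, 12, 13}, {10, 11, 40})
# After: the new tests reach the remaining changed lines.
after = patch_coverage({10, 11, 12, 13}, {10, 11, 12, 13, 40})
print(before, after)  # 50.0 100.0
```

Line 40 is covered but not part of the diff, so it does not affect the result either way.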

To use AI Test Generation, install the Sentry GitHub Copilot extension, and if you’d like the test generation button in your PRs, sign up for Codecov.

Test Analytics

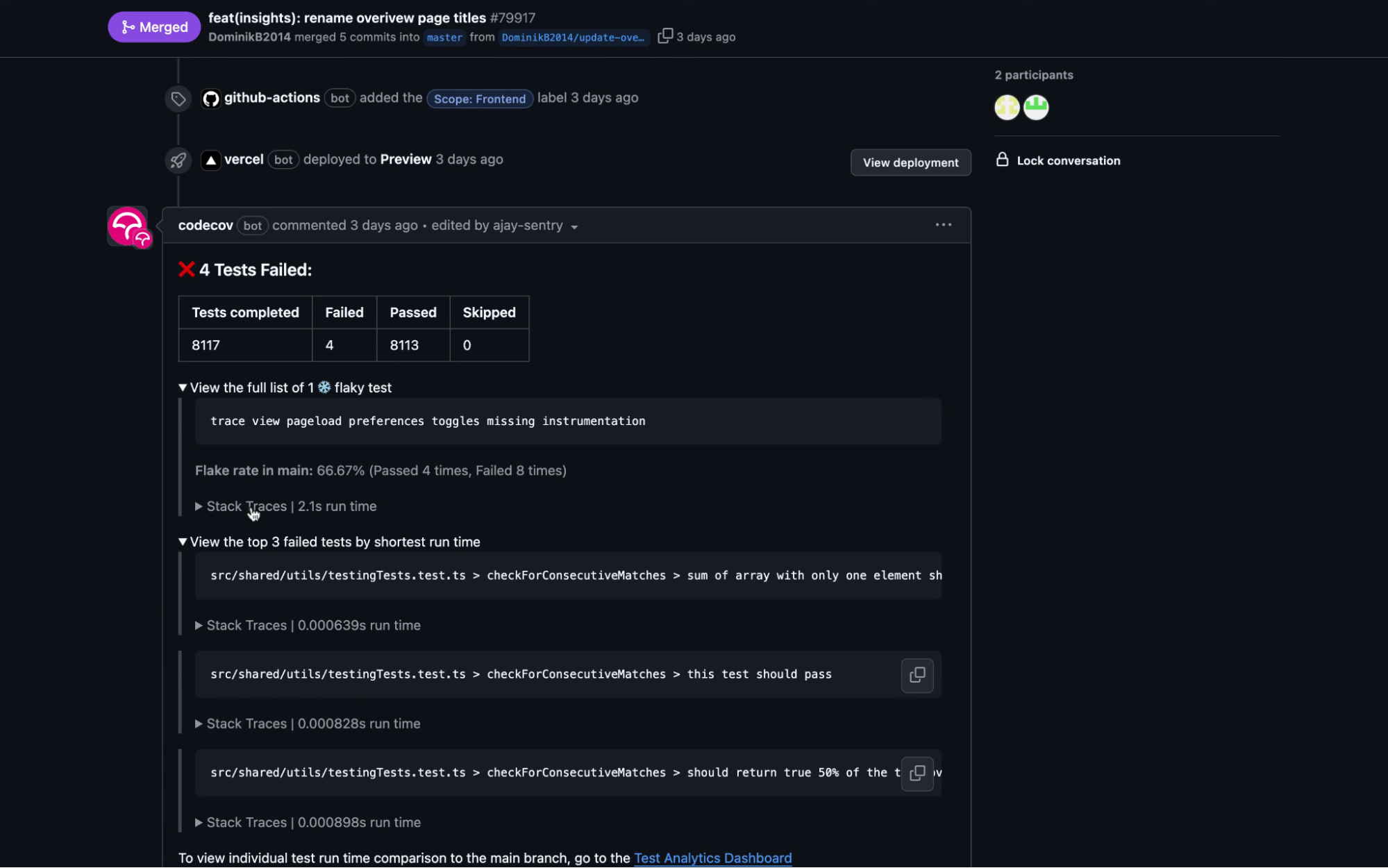

Failing tests are annoying. Flaky tests are rage-inducing – especially when you know a bogus test will probably pass the next time you run it. Dealing with failing and flaky tests in the moment is one thing, but repeated flakes and slow-as-molasses tests can ruin the developer experience and grind your CI pipeline to a halt.

Codecov Test Analytics separates flaky tests from legitimate failures, so you know which tests to re-run or skip and which ones mean your code needs fixing. You’ll also see a stack trace that makes debugging way easier. Instead of endlessly searching through failed test logs for the flaky needle in the haystack, you just copy and paste the exact function from the provided stack trace into your terminal.

To solve the bigger developer experience and CI efficiency problems, Test Analytics makes it clear which tests are causing the most problems so you know which to tackle first.

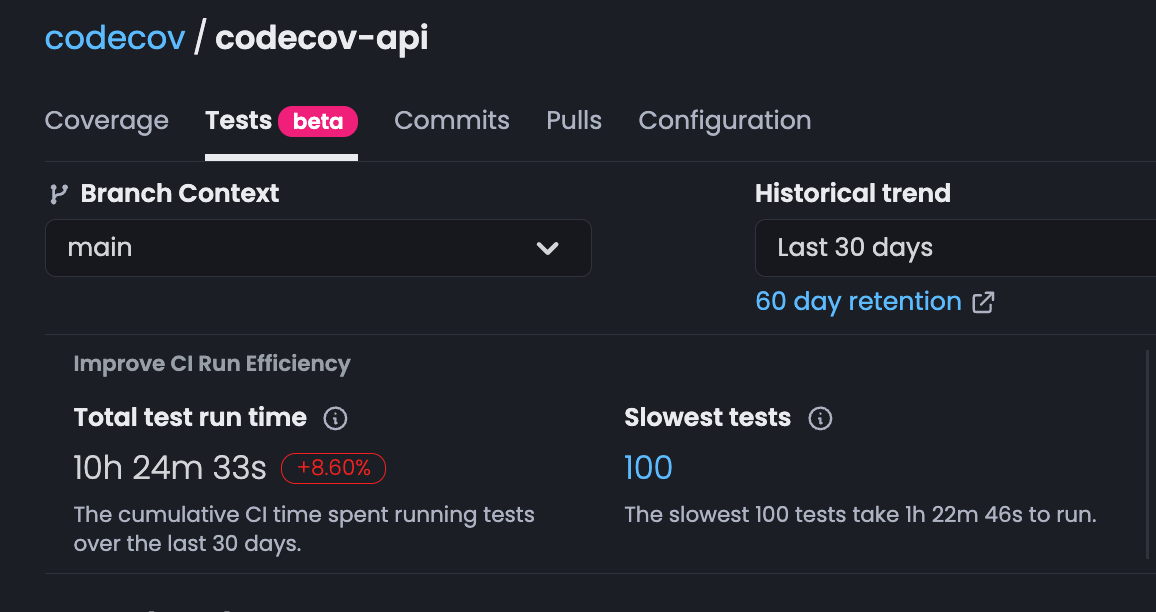

If your goal is to improve CI efficiency, you can track overall test run time compared with historical trends and see which tests run the slowest.

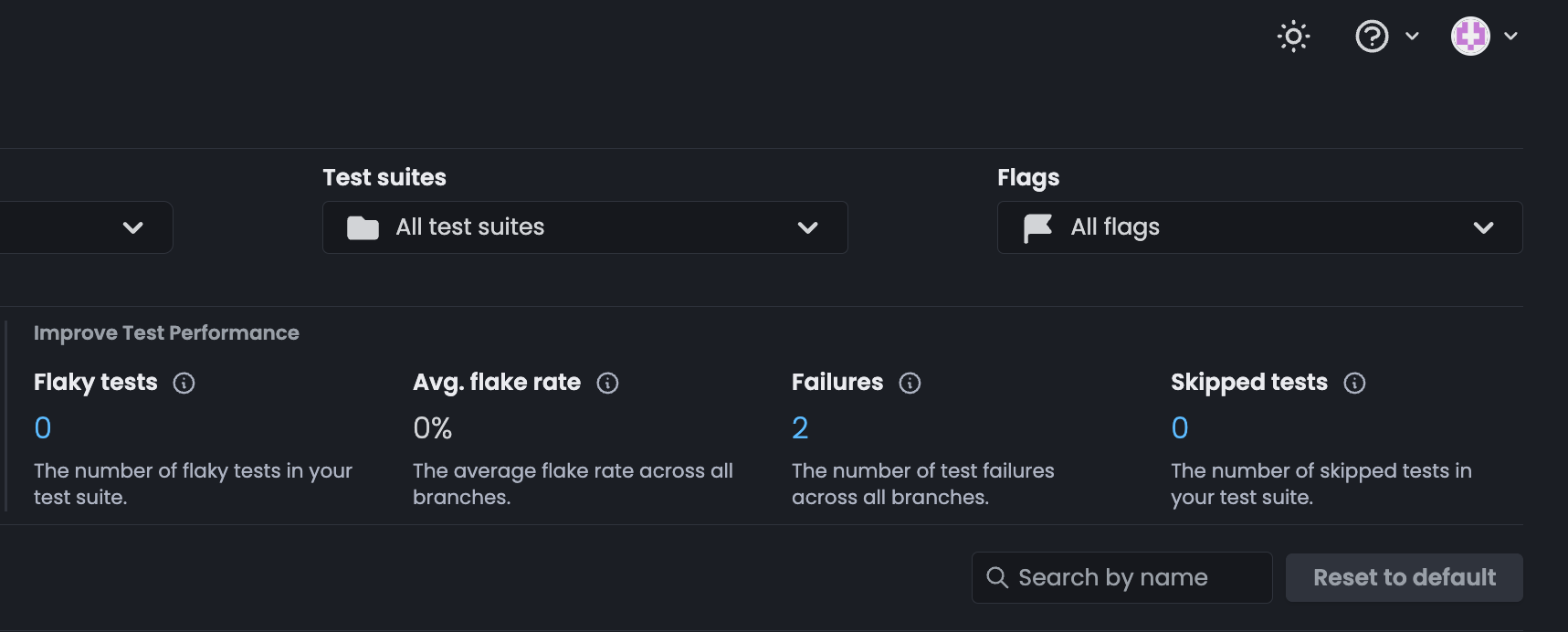

If you’re looking to improve test performance, you can check the total number of flaky tests, average flake rate, and total failures, all of which can be drilled into for in-depth analysis of problematic tests.
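To make "flake rate" concrete, here is a rough sketch in Python. It assumes one common working definition: a test flaked on a commit if it both passed and failed against that same commit. The run history and the `flake_rates` helper are made up for illustration, and Codecov's own detection may well use different signals.

```python
from collections import defaultdict

# Hypothetical run history: (test name, commit SHA, outcome).
runs = [
    ("test_login", "a1", "pass"),
    ("test_login", "a1", "fail"),     # same commit, different outcome
    ("test_login", "b2", "pass"),
    ("test_checkout", "a1", "fail"),
    ("test_checkout", "a1", "fail"),  # consistent failure: likely a real bug
    ("test_checkout", "b2", "pass"),
]


def flake_rates(runs):
    """Map each test to the fraction of commits on which it flaked."""
    outcomes = defaultdict(lambda: defaultdict(set))
    for test, commit, outcome in runs:
        outcomes[test][commit].add(outcome)

    rates = {}
    for test, by_commit in outcomes.items():
        # A commit counts as flaky when the same code both passed and failed.
        flaky = sum(1 for seen in by_commit.values() if seen == {"pass", "fail"})
        rates[test] = flaky / len(by_commit)
    return rates


print(flake_rates(runs))  # {'test_login': 0.5, 'test_checkout': 0.0}
```

Under this definition, test_checkout is a legitimate failure on commit a1 rather than a flake, because it failed every time it ran there.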

Since launching the Test Analytics Beta, participating organizations have run about 4.7 million tests, and of those, Codecov has caught about 300,000 that are flaky. That’s 300,000 times teams didn’t have to re-run their CI because they were confident in test outcomes, or 300,000 times a developer didn’t feel like ripping their hair out.

Test Analytics is available for all Codecov users, with flaky test features exclusive to Pro and Enterprise plans for private repos. To get started, you’ll need to configure your test framework to produce JUnit XML reports, then use the GitHub Action or the CLI to upload reports directly to your dashboard for instant insights.
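If you have never looked inside a JUnit XML report, it is a list of test cases with timings, plus a failure or error element on the ones that broke. Many frameworks can write one (pytest, for example, has a `--junitxml` option). The Python sketch below parses a tiny hand-written report to pull out failed tests and rank tests by duration. The report contents and the `summarize` helper are illustrative assumptions, not real output or Codecov's parser.

```python
import xml.etree.ElementTree as ET

# A tiny, hand-written JUnit-style report (illustrative, not real output).
REPORT = """<testsuite name="example" tests="3" failures="1">
  <testcase classname="tests.test_auth" name="test_login" time="0.12"/>
  <testcase classname="tests.test_auth" name="test_logout" time="2.50"/>
  <testcase classname="tests.test_cart" name="test_checkout" time="0.40">
    <failure message="assert 2 == 3">Traceback ...</failure>
  </testcase>
</testsuite>"""


def summarize(report_xml: str):
    """Return (failed test names, all test names ordered slowest first)."""
    root = ET.fromstring(report_xml)
    failed, timings = [], []
    # iter() finds <testcase> whether the root is <testsuite> or <testsuites>.
    for case in root.iter("testcase"):
        name = f"{case.get('classname')}::{case.get('name')}"
        timings.append((float(case.get("time", 0)), name))
        if case.find("failure") is not None or case.find("error") is not None:
            failed.append(name)
    slowest = [name for _, name in sorted(timings, reverse=True)]
    return failed, slowest


failed, slowest = summarize(REPORT)
print(failed)      # ['tests.test_cart::test_checkout']
print(slowest[0])  # tests.test_auth::test_logout
```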

Bundle Analysis

Speaking of rage-inducing experiences, why is it always the moment you need a website to work that it loads at an unbearable pace or not at all? You get the spinning arrow on the airline app right when you need your boarding pass, or you’re trying to pay a bill that’s due tomorrow, but the site just won’t load. There’s a strong chance it’s not just Murphy’s Law; it’s a JavaScript bundle that’s too damn big.

We updated our Bundle Analysis offering to help you avoid inadvertently increasing your bundle size too much. Codecov now highlights total bundle size changes and alerts on bundles that break your team’s pre-defined thresholds every time you open a PR.
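The threshold check itself is simple arithmetic: compare the bundle size on your PR's head commit against the base and flag it if the increase is too large. Here is a minimal Python sketch of that idea. The `compare_bundle` helper, the byte counts, and the 5% limit are all hypothetical, and the real thresholds live in your Codecov configuration.

```python
from dataclasses import dataclass


@dataclass
class BundleCheck:
    name: str
    delta_bytes: int
    delta_pct: float
    exceeds_threshold: bool


def compare_bundle(name: str, base_bytes: int, head_bytes: int,
                   max_increase_pct: float) -> BundleCheck:
    """Compare a bundle's size on the PR head against its base size."""
    if base_bytes <= 0:
        raise ValueError("base_bytes must be positive to compute a percentage")
    delta = head_bytes - base_bytes
    pct = 100.0 * delta / base_bytes
    return BundleCheck(name, delta, pct, pct > max_increase_pct)


# Hypothetical numbers: main.js grows from 400 kB to 430 kB, limit is 5%.
result = compare_bundle("main.js", 400_000, 430_000, max_increase_pct=5.0)
print(result.delta_bytes, result.delta_pct, result.exceeds_threshold)
# 30000 7.5 True
```

A percentage limit scales with the size of each bundle; an absolute byte limit would be an equally reasonable choice for small bundles.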

The updated Bundles tab gives you a historical view so you can see how bundle size changes over time. If you’re looking for extra credit, you can compare bundle size increases with changes in the performance data you’re tracking in Sentry. Additionally, you can filter by asset type (CSS, images, fonts, or JavaScript code) to optimize exactly what matters.

To get started, you’ll need to install a bundler plugin. We currently support Rollup, Vite, and Webpack. Check out the docs for framework and environment-specific details.

Try Codecov and see for yourself

All of these features are designed to make writing tests, reviewing code changes, and pre-empting performance issues less terrible than they are today. Everything is available to try right now, so set them up and let us know what you think.

If you haven’t used Codecov yet, start a 14-day free trial of Codecov Pro.